Association between the 2012 Health and Social Care Act and specialist visits and hospitalisations in England: A controlled interrupted time series analysis

Using a controlled interrupted time series analysis, James Lopez Bernal and colleagues investigate the changes in specialist visits and hospitalizations following the healthcare reforms of the 2012 Health and Social Care Act in England.

Published in the journal: PLoS Med 14(11): e1002427. doi:10.1371/journal.pmed.1002427

Category: Research Article

doi: https://doi.org/10.1371/journal.pmed.1002427

Summary

Using a controlled interrupted time series analysis, James Lopez Bernal and colleagues investigate the changes in specialist visits and hospitalizations following the healthcare reforms of the 2012 Health and Social Care Act in England.

Introduction

The 2012 Health and Social Care Act (HSCA) in England has been described as “the biggest and most far reaching [reorganisation] in the history of the NHS” [1, 2]. The reforms centred around the introduction of general practitioner (GP) led Clinical Commissioning Groups (CCGs), which received about two-thirds of the National Health Service (NHS) budget (£66.8 billion in 2015–2016) to commission (plan and contract) secondary care, including hospital and specialist services [1]. CCGs represent all GP practices in their local area, and the key difference from the previous commissioning structures was purported to be a major new role for GPs as key decision makers in the commissioning process [1, 3, 4].

Health policy experts and parliamentary and professional bodies have hypothesised that GP-led commissioning could potentially lead to reductions in referrals to specialist care, as either an intended or unintended consequence of the Act [5–10]. They theorise that by giving the gatekeepers, who control access to specialist care, a greater role in budget holding and the purchasing of specialist care, they may be incentivised to reduce referrals [5, 6]. Indeed, Smith and Mays suggest that the primary rationale for GP-led commissioning is to encourage a shift away from expensive secondary care towards more community-based care [6]. Furthermore, 2 out of the 3 main reasons cited by the government for introducing the reforms centred around a need to control costs, although the mechanisms by which GP-led CCGs would achieve cost savings were not made explicit [4]. While the potential for much-needed cost savings in the NHS as a result of reduced secondary care activity has been viewed positively, some—including the National Audit Office and the Royal College of Surgeons—have raised concerns that a reduction in referrals as a consequence of the HSCA and policies introduced by CCGs could result in inequitable rationing of care and missed diagnoses and that their role in commissioning presents GPs with a conflict of interest [7–10].

CCGs and GPs could reduce secondary care activity through various means, including restricting referral criteria, developing community-based care models, investing in preventative healthcare, or promoting services to prevent readmissions [6, 9, 10]. There also exist potential incentives for them to do so: reducing expensive care would allow CCGs to invest savings in other services. Furthermore, CCGs are required to maintain a surplus; otherwise, they cannot access additional funding in the form of a “Quality Premium” of up to £5 per person within the population covered by the CCG [11]. In addition, there are incentives to individual GPs: savings from reduced specialist visits and hospitalisations could allow investment in community-based services provided by GP practices themselves; also, some CCGs have introduced direct payments of £6,000–£11,000 to GP practices for reducing referral rates [7, 8]. Nevertheless, whether these provide a real incentive in practice depends on how engaged GPs feel with the new commissioning organisations, how much responsibility they feel for their budgets, and how much influence they have on the commissioning process. Previous policies that have begun with an intention to place GPs at the centre of commissioning have ultimately resulted in the formation of bureaucratic bodies that have become detached from local practitioners [6]. Furthermore, given existing evidence that increasing GP workload may increase referral rates, it is possible that the increased administrative burden associated with their new commissioning role could instead result in an increase in referrals [12, 13].

We use a controlled interrupted time series (CITS) design to compare changes in the trends of specialist referrals and hospital admissions in England before and after the HSCA with those in Scotland, where the reforms did not occur. We hypothesise that the 2012 HSCA was associated with a reduction in specialist visits and hospitalisations.

Methods

Ethics

Ethical approval was obtained from the London School of Hygiene and Tropical Medicine Observational/Interventions Research Ethics Committee (LSHTM Ethics Ref: 10505).

The intervention

The 2012 HSCA introduced broad ranging and complex reforms to the NHS and public health services in England. These have been described in more detail elsewhere [1, 2, 4, 14, 15]. The principal change was in the way secondary care services were commissioned within the NHS. Prior to 2012, regional healthcare administrative bodies known as primary care trusts (PCTs) and Strategic Health Authorities (SHAs) were responsible for all commissioning. These were abolished as part of the Act and were replaced by CCGs. CCGs are led by a governing body, which includes a representative from each member GP practice, lay members, a secondary care doctor, and a registered nurse [3]. CCGs were first introduced in shadow form (working alongside PCTs) in April 2012 following the enactment of the HSCA; they then took over full budgetary responsibility in April 2013 [1].

Control

While a control is not required in interrupted time series studies, the primary comparison being between preintervention and postintervention trends within the study population, a control population can help to exclude additional confounding events and cointerventions. Healthcare is a largely devolved power in the United Kingdom and the HSCA only applied to England; therefore, we considered the other 3 nations of the UK (Northern Ireland, Scotland, and Wales) as potential controls. These are neighbouring countries with similar population demographics (S1 Table), similar health systems, and shared political structures. Data equivalent to those in England were not available from Northern Ireland; therefore, it was excluded. We chose Scotland as the control, as preintervention data were more stable than for Wales. We also include an analysis as a supplementary appendix with Wales as the control (S2 Table, S1 and S2 Figs).

Data and study population

We obtained quarterly data on all hospital admissions and outpatient specialist visits in NHS hospitals in England between April 2007 and December 2015 from the Health and Social Care Information Centre: Hospital Episode Statistics (HES) [16]. Hospital admissions include all inpatients in NHS hospitals as well as NHS-funded inpatients in the private sector. NHS outpatient activity in England is hospital based; specialist visit data include outpatients in English NHS hospitals and NHS-funded outpatients in the private sector. Our outcomes were total hospital admissions, elective (planned) and emergency (unplanned) admissions, total first specialist visits (excluding follow-up appointments), and GP-referred first specialist visits. Equivalent data for Scotland were obtained from the NHS Scotland Information Services Division: Scottish Medical Records (SMR) [17]. We obtained demographic data for England and Scotland from the Office for National Statistics including midyear population estimates (for denominators), age and sex distribution, crude birth rate, and crude death rate [18]. The Scottish hospital admission data did not include obstetric and psychiatric hospitals and the outpatient visit data did not include visits to nurses, dentists, or other allied health professionals. We therefore excluded these categories from the English data to make the 2 datasets comparable. Data quality reports identified a coding error in the outpatient data prior to April 2010 (3 years before the introduction of the policy); we therefore excluded these data from the analysis [19]. The raw data are provided in the supplementary appendix (S1 and S2 Data). A complete list of the data codes and algorithms used in the data extraction is also provided in the supplementary appendix (S3 and S4 data).

Statistical analysis

We used a CITS design, which allowed us to control both for preintervention trends in the outcome and for potential confounding events that would have affected both the control and the study groups. Although a Poisson distribution is assumed for individual counts, we had very large numbers and the aggregate data were well approximated by a Gaussian distribution (log transformed). Therefore, we used a simple linear segmented regression model to estimate the change in trend in hospital admissions and outpatient visits following the introduction of the HSCA [20]. In order to account for the year during which CCGs were in shadow form, we allowed a one-year phase-in period by excluding the second quarter of 2012 to the first quarter of 2013 from the analysis. We modelled the association as a slope change rather than an immediate level change because choice of providers and referral patterns were likely to change gradually when existing contracts expired and new models of care developed [1]. Adjustments were made for any seasonal effect using a Fourier term [20].
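The segmented regression described above can be illustrated with a minimal sketch. It is not the authors' Stata code: the data are synthetic, the quarter indexing (0 = Q2 2007), the helper names `ols` and `design_row`, and the choice to count the slope-change term from the last phase-in quarter are all assumptions made for illustration, and the autoregressive adjustment is left out.

```python
import math
import random

def ols(X, y):
    """Ordinary least squares via the normal equations (Gauss-Jordan elimination)."""
    k = len(X[0])
    # Augmented matrix [X'X | X'y]
    A = [[sum(r[i] * r[j] for r in X) for j in range(k)]
         + [sum(r[i] * yi for r, yi in zip(X, y))] for i in range(k)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        p = A[col][col]
        A[col] = [v / p for v in A[col]]
        for r in range(k):
            if r != col:
                f = A[r][col]
                A[r] = [vr - f * vc for vr, vc in zip(A[r], A[col])]
    return [A[i][k] for i in range(k)]

def design_row(t, last_excluded):
    """Intercept, baseline trend, slope-change term, and one Fourier pair (period 4 quarters)."""
    post = max(0.0, t - last_excluded)  # 0 before the intervention, then 1, 2, 3, ...
    return [1.0, t, post,
            math.sin(2 * math.pi * t / 4), math.cos(2 * math.pi * t / 4)]

# Hypothetical quarter index: 0 = Q2 2007, ..., 34 = Q4 2015.
PHASE_IN = range(20, 24)  # Q2 2012 to Q1 2013, excluded from the fit
quarters = [t for t in range(35) if t not in PHASE_IN]

# Synthetic log rates with a known slope change of about 1.1% per quarter.
rng = random.Random(0)
true_beta = [math.log(60.0), 0.005, 0.011, 0.02, 0.01]
X = [design_row(float(t), 23.0) for t in quarters]
y = [sum(b * x for b, x in zip(true_beta, row)) + rng.gauss(0.0, 0.002) for row in X]

beta = ols(X, y)
baseline_trend_pct = (math.exp(beta[1]) - 1) * 100  # quarterly % change before the Act
slope_change_pct = (math.exp(beta[2]) - 1) * 100    # additional quarterly % change after
print(f"baseline trend: {baseline_trend_pct:.2f}% per quarter")
print(f"slope change:   {slope_change_pct:.2f}% per quarter")
```

Because the outcome is modelled on the log scale, exponentiating a coefficient gives a relative (percentage) change per quarter, which is how the trend changes are reported in the results.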

We first estimated the slope changes in England and in Scotland independently. We then used an interaction model for the CITS to estimate the additional trend change in England over and above any change in Scotland, while controlling for any difference in the preintervention trends of the 2 groups (S1 Text). We examined the preintervention data a priori for linearity and autocorrelation at different lags using scatterplots, plots of residuals, and partial autocorrelation functions [20]. A linear trend provided a reasonable fit for all outcomes in the primary model. We included an autoregressive term at the appropriate lag to adjust for any detected autocorrelation. All analyses were conducted using Stata version 14.
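The exact interaction model is given in S1 Text, which is not reproduced here. As a sketch only, a CITS model of this kind is often written in the following form; the symbols are illustrative notation rather than the paper's own, a single shared pair of Fourier terms is an assumption, and the autoregressive term is omitted:

$$
\log(Y_{gt}) = \beta_0 + \beta_1 t + \beta_2 P_t + \beta_3 G_g + \beta_4 G_g t + \beta_5 G_g P_t + \gamma_1 \sin\left(\frac{2\pi t}{4}\right) + \gamma_2 \cos\left(\frac{2\pi t}{4}\right) + \varepsilon_{gt}
$$

Here $Y_{gt}$ is the rate per 1,000 population in country $g$ and quarter $t$, $G_g$ equals 1 for England and 0 for Scotland, and $P_t$ counts the quarters elapsed since the end of the phase-in period (0 beforehand). Under this parameterisation $\beta_2$ is the slope change in the control, $\beta_4$ absorbs any difference in preintervention trends, and $\beta_5$ is the additional slope change in England, so that $\exp(\beta_5) - 1$ corresponds to a relative trend change for the study group versus the control.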

Protocol

The original study protocol from the ethics application is available as a supplementary appendix (S1 Protocol). The analysis has only differed from this protocol in that Scotland was selected as the primary control, as it had the most stable data; Wales was included as an additional control following reviewers’ recommendations. Northern Ireland was not included as equivalent data to that in England was not available. Furthermore, in this protocol, we also proposed including patient experience measures as secondary outcomes; this was ultimately not included within the current study but we plan to conduct a future study looking at the potential impact on patient experience.

Reporting

This study is reported as per the REporting of studies Conducted using Observational Routinely-collected health Data (RECORD) Statement (S1 Checklist).

Results

Population characteristics

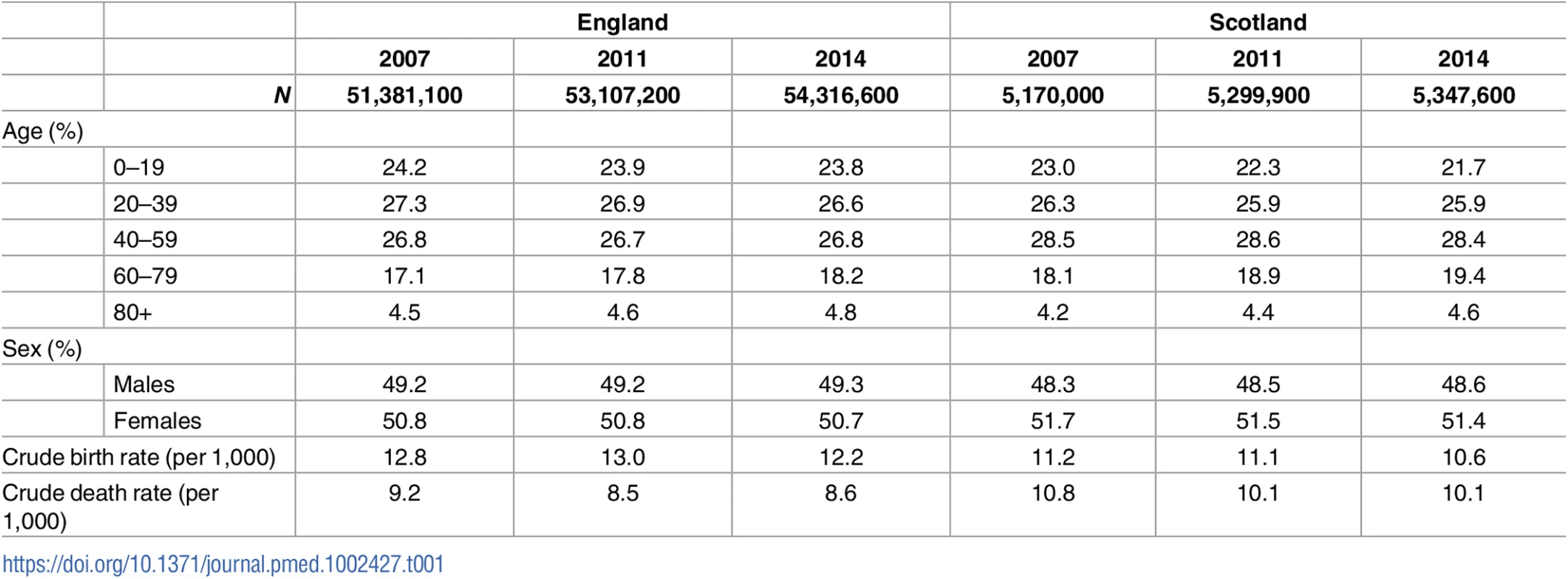

Age and sex distributions were similar in both England and Scotland (Table 1 and S1 Table). Both populations were slowly aging; the proportion aged 60 or older increased from 21.6% to 23.0% in England and from 22.3% to 24.0% in Scotland. The crude birth rate was consistently about 1.8 per 1,000 higher in England than in Scotland while the crude death rate was consistently about 1.5 per 1,000 lower.

Tab. 1. Population characteristics of England and Scotland, 2007–2014.

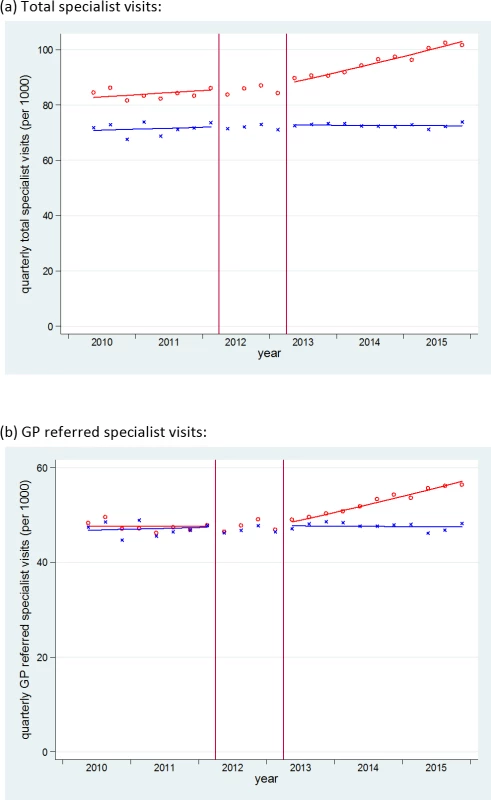

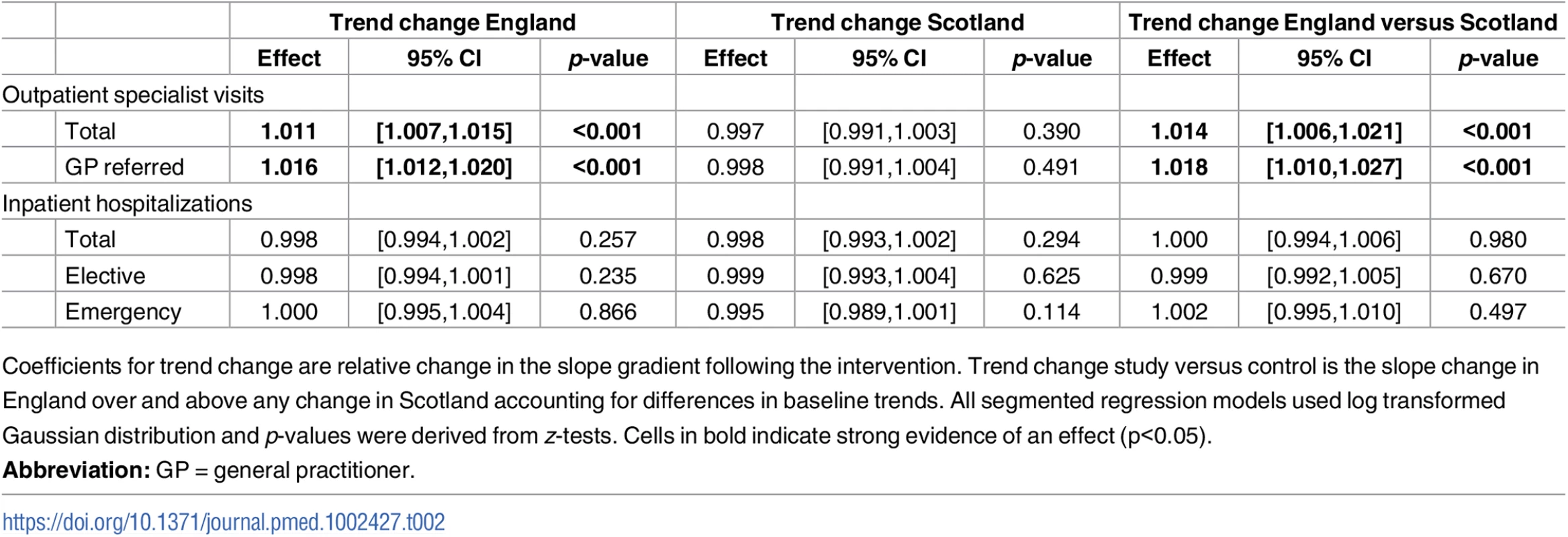

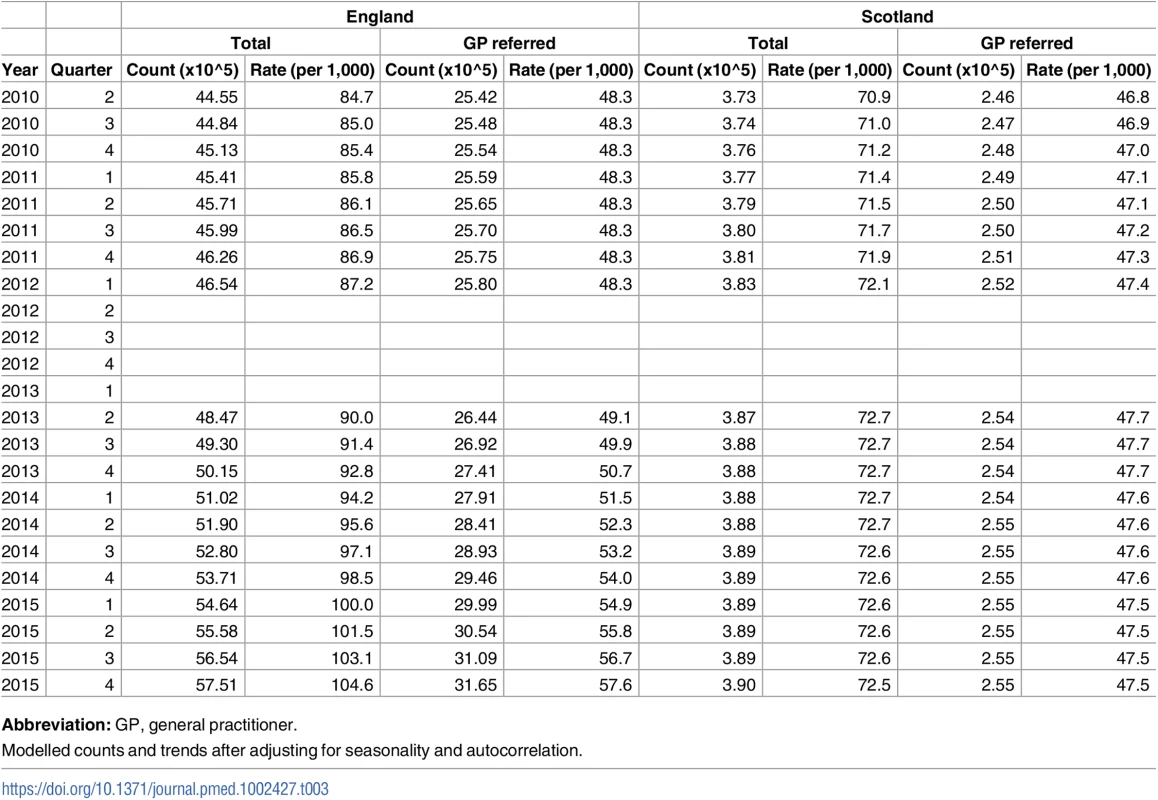

Changes in outpatient specialist visits

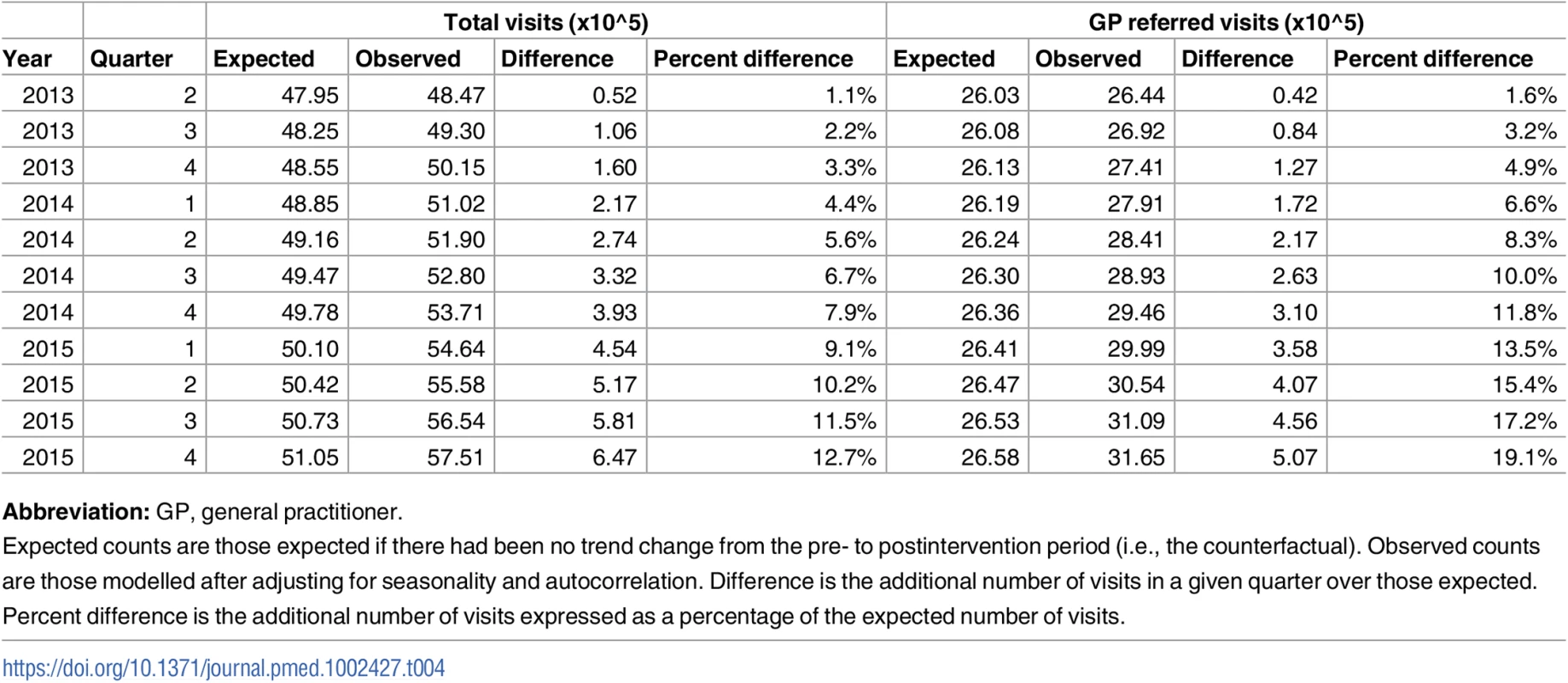

Changes in trends of specialist visits are shown in Fig 1 and Table 2. Absolute counts and the rate per 1,000 for each quarter are presented in Table 3. In England, total specialist visits rose slowly by 0.5% per quarter (from 84.7 per 1,000 in quarter 2 [Q2] 2010 to 87.2 per 1,000 in Q1 2012) in the baseline. After the intervention, they rose approximately 3 times faster at 1.5% per quarter (from 90.0 per 1,000 in Q2 2013 to 104.6 per 1,000 in Q4 2015). This was equivalent to an increase in slope (additional quarterly increase) of 1.1% (95% CI 0.7%–1.5%), which resulted in a 12.7% higher rate of specialist visits (647,000 additional visits) by the end of the postintervention period in Q4 2015, compared to the underlying (counterfactual) trend. The slope increase was even more marked for GP-referred visits. During the preintervention period, these had a flat trend at 48.3 visits per 1,000 per quarter (trend 1.000, 95% CI 0.998–1.002). After the intervention, this trend increased by 1.6% per quarter (from 49.1 per 1,000 in Q2 2013 to 57.6 per 1,000 in Q4 2015). This was equivalent to an increase in slope of 1.6% (95% CI 1.2%–2.0%) per quarter, which resulted in a 19.1% higher than expected rate of specialist visits (507,000 additional visits) by the end of the study period. For those outcomes that showed strong evidence of a trend change (total and GP-referred specialist visits in England), we have presented observed compared to expected counts in the postintervention period in Table 4. Total specialist visits had weak evidence of a seasonal effect with peaks during Q3 (Fourier sin wave p = 0.055, cos wave p = 0.010).
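The link between a quarterly slope change and the cumulative excess by Q4 2015 can be checked with simple compounding. The sketch below assumes 11 postintervention quarters (Q2 2013 to Q4 2015 inclusive) and uses the rounded published slopes, so it is expected to land close to, but not exactly on, the reported 12.7% and 19.1%; the function name is hypothetical.

```python
def excess_rate_pct(slope_change_pct, quarters_elapsed):
    """Percentage by which the rate exceeds the counterfactual trend after
    compounding a relative slope change over the given number of quarters."""
    return ((1 + slope_change_pct / 100) ** quarters_elapsed - 1) * 100

# Assumption: Q2 2013 counts as quarter 1, so Q4 2015 is quarter 11.
POST_QUARTERS = 11

total_excess = excess_rate_pct(1.1, POST_QUARTERS)  # total specialist visits
gp_excess = excess_rate_pct(1.6, POST_QUARTERS)     # GP-referred specialist visits
print(f"total: {total_excess:.1f}%  GP-referred: {gp_excess:.1f}%")
```

Any small gap from the published figures would be consistent with the estimates having been computed from unrounded coefficients.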

Fig. 1. Time series of outpatient specialist visits in England and Scotland.

Red o = England, blue x = Scotland. Lines = deseasonalized linear trend. Vertical lines delineate the intervention phase (between quarter 2 [Q2] 2012 and Q2 2013). The data underlying this figure are presented in Table 3. GP, general practitioner.

Tab. 2. Changes in trend in specialist visits and hospitalisations following the intervention.

Coefficients for trend change are relative change in the slope gradient following the intervention. Trend change study versus control is the slope change in England over and above any change in Scotland accounting for differences in baseline trends. All segmented regression models used log transformed Gaussian distribution and p-values were derived from z-tests. Cells in bold indicate strong evidence of an effect (p<0.05).

Tab. 3. Absolute counts and rates of specialist visits in each quarter.

Abbreviation: GP, general practitioner.

Tab. 4. Observed and expected counts for specialist visits in the postintervention period in England.

Abbreviation: GP, general practitioner.

Specialist visits in Scotland, however, showed no significant change after the policy. Total specialist visits and GP-referred specialist visits remained almost level at about 72 per 1,000 per quarter (preintervention trend 1.002, 95% CI 0.999–1.006; postintervention trend 1.000, 95% CI 0.996–1.003) and 47 per 1,000 per quarter (preintervention trend 1.002, 95% CI 0.998–1.005; postintervention trend 1.000, 95% CI 0.996–1.003), respectively, throughout the study period.

After controlling for trends in Scotland, the CITS analysis produced similar results. The magnitude of the change in slope in total specialist visits in England increased slightly to 1.4% (95% CI 0.6%–2.1%) per quarter (a 15.9% higher rate by the end of the study period). The change in trend in GP-referred specialist visits increased to 1.9% (95% CI 1.1%–2.7%) per quarter (a 22.5% higher rate than expected by the end of the study period).

The magnitude of the differential increase in trend in England was even greater when using Wales as a control series, although this was partly due to an independent reduction in the trend in Wales (S2 Table and S1 Fig).

Changes in inpatient hospitalisations

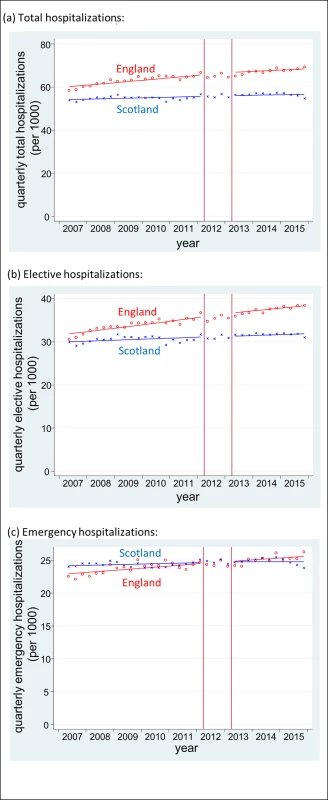

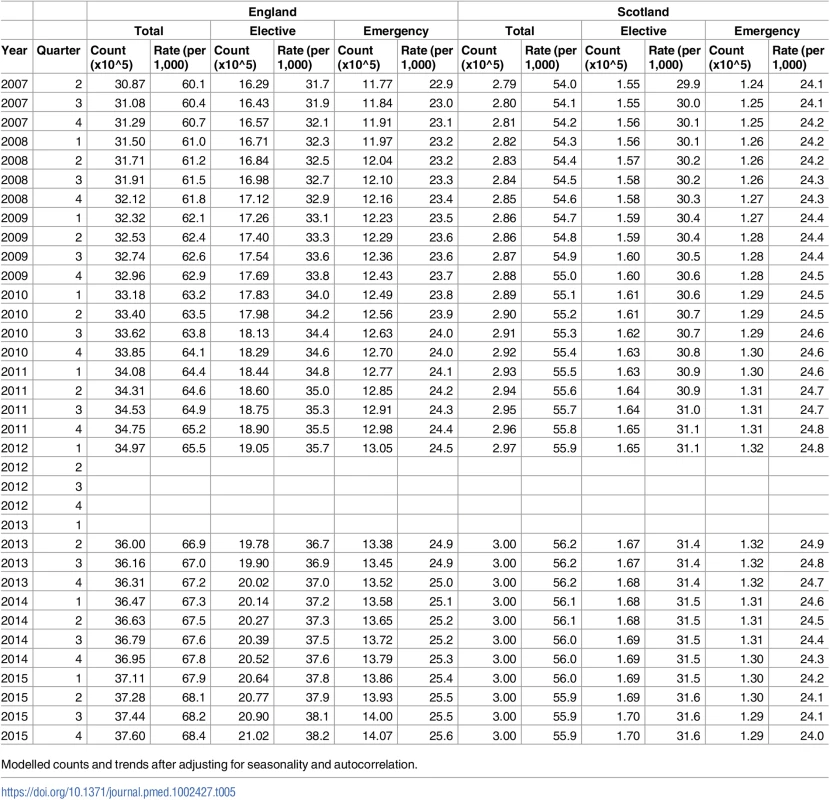

Changes in trends in hospitalisations following the HSCA are shown in Fig 2 and Table 2. Absolute counts and the rate per 1,000 for each quarter are presented in Table 5. In England, there were slowly increasing trends in all hospitalisations during the baseline period. Total hospitalisations increased by 0.5% per quarter (from 60.1 per 1,000 in Q2 2007 to 65.5 per 1,000 in Q1 2012), elective admissions increased by 0.6% per quarter (from 31.7 to 35.7 per 1,000), and emergency admissions increased by 0.3% per quarter (from 22.9 to 24.5 per 1,000). Total hospitalisations and emergency hospitalisations had a seasonal effect with winter peaks in Q4 (emergency hospitalisations Fourier terms: sin wave p = 0.002, cos wave p = 0.039). There were no statistically significant changes in any of these trends following the HSCA. Slope changes were −0.2% (95% CI −0.6%–0.2%), −0.2% (95% CI −0.6%–0.1%), and 0.0% (95% CI −0.5%–0.4%) per quarter for total, elective, and emergency hospitalisations, respectively.

Fig. 2. Time series of inpatient hospitalisations in England and Scotland.

Red o = England, blue x = Scotland. Lines = deseasonalized linear trend. Vertical lines delineate the intervention phase (between quarter 2 [Q2] 2012 and Q2 2013). The data underlying this figure are presented in Table 5.

Tab. 5. Absolute counts and rates of hospitalisations in each quarter.

Modelled counts and trends after adjusting for seasonality and autocorrelation.

Trends in Scotland were flatter during the baseline. Total hospitalisations increased by 0.2% per quarter (from 54.0 to 55.9 per 1,000), elective admissions increased by 0.2% per quarter (from 29.9 to 31.1 per 1,000), and emergency admissions increased by 0.2% per quarter (from 24.1 to 24.8 per 1,000). Again, there was no evidence of any change in these trends after the HSCA: slope changes were −0.3% (95% CI −0.7%–0.2%), −0.1% (95% CI −0.7%–0.4%) and −0.5% (95% CI −1.1%–0.1%), respectively.

The results of the CITS analysis were again similar. The differential slope changes in England (that is, the additional quarterly change following the HSCA after controlling for trends in Scotland) were: 0.0% (95% CI −0.6%–0.6%) per quarter for total hospitalisations, −0.1% (95% CI −0.7%–0.5%) per quarter for elective hospitalisations, and 0.2% (95% CI −0.5%–1.0%) per quarter for emergency hospitalisations.

Results using Wales as a control instead of Scotland were similar (S2 Table and S2 Fig).

Discussion

To our knowledge, this is the first study of the potential impact on secondary care activity of a universal, national policy that gave control of an unprecedented two-thirds of the English NHS budget to GP-led CCGs. Contrary to the underlying hypothesis, we found no evidence of a reduction in hospitalisations or specialist visits in England following the HSCA. Moreover, we found evidence of an increase over and above the underlying trend in specialist visits in England, with no comparable increase in Scotland, where this policy did not occur. This increase was equivalent to approximately 3.7 million additional specialist visits since the policy was implemented (compared to those expected), of which the majority (approximately 2.9 million) were GP referred.

We used a robust CITS design. By modelling long-term underlying trends, we controlled for secular changes in practice and artefactual changes due to regression to the mean. Selection bias is only an issue in the unlikely event that the population changed suddenly and substantially in contrast to the underlying trend and differentially from trends in the control. S1 Table shows that population characteristics maintained stable trends over the study period, suggesting that this is not an alternative explanation for our findings. Furthermore, we controlled for unknown confounding events coincident with the policy by including Scotland as a comparator. Our study is also based on a very large population with stable trends in the outcomes before and after the intervention; therefore, we are well powered to detect effects. Finally, the compulsory nature and large scale of the intervention again limits selection bias and increases both the internal and external validity of our results.

Our study has several limitations. First, it is possible that the observed changes in trends could have been due to other concurrent policies targeting these outcomes but that did not occur in the control population. Following a literature review, we found one such national policy: an “enhanced service” encouraging GPs to provide extra support for patients deemed at risk of unplanned admission to the hospital; however, this was introduced a year after the Act and only targeted 1 of our outcomes (emergency admissions) in which we did not see a change [21]. We also considered the fact that the Act included a broad range of changes alongside GP-led commissioning and that observed changes in trends might be due to other aspects of the reforms. However, most of the other changes were support structures for the changes to commissioning (such as accountability systems and services regulating specialist care providers) that would be considered integral to the intervention itself, or structural changes to public health and preventative services that are likely to have little direct impact on hospitalisations or specialist visits [2, 4]. Second, smaller-scale effects on certain specialties or diagnoses may have been diluted by the scale of our data. However, as the first study of this nationwide policy, and given the government’s aim to address rising demands and treatment costs within the NHS as a whole [4], our goal was to examine the association between the policy and trends in specialist visits and hospitalisations. Third, while we have nearly 3 years of postintervention data, it is possible that some effects of GP-led commissioning have not yet become evident. For example, GPs may have chosen to invest more in preventative services, which can take several years to result in population-level reductions in disease. Finally, our study uses routine data that were not specifically created to answer this research question. 
However, we use the data in high-level aggregate analysis and only use final, rather than provisional, data, which are regarded as complete. Therefore, quarterly changes are unlikely to be due to issues such as data completeness or misclassification [22].

Following the introduction of the HSCA, the Department of Health (DH) called for research to evaluate its impact [23]. Nevertheless, initial proposals were rejected, and, while the DH has published an evaluation focussing on the processes of the reforms, we were unable to find any studies looking at the impact of this policy on hospital activity [23, 24]. There have been studies of previous policies that handed greater budgetary responsibility to GPs in the UK and in Israel [25–32]. However, the results of these studies are mixed and difficult to interpret, as all used simple pre-post designs, which do not take into account underlying trends in hospitalisations or specialist visits, and they examined smaller policies, which were voluntary and subject to volunteer selection bias. The lack of control for underlying trends in these studies is particularly important because study groups often appear to have had unusually high referral rates prior to the intervention (partly because budget allocations based on existing referral rates incentivized practices to inflate referrals before becoming budget holders) [27]. Any reduction could therefore have simply been due to regression to the mean.
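The regression-to-the-mean problem with simple pre-post designs can be made concrete with a small simulation. Everything in it is hypothetical: the practice-level referral rates, the noise levels, and the rule that the top 20% of practices in the first period form the "study group" are illustrative assumptions, and no intervention effect is simulated at all.

```python
import random
import statistics

rng = random.Random(42)
N_PRACTICES = 5000

# Each practice has a stable underlying referral rate; each measurement adds noise.
true_rate = [rng.gauss(50.0, 5.0) for _ in range(N_PRACTICES)]
pre = [r + rng.gauss(0.0, 5.0) for r in true_rate]   # measured before the "policy"
post = [r + rng.gauss(0.0, 5.0) for r in true_rate]  # measured after; true rates unchanged

# Study group: practices with unusually high referral rates in the pre period.
cutoff = sorted(pre)[int(0.8 * N_PRACTICES)]
selected = [i for i in range(N_PRACTICES) if pre[i] >= cutoff]

pre_mean = statistics.mean(pre[i] for i in selected)
post_mean = statistics.mean(post[i] for i in selected)
overall_change = statistics.mean(post) - statistics.mean(pre)

print(f"selected practices: pre {pre_mean:.1f}, post {post_mean:.1f}")
print(f"all practices: change {overall_change:+.2f}")
```

The selected group shows an apparent fall in referrals even though nothing changed, while the full population stays flat. Modelling the underlying trend and comparing against a control series, as in a CITS design, is one way to guard against reading such a fall as a policy effect.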

Our findings suggest that, on a national scale, the concerns raised around restrictions in access to specialist services and rationing of care have not been realised. However, the lack of decrease in hospitalisations and the unanticipated increase in specialist visits also suggest the theorised shift in care away from hospitals to less expensive community settings does not appear to have occurred and, if anything, the increase in specialist visits may have led to cost increases. There are a number of possible reasons why specialist visits and hospitalisations did not decrease. First, while CCGs intended to increase clinical involvement in commissioning, survey evidence suggests that some GPs do not feel fully engaged with their CCG [33]. For example, the majority of GPs are CCG members but do not have a formal role in the governing body, and this group reported much lower levels of influence and ownership than governing body members. A lack of engagement with members may mean that many GPs feel detached from their CCG and under little pressure to make cost savings or unable to influence the way local health services are managed [33]. Second, the financial incentive for CCGs to reduce costs and GPs to change referral patterns may have been too small or too indirect, and, while practice income may have increased by shifting some care from hospitals to community-based care provided by GPs, concerns about potential conflicts of interest could have discouraged this [7]. Finally, it is also possible that referrals to specialists were already appropriate prior to the intervention, resulting in little scope for further reduction. This is supported by evidence that variations in referral rates in the NHS are primarily explained by characteristics of the patient population and not factors affecting GP services [34].

The increase in specialist visits in our study was surprising and may be an unintended consequence of the policy. We identified annual data on NHS costs for outpatient specialist visits from an independent source (S3 Fig). This also appears to show an increase in costs, corroborating our findings regarding upward trends in specialist visits. One explanation might be that the new responsibility for managing budgets has inadvertently increased administrative workload for GPs, resulting in less time to see patients. Under such circumstances, GPs may reduce their threshold for referral to avoid missing a diagnosis. There is some existing evidence to suggest that increased workload and reduced consultation time is associated with increased referral rates [12, 13], although other studies have shown no effect [35]. We considered decreasing GP numbers or increasing supply of specialists as other potential explanations for this finding. However, although there was a slight decrease in the number of full-time equivalent GPs (from 0.69 to 0.67 per 1,000 population) between 2009 and 2010, this does not coincide with the increase in specialist visits, and the number of GPs remained stable from 2010 and, in fact, increased back to 0.69 per 1,000 population in 2014 [36]. Number of specialists (full-time equivalent consultants) increased gradually over the study period from 0.67 per 1,000 population in 2009 to 0.76 per 1,000 population in 2014 and there was no deviation in this trend around the introduction of the HSCA [37].

In conclusion, we found no evidence that the introduction of GP-led commissioning in England was associated with a reduction in overall hospitalisations or specialist visits. In fact, there was an increase in specialist visits, which appears to have been paralleled by an increase in expenditure. This study begins to decipher the macro effects of these significant reforms to the organisation of NHS commissioning. However, many questions remain unanswered. Examples include the appropriateness of any change in rates of specialist visits and hospitalisations, the effect of this change on health outcomes, whether changes differed according to CCG and why, and the generalizability of our findings to other health systems. This study alone is unable to determine whether the HSCA can be regarded as a good or bad policy, and further research is needed to evaluate other important outcomes such as costs and quality of care. Nevertheless, in the context of similar findings from other large-scale health policy experiments [38], more effort may be needed to target specific costly or poorly evidenced practices (such as tonsillectomy, tympanostomy, or antibiotics prescribed for viral infections) rather than to count on broad, system-wide policy changes that often have unintended consequences.

Supporting Information

References

1. Ham C, Baird B, Gregory S, Jabbal J, Alderwick H. The NHS under the coalition government. Part one: NHS reform. The King's Fund, 2015.

2. Health and Social Care Act. Health and Social Care Act 2012 [Chapter 7]. Available from: http://www.legislation.gov.uk/ukpga/2012/7/pdfs/ukpga_20120007_en.pdf.

3. NHS Commissioning Board. Clinical commissioning group governing body members: role outlines, attributes and skills. Guidance July. 2012.

4. Department of Health. Health and Social Care Act 2012: fact sheets. https://www.gov.uk/government/publications/health-and-social-care-act-2012-fact-sheets: 2012.

5. Mannion R. General practitioner-led commissioning in the NHS: progress, prospects and pitfalls. British Medical Bulletin. 2011;97(1):7–15. doi: 10.1093/bmb/ldq042 21257619

6. Smith JA, Mays N. GP led commissioning: time for a cool appraisal. BMJ. 2012;344. doi: 10.1136/bmj.e980 22344307

7. National Audit Office. Managing conflicts of interest in NHS clinical commissioning groups. National Audit Office, 2015.

8. Shaw D, Melton P. Should GPs be paid to reduce unnecessary referrals? BMJ. 2015;351. doi: 10.1136/bmj.h6148 26578085

9. Kmietowicz Z. Commissioners defend policies on surgery referrals amid accusations of rationing. BMJ. 2014;349. doi: 10.1136/bmj.g4644 25034351

10. Iacobucci G. GPs put the squeeze on access to hospital care. BMJ. 2013;347. doi: 10.1136/bmj.f4432 23843502

11. NHS England. Quality Premium: 2014/15 guidance for CCGs. 2014.

12. Groenewegen PP, Hutten JBF. Workload and job satisfaction among general practitioners: A review of the literature. Social Science & Medicine. 1991;32(10):1111–9. http://dx.doi.org/10.1016/0277-9536(91)90087-S.

13. Camasso MJ, Camasso AE. Practitioner productivity and the product content of medical care in publicly supported health centers. Social Science & Medicine. 1994;38(5):733–48.

14. Naylor C, Curry N, Holder H, Ross S, Marshall L, Tait E. Clinical Commissioning Groups: Supporting Improvements in General Practice? The King's Fund and Nuffield Trust, 2013.

15. Timmins N. Never Again? The story of the Health and Social Care Act 2012: A study in coalition government and policy making. The King's Fund and the Institute for Government, 2012.

16. Health and Social Care Information Centre. Hospital Episode Statistics: Health and Social Care Information Centre; [14th March 2016]. Available from: http://www.hscic.gov.uk/hes.

17. NHS Scotland Information Services Division. SMR Datasets [14th March 2016]. Available from: http://www.ndc.scot.nhs.uk/Data-Dictionary/SMR-Datasets/.

18. Office for National Statistics. Population estimates [14th March 2016]. Available from: http://www.ons.gov.uk/peoplepopulationandcommunity/populationandmigration/populationestimates.

19. Health and Social Care Information Centre. HES 2010–11 Outpatient Data Quality Note: Health and Social Care Information Centre; 2011. Available from: http://www.hscic.gov.uk/catalogue/PUB03032/host-outp-acti-2010-11-qual.pdf.

20. Lopez Bernal J, Cummins S, Gasparrini A. Interrupted time series regression for the evaluation of public health interventions: a tutorial. Int J Epidemiol. 2016. Epub 2016/06/11. doi: 10.1093/ije/dyw098. 27283160.

21. NHS England. Avoiding unplanned admissions enhanced service: Guidance and audit requirements 2014 [05/07/2014]. Available from: http://www.nhsemployers.org/~/media/Employers/Publications/Avoiding%20unplanned%20admissions%20guidance%202014-15.pdf.

22. Health and Social Care Information Centre. Hospital Episode Statistics (HES) Analysis Guide 2015. Available from: http://content.digital.nhs.uk/media/1592/HES-analysis-guide/pdf/HES_Analysis_Guide_Jan_2014.pdf.

23. Vittal Katikireddi S, McKee M, Craig P, Stuckler D. The NHS reforms in England: four challenges to evaluating success and failure. Journal of the Royal Society of Medicine. 2014;107(10):387–92. doi: 10.1177/0141076814550358 25271273

24. Department of Health. Post-legislative assessment of the Health and Social Care Act 2012: Memorandum to the House of Commons Health Select Committee. In: Department of Health, editor. 2014.

25. Howie JG, Heaney DJ, Maxwell M. Evaluating care of patients reporting pain in fundholding practices. BMJ. 1994;309(6956):705–10. Epub 1994/09/17. 7950524; PubMed Central PMCID: PMC2540843.

26. Kammerling RM, Kinnear A. The extent of the two tier service for fundholders. BMJ. 1996;312(7043):1399–401. 8646099

27. Croxson B, Propper C, Perkins A. Do doctors respond to financial incentives? UK family doctors and the GP fundholder scheme. Journal of Public Economics. 2001;79(2):375–98. http://dx.doi.org/10.1016/S0047-2727(00)00074-8.

28. Dusheiko M, Gravelle H, Jacobs R, Smith P. The effect of financial incentives on gatekeeping doctors: Evidence from a natural experiment. Journal of Health Economics. 2006;25(3):449–78. doi: 10.1016/j.jhealeco.2005.08.001 16188338

29. Coulter A, Bradlow J. Effect of NHS reforms on general practitioners' referral patterns. BMJ. 1993;306(6875):433–7. 8461728

30. Fear CF, Cattell HR. Fund-holding general practices and old age psychiatry. The Psychiatrist. 1994;18(5):263–5. doi: 10.1192/pb.18.5.263

31. Gross R, Nirel N, Boussidan S, Zmora I, Elhayany A, Regev S. The influence of budget-holding on cost containment and work procedures in primary care clinics. Social Science & Medicine. 1996;43(2):173–86. http://dx.doi.org/10.1016/0277-9536(95)00359-2.

32. Elhayany A, Regev S, Sherf M, Reuveni H, Shvartzman P. Effects of a fundholding discontinuation. An Israeli health maintenance organization natural experiment. Scandinavian journal of primary health care. 2001;19(4):223–6. Epub 2002/02/02. 11822644.

33. Robertson R, Ross S, Bennett L, Holder H, Gosling J, Curry N. Risk or reward? The changing role of CCGs in general practice. King's Fund and Nuffield Trust, 2015.

34. Reid FDA, Cook DG, Majeed A. Explaining variation in hospital admission rates between general practices: cross sectional study. BMJ. 1999;319(7202):98–103. doi: 10.1136/bmj.319.7202.98 10398636

35. Wilson A, Childs S. The effect of interventions to alter the consultation length of family physicians: a systematic review. British Journal of General Practice. 2006;56(532):876–82. 17132356

36. NHS Digital. General and Personal Medical Services, England September 2015—March 2016. 2016.

37. NHS Digital. NHS Workforce Statistics—September 2016, Provisional statistics 2016 [cited 2017 24/07/2017]. Available from: http://www.content.digital.nhs.uk/catalogue/PUB22716.

38. Naci H, Soumerai SB. History, Bias, Study Design, and the Unfulfilled Promise of Pay-for-Performance Policies in Health Care. Preventing Chronic Disease. 2016;13. doi: 10.5888/pcd13.160133 27337559


Article published in the journal: PLOS Medicine, 2017, Issue 11
