Evidence for the Selective Reporting of Analyses and Discrepancies in Clinical Trials: A Systematic Review of Cohort Studies of Clinical Trials

Background:

Most publications about selective reporting in clinical trials have focussed on outcomes. However, selective reporting of analyses for a given outcome may also affect the validity of findings. If analyses are selected on the basis of the results, reporting bias may occur. The aims of this study were to review and summarise the evidence from empirical cohort studies that assessed discrepant or selective reporting of analyses in randomised controlled trials (RCTs).

Methods and Findings:

A systematic review was conducted and included cohort studies that assessed any aspect of the reporting of analyses of RCTs by comparing different trial documents, e.g., protocol compared to trial report, or different sections within a trial publication. The Cochrane Methodology Register, Medline (Ovid), PsycInfo (Ovid), and PubMed were searched on 5 February 2014. Two authors independently selected studies, performed data extraction, and assessed the methodological quality of the eligible studies. Twenty-two studies (containing 3,140 RCTs) published between 2000 and 2013 were included. Twenty-two studies reported on discrepancies between information given in different sources. Discrepancies were found in statistical analyses (eight studies), composite outcomes (one study), the handling of missing data (three studies), unadjusted versus adjusted analyses (three studies), handling of continuous data (three studies), and subgroup analyses (12 studies). Discrepancy rates varied, ranging from 7% (3/42) to 88% (7/8) in statistical analyses, 46% (36/79) to 82% (23/28) in adjusted versus unadjusted analyses, and 61% (11/18) to 100% (25/25) in subgroup analyses. This review is limited in that none of the included studies investigated the evidence for bias resulting from selective reporting of analyses. It was not possible to combine studies to provide overall summary estimates, and so the results of studies are discussed narratively.

Conclusions:

Discrepancies in analyses between publications and other study documentation were common, but reasons for these discrepancies were not discussed in the trial reports. To ensure transparency, protocols and statistical analysis plans need to be published, and investigators should adhere to these or explain discrepancies.

Please see later in the article for the Editors' Summary

Published in the journal: PLoS Med 11(6): e1001666. doi:10.1371/journal.pmed.1001666

Category: Research Article

doi: https://doi.org/10.1371/journal.pmed.1001666

Introduction

Selective reporting in clinical trial reports has been described mainly with respect to the reporting of a subset of the originally recorded outcome variables in the final trial report. Selective outcome reporting can create outcome reporting bias, if reporting is driven by the statistical significance or direction of the estimated effect (e.g., outcomes where the results are not statistically significant are suppressed or reported only as p>0.05) [1]. A recent review showed that statistically significant outcomes were more likely to be fully reported than non-significant outcomes (range of odds ratios: 2.2 to 4.7). In 40% to 62% of studies at least one primary outcome was changed, introduced, or omitted between the protocol and the trial publication [2]. Another review reached similar conclusions and also found that studies with significant results tended to be published earlier than studies with non-significant results [3].

Other types of selective reporting may also affect the validity of reported findings from clinical trials. Discrepancies can occur in analyses, if data for a given outcome are analysed and reported differently from the trial protocol or statistical analysis plan. For example, a trial's publication may report a per protocol analysis rather than a pre-planned intention-to-treat analysis, or report on an unadjusted analysis rather than a pre-specified adjusted analysis. In the latter example, discrepancies in analyses may also occur if adjustment covariates are used that are different to those originally planned. If analyses are selected for inclusion in a trial report based on the results being more favourable than those obtained by following the analysis plan, then analysis reporting bias occurs in a similar way to outcome reporting bias.

Examples of the various ways in which selective reporting can occur in randomised controlled trials (RCTs) have previously been described [4]. Furthermore, a systematic review of cohorts of RCTs comparing protocols or trial registry entries with corresponding publications found that discrepancies in methodological details, outcomes, and analyses were common [5]. However, no study to our knowledge has yet systematically reviewed the empirical evidence for the selective reporting of analyses in clinical trials or examined discrepancies with documents apart from the protocol or trial registry entry.

This study aimed to fill this gap by reviewing and summarising the evidence from empirical cohort studies that have assessed (1) selective reporting of analyses in RCTs and (2) discrepancies in analyses of RCTs between different sources (i.e., grant proposal, protocol, trial registry entry, information submitted to regulatory authorities, and the publication of the trial's findings), or between sections within a publication.

Methods

Study Inclusion Criteria

We included research that compared different sources of information when assessing any aspect of the analysis of outcome data in RCTs.

Cohorts containing RCTs alone, or a mixture of RCTs and non-RCTs were eligible. For those cohorts where it was not possible to identify the study type (i.e., to determine whether any RCTs were included), we sought clarification from the authors of the cohort study. Studies were excluded where inclusion of RCTs could not be confirmed, or where only non-RCTs had been included.

Search Strategy

The search was conducted without language restrictions. In May 2013, the Cochrane Methodology Register, Medline (Ovid), PsycInfo (Ovid), and PubMed were searched (Text S2). These searches were updated on 5 February 2014, except for the search of the Cochrane Methodology Register, which has been unchanged since July 2012. Cochrane Colloquium conference proceedings from 2011, 2012, and 2013 were hand-searched, noting that abstracts from previous Cochrane Colloquia had already been included in the Cochrane Methodology Register. A citation search of a key article [1] was also performed. The lead or contact authors of all identified studies and other experts in this area were asked to identify further studies.

Two authors independently applied the inclusion criteria to the studies identified. Any discrepancies between the authors were resolved through discussion, until consensus was reached.

Data Extraction

One author extracted details of the characteristics and results of the empirical cohort studies. Information on the main objectives of each empirical study was also extracted, and the studies were separated according to whether they related to selective reporting or discrepancies between sources. Selective reporting of analyses was defined as when the reported analyses had been selected from multiple analyses of the same data for a given outcome. A discrepancy was defined as when information was absent in one source but reported in another, or when the information given in two sources was contradictory. If selective reporting bias was studied, the definition of “significance” used in each cohort was noted (i.e., direction of results or whether the study used a particular p-value [e.g., p<0.05] to determine significance).

Data extraction was checked by another author. No masking was used, and disagreements were resolved through discussion.
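The definition of a discrepancy given above can be sketched as a small classification rule. This is only an illustration, not the procedure the review authors used: the function name `compare_sources`, the `Comparison` labels, the extra "not reported in either source" case, and the case-insensitive string comparison are all assumptions introduced here.

```python
from enum import Enum
from typing import Optional


class Comparison(Enum):
    CONSISTENT = "consistent"
    ABSENT = "discrepancy: information absent in one source"
    CONTRADICTION = "discrepancy: sources contradict each other"
    # Assumed extra case, not part of the review's definition.
    NOT_REPORTED = "not reported in either source"


def compare_sources(source_a: Optional[str], source_b: Optional[str]) -> Comparison:
    """Classify one analysis detail as stated in two trial documents.

    None means the document says nothing about the detail.
    """
    if source_a is None and source_b is None:
        return Comparison.NOT_REPORTED
    if source_a is None or source_b is None:
        return Comparison.ABSENT
    # Hypothetical normalisation: ignore case and surrounding whitespace only.
    if source_a.strip().lower() == source_b.strip().lower():
        return Comparison.CONSISTENT
    return Comparison.CONTRADICTION


# Protocol specifies the analysis population, publication is silent.
print(compare_sources("intention to treat", None).name)
# Protocol and publication state different analysis populations.
print(compare_sources("intention to treat", "per protocol").name)
```

In practice, judging whether two descriptions of an analysis agree requires a human reader; the string comparison here only stands in for that judgement.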

Methodological Quality

In the absence of a recognised tool to evaluate the methodological quality of the empirical studies eligible for this review, we developed and used three criteria to assess methodological quality:

- Independent data extraction by at least two people
  - High quality: data extraction completed independently by at least two people.
  - Methodological concerns: data extraction not completed independently by at least two people.
  - Uncertain quality: not stated.

- Definition of positive and negative findings
  - High quality: clearly defined.
  - Methodological concerns: not clearly defined.
  - Not applicable: this was not included in the objectives of the study.

- Risk of selective reporting bias in the empirical study
  - High quality: all comparisons and outcomes mentioned in the methods section of the empirical study report are fully reported in the results section of the publication.
  - Methodological concerns: not all comparisons and outcomes mentioned in the methods section of the empirical study report are fully reported in the results section of the publication.

Two authors independently assessed these items for all studies. An independent assessor (Matthew Page) was invited to assess one study [6] because the first author was directly involved in its design. Any discrepancies were resolved through a consensus discussion with a third reviewer not involved with the included studies.
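The three criteria can be written down as simple decision rules, which may help when applying them consistently across studies. The sketch below is an assumption-laden illustration: the function names and the boolean inputs are hypothetical, and the actual assessments were judgements made by two authors, not computed.

```python
from typing import Dict, Optional

HIGH = "High quality"
CONCERNS = "Methodological concerns"
UNCERTAIN = "Uncertain quality"
NOT_APPLICABLE = "Not applicable"


def rate_data_extraction(independent_by_two: Optional[bool]) -> str:
    """None means the study report does not state how data were extracted."""
    if independent_by_two is None:
        return UNCERTAIN
    return HIGH if independent_by_two else CONCERNS


def rate_findings_definition(in_objectives: bool, clearly_defined: bool) -> str:
    """Only rated when positive/negative findings are part of the study objectives."""
    if not in_objectives:
        return NOT_APPLICABLE
    return HIGH if clearly_defined else CONCERNS


def rate_selective_reporting(all_fully_reported: bool) -> str:
    """True when every comparison and outcome in the methods appears in the results."""
    return HIGH if all_fully_reported else CONCERNS


def assess(independent_by_two: Optional[bool], in_objectives: bool,
           clearly_defined: bool, all_fully_reported: bool) -> Dict[str, str]:
    """Collect the three ratings for one empirical cohort study."""
    return {
        "data extraction": rate_data_extraction(independent_by_two),
        "definition of findings": rate_findings_definition(in_objectives, clearly_defined),
        "selective reporting": rate_selective_reporting(all_fully_reported),
    }


# A hypothetical study: extraction method not stated, findings not an objective.
print(assess(None, False, False, True))
```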

Data Analysis

This review provides a descriptive summary of the included empirical cohort studies. We refrained from any statistical combination of the results from the different cohorts because of the differences in their design.

Results

Search Results

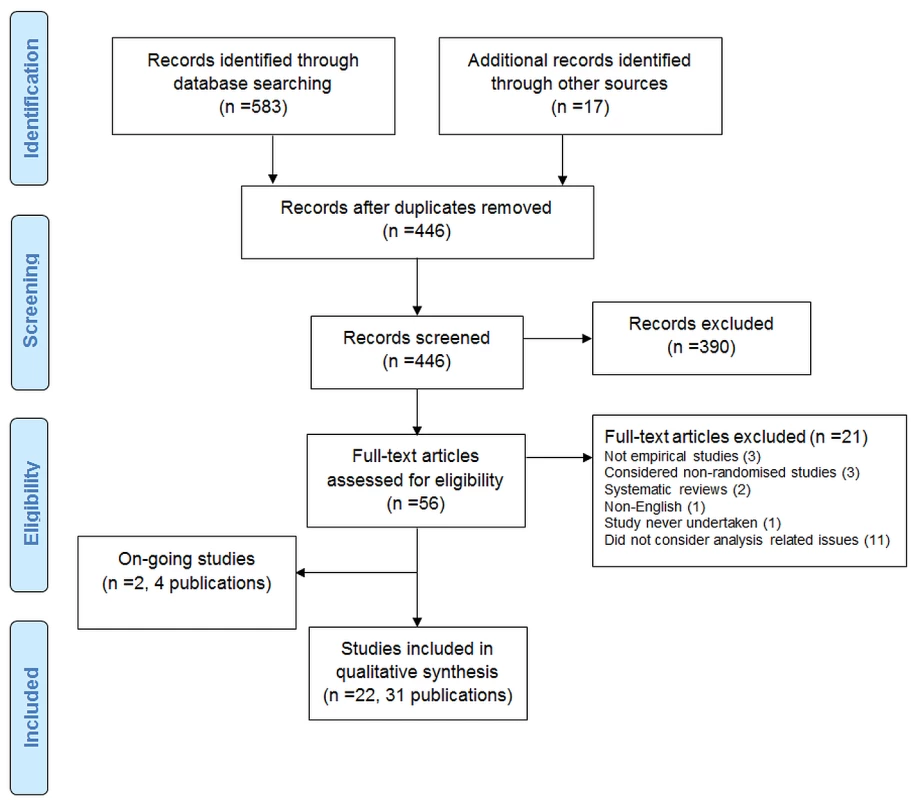

The search strategy identified 600 records. After duplicates were removed, 446 records were screened, and 390 were excluded. Full texts were accessed for 56 articles.

The PRISMA flow diagram is shown in Figure 1.

Excluded Studies

Twenty-one articles were excluded after assessment of their full text: two were reviews; one was not in English; one was reported in abstract form only and the study was never undertaken; three were not empirical studies; three included only non-RCTs; and 11 did not consider analysis-related issues.

Two ongoing studies (four publications) were also identified [7]–[10]. One study [7],[9],[10] included 894 protocols (and 520 related journal publications) approved by six research ethics committees from 2000 to 2003 in Switzerland, Germany, and Canada. The aim of the study was to determine the agreement between planning of subgroups, interim analyses, and stopping rules and their reporting in subsequent publications. A conference abstract was identified in which the authors assessed RCTs submitted to the European Medicines Agency for marketing approval and assessed selective reporting of analyses [8]. The authors were contacted for further details.

Included Studies

Twenty-two studies (in 31 publications) containing a total of 3,140 RCTs were included [6],[11]–[31].

All 22 included studies investigated discrepancies, and although several of these studies considered the statistical significance of results, none investigated selective reporting bias.
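The counts reported in the search results, exclusions, and inclusions can be checked for internal consistency with a few lines of arithmetic. The sketch below uses only numbers stated in the text; it assumes that the four publications from ongoing studies were among the 56 full texts, and the number of duplicates removed is derived rather than stated.

```python
# Counts taken from the text of the Results section.
identified = 600
screened = 446                 # records left after duplicates were removed
excluded_at_screening = 390
full_text = screened - excluded_at_screening

# Reasons for exclusion after full-text assessment.
full_text_exclusions = {
    "reviews": 2,
    "not in English": 1,
    "abstract only, study never undertaken": 1,
    "not empirical studies": 3,
    "non-RCTs only": 3,
    "no analysis-related issues": 11,
}
excluded_full_text = sum(full_text_exclusions.values())

# Assumption: the four publications from ongoing studies were among the full texts.
ongoing_publications = 4
included_publications = full_text - excluded_full_text - ongoing_publications

duplicates_removed = identified - screened  # derived, not stated in the text

print(f"full texts assessed: {full_text}")
print(f"excluded after full-text assessment: {excluded_full_text}")
print(f"publications included: {included_publications}")
print(f"duplicates removed (derived): {duplicates_removed}")
```

Under that assumption the flow reconciles with the 56 full texts, 21 exclusions, and 31 included publications reported above.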

Study Characteristics

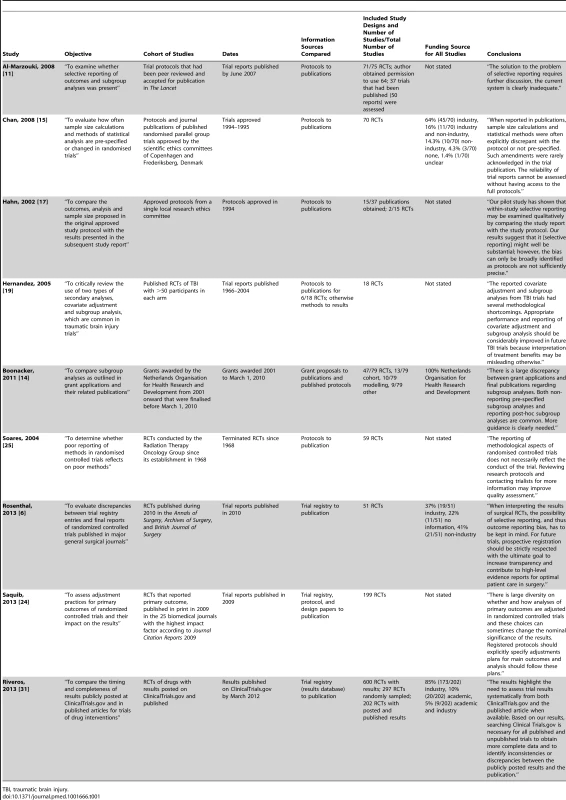

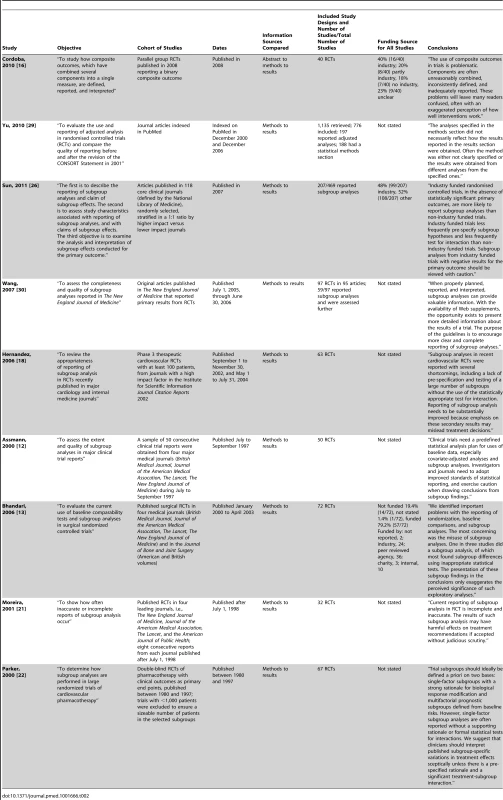

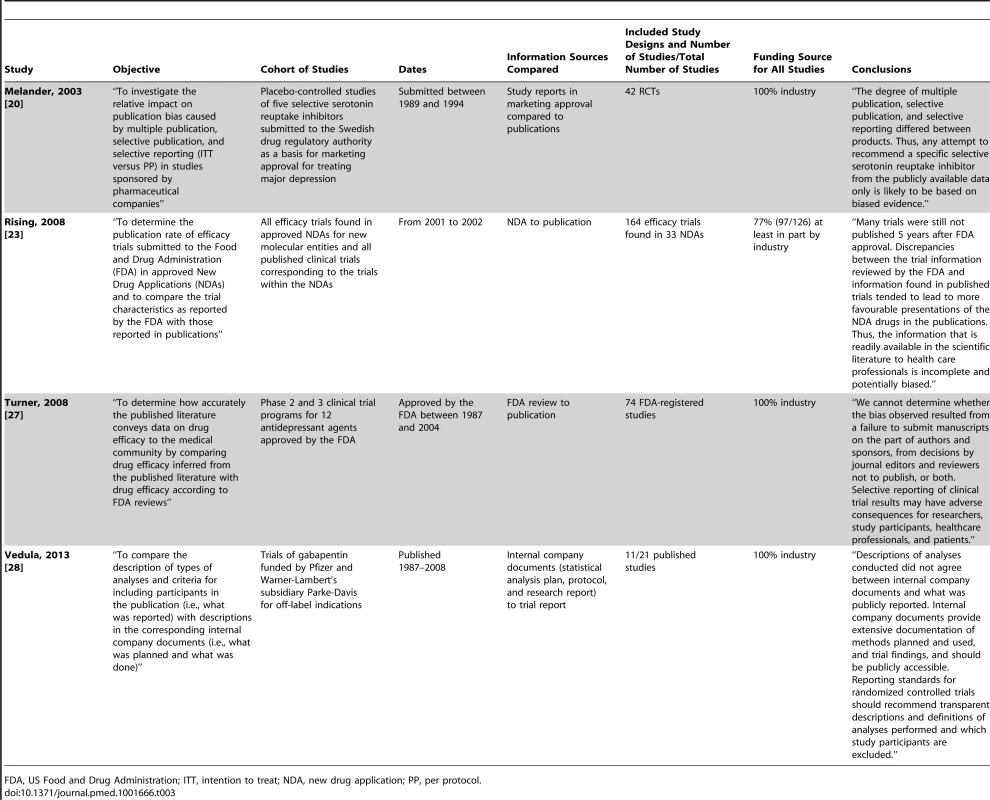

Study characteristics are presented in Tables 1–3.

Tab. 1. Characteristics of included studies comparing protocols or trial registry entries to full publications.

TBI, traumatic brain injury.

Tab. 2. Characteristics of included studies comparing information within a trial report.

Tab. 3. Characteristics of included studies comparing other documentation to full publications.

FDA, US Food and Drug Administration; ITT, intention to treat; NDA, new drug application; PP, per protocol.

Included cohort studies were published between 2000 and 2013: two compared marketing approval or new drug applications to publications; one compared US Food and Drug Administration reviews to publications; one compared internal company documents to publications; five compared protocols to publications; two compared trial registry entries to publications; one compared grant proposals and protocols to publications; one compared trial registry entries/protocols/design papers to publications; and nine compared information within the trial report (i.e., between sections such as the abstract, methods, and results). Of the cohort studies, 91% (20/22) included only RCTs, with a median of 61 RCTs per cohort study (interquartile range: 41 to 91; range: 2 to 776).

Included RCTs were published between 1966 and 2012. The source of funding of RCTs was not considered in 11 of the cohort studies. In ten studies, industry funded a median of 70.5% of the RCTs (interquartile range: 46% to 100%). In one study, all of the RCTs were funded by the Netherlands Organisation for Health Research and Development.

Methodological Quality

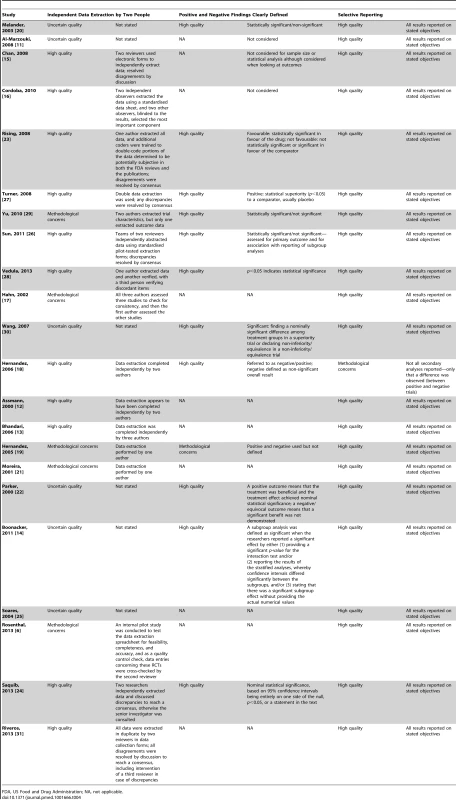

Details of the methodological quality of the included studies are presented in Table 4.

Tab. 4. Methodological quality.

FDA, US Food and Drug Administration; NA, not applicable.

Independent data extraction by at least two people

We had methodological concerns about five studies because data extraction was completed by only one person or only a sample was checked by a second author. Eleven studies were high quality, as data extraction was completed by at least two people. Six studies were rated as uncertain quality, as information regarding data extraction was not provided.

Definition of positive and negative findings

We had methodological concerns about one study because positive and negative findings were not defined, while 11 studies were of high quality, with clear definitions of positive and negative findings. Defining a positive or negative finding was not a study objective in ten studies.

Risk of selective reporting bias in the empirical study

One study [18] generated methodological concerns because some secondary analyses were not reported, and the study report stated only that no difference was observed between positive and negative trials. The remaining 21 studies were of high quality, with all comparisons and outcomes stated in the study methods reported in full. We did not have access to any protocols for the empirical studies in order to make a more comprehensive assessment of how each study performed for this methodological quality item.

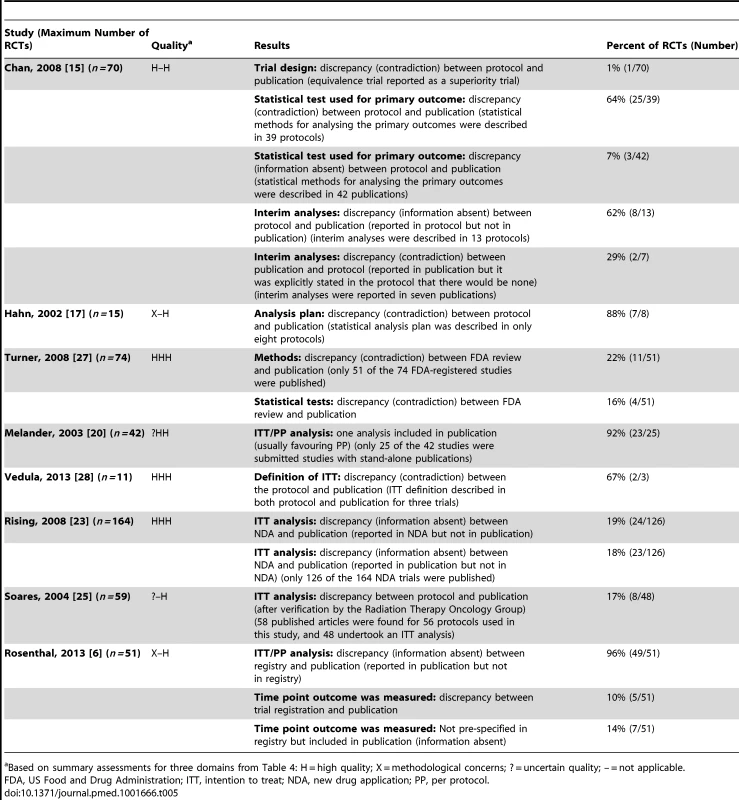

Statistical Analyses

Eight studies investigated discrepancies in statistical analyses [6],[15],[17],[20],[23],[25],[27],[28]. Table 5 summarises the results of these studies. Three studies reported discrepancies in the analysis methods between documents: discrepancy rates ranged from 7% (3/42) of their included studies to 88% (7/8) [15],[17],[27]. Five studies considered whether an intention-to-treat analysis or per protocol analysis was specified or reported [6],[20],[23],[25],[28]. Three of these studies found discrepancies between different documents (due to the absence of information) in 17% (8/48) to 96% (49/51) of included RCTs [6],[23],[25], and another study [28] found discrepancies (due to a contradiction) between protocols and publications in 67% (2/3) of RCTs. Melander et al. found that only one analysis was reported in 92% (23/25) of RCTs, usually favouring the per protocol analysis [20]. One study [15] found that an equivalence RCT was reported as a superiority RCT, and that there were discrepancies (information absent) in interim analyses in 62% (8/13) of RCTs. Rosenthal and Dwan found discrepancies (contradictions and information absent) between trial registry entries and publications in the reporting of outcomes at different time points [6].

Tab. 5. Statistical analyses.

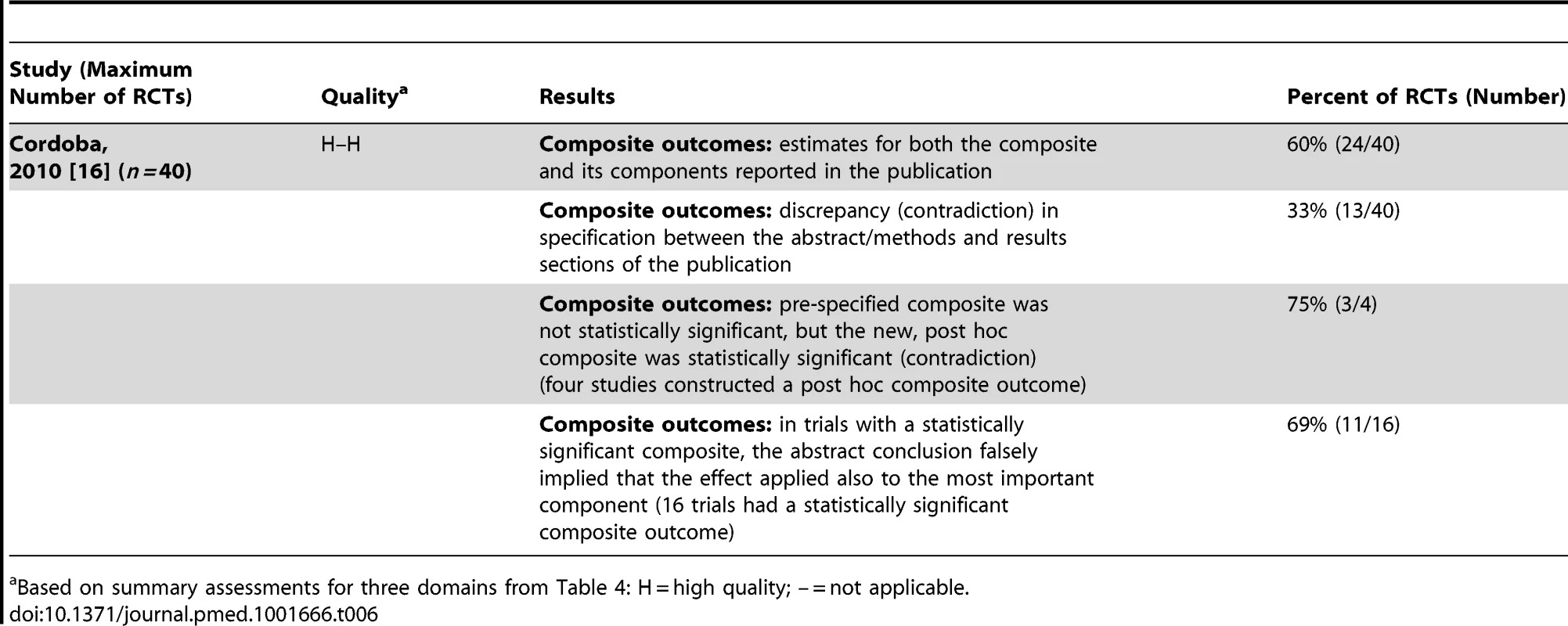

Based on summary assessments for three domains from Table 4: H = high quality; X = methodological concerns; ? = uncertain quality; – = not applicable.

Composite Outcomes

One study (Table 6) investigated discrepancies in composite outcomes [16] and found changes in the specification of the composite outcome between abstracts, methods, and results sections in 33% (13/40) of RCTs. In 69% (11/16) of RCTs with a statistically significant composite outcome, the abstract's conclusion falsely implied that the effect was also seen for the most important component. This reporting strategy of highlighting that the experimental treatment is beneficial despite a statistically non-significant difference for the primary outcome is one form of “spin” [32].

Tab. 6. Composite outcomes.

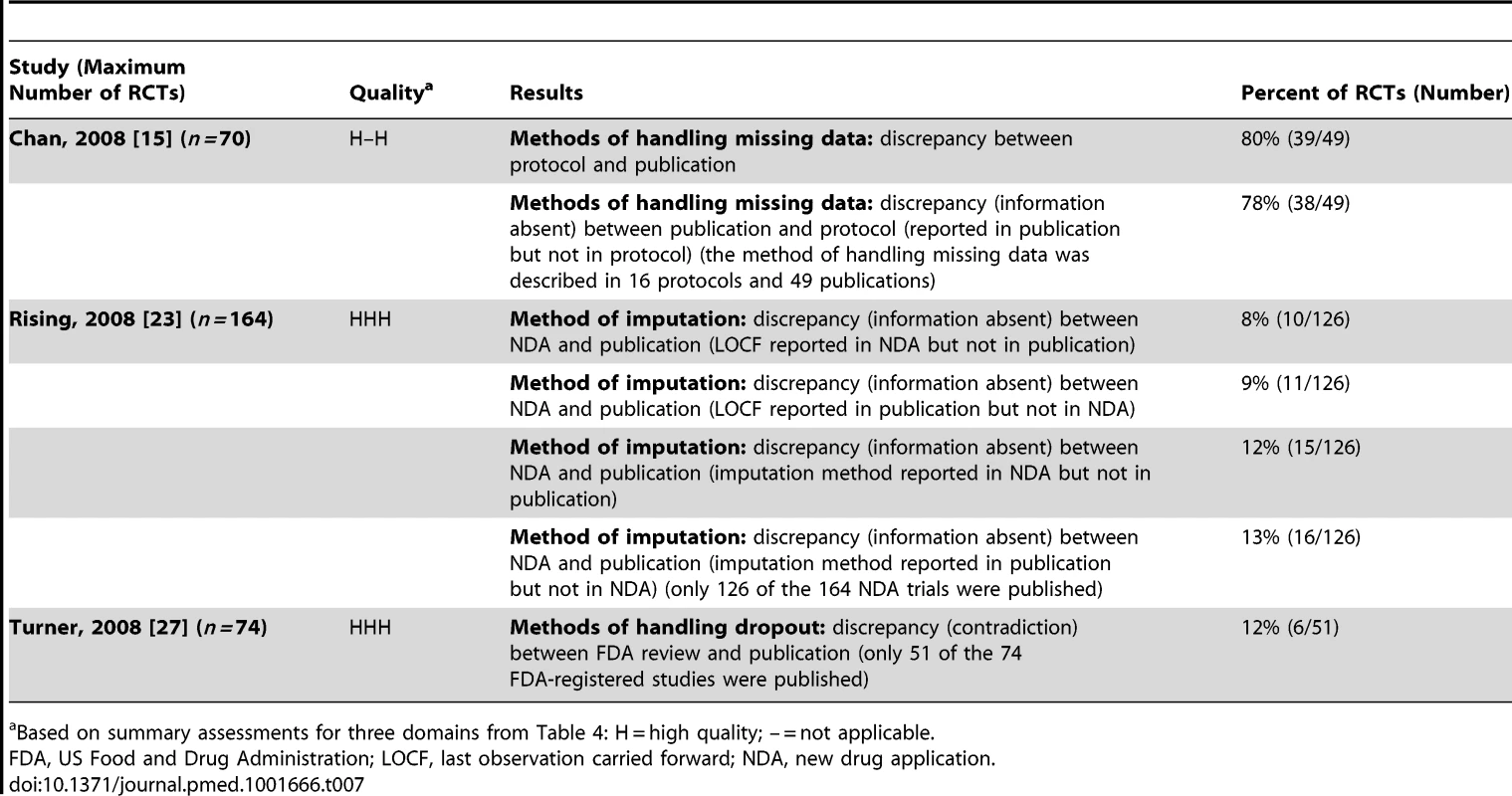

Based on summary assessments for three domains from Table 4: H = high quality; – = not applicable.

Handling Missing Data

Three studies investigated discrepancies in the handling of missing data [15],[23],[27] (Table 7). One study found that methods of handling missing data differed between documents in 12% (6/51) of RCTs [27]. Chan et al. found discrepancies in methods between protocols and publications in 80% (39/49) of RCTs, and also that in 78% (38/49) of RCTs that reported methods in the publication, these methods were not pre-specified [15]. Rising et al. [23] found that some studies reported the method in the new drug application but not in the trial publication and vice versa.

Tab. 7. Handling missing data.

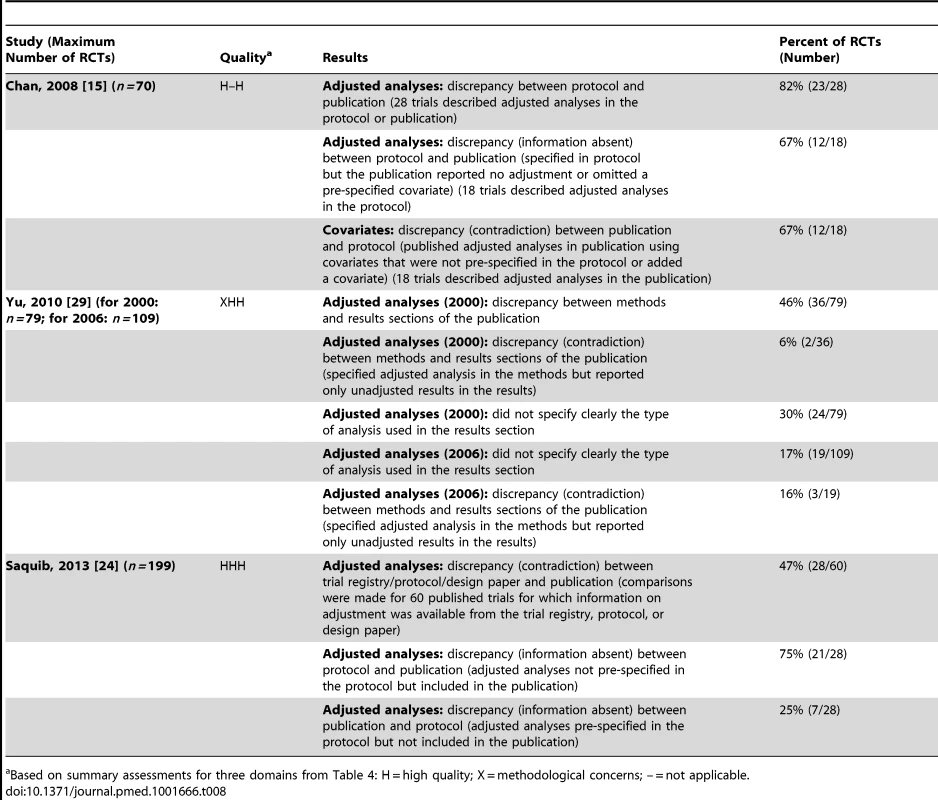

Based on summary assessments for three domains from Table 4: H = high quality; – = not applicable.

Unadjusted versus Adjusted Analyses

Three studies [15],[24],[29] found discrepancies in unadjusted versus adjusted analyses in 46% (36/79) to 82% (23/28) of RCTs (Table 8).

Tab. 8. Unadjusted versus adjusted analyses.

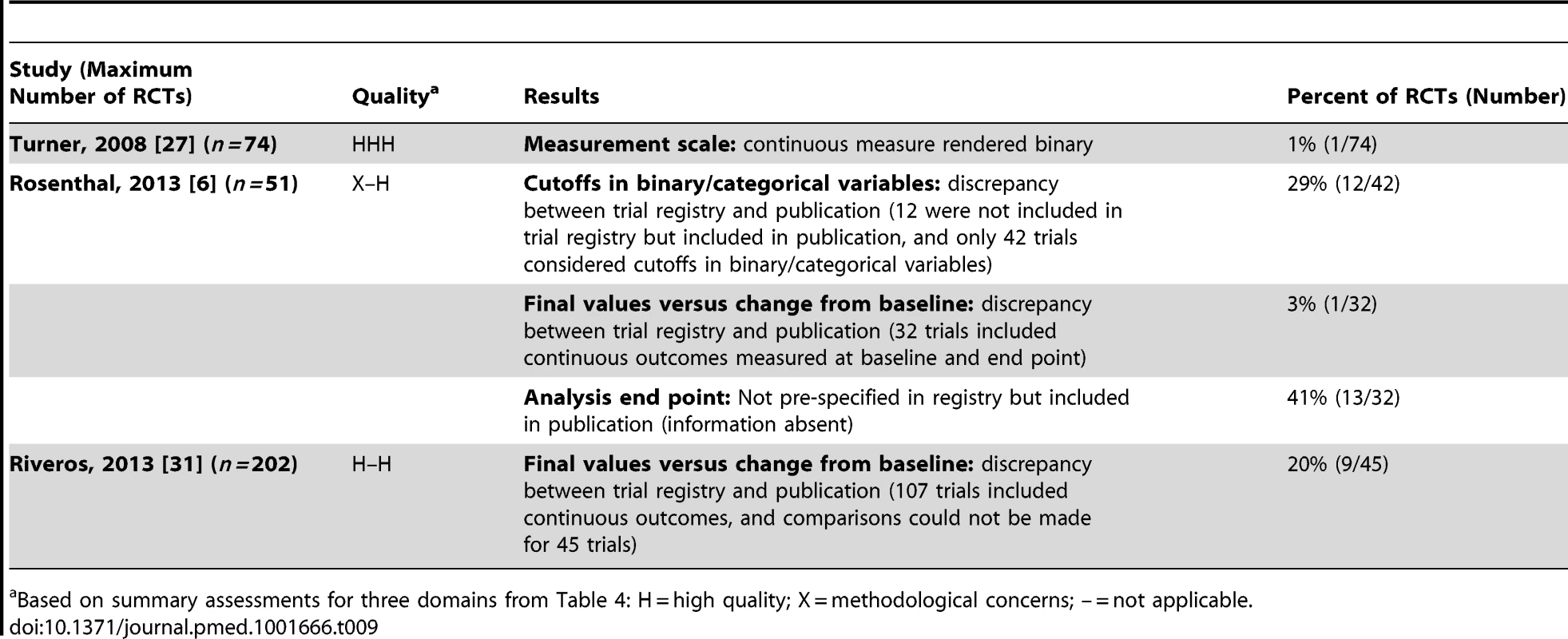

Based on summary assessments for three domains from Table 4: H = high quality; X = methodological concerns; – = not applicable.

Continuous/Binary Data

Two studies investigated discrepancies in the use of continuous and binary versions of the same underlying data [6],[27] (Table 9). Turner et al. [27] found a continuous measure rendered binary in 1% (1/74) of RCTs, and Rosenthal and Dwan [6] found discrepancies between trial registry entries and publications in 29% (12/42) of RCTs. A third study, Riveros et al. [31], found that in 20% (9/45) of RCTs there were discrepancies due to different types of analysis (i.e., change from baseline versus final-value mean) between results posted in trial registry entries at ClinicalTrials.gov and in the corresponding publications. Rosenthal and Dwan also found discrepancies (contradictions and information absent) for final values versus change from baseline [6].

Tab. 9. Continuous/binary data.

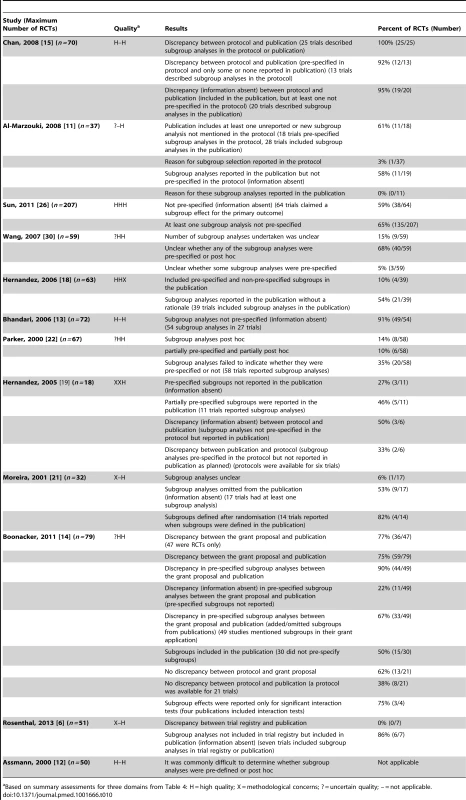

Based on summary assessments for three domains from Table 4: H = high quality; X = methodological concerns; – = not applicable.

Subgroup Analyses

Twelve studies investigated discrepancies in subgroup analyses [6],[11]–[15],[18],[19],[21],[22],[26],[30] (Table 10). The majority considered whether subgroup analyses were pre-specified or post hoc. Assmann et al. found that it was commonly difficult to distinguish between these different timings for the choice of subgroup analyses [12]. Four studies considered discrepancies. In three of them, the discrepancy rate ranged from 61% (11/18) to 100% (25/25) of RCTs [11],[14],[15]. The fourth study found subgroup analyses reported in only seven RCTs, and no details had been included in the trial registry entries for six of these RCTs, while the seventh had no discrepancies [6]. In seven studies [11],[13],[18],[21],[22],[26],[30], where the comparison was mostly made between the methods and results sections of the trial publication, subgroup analyses were found to be not pre-specified (range: 14% [8/58] to 91% [49/54] of RCTs), pre-specified but not reported in the publication (range: 27% [3/11] to 53% [9/17]), or a mixture of pre-specified and non-pre-specified analyses (range: 10% [6/58] to 65% [135/207]).

Tab. 10. Subgroup analyses.

Based on summary assessments for three domains from Table 4: H = high quality; X = methodological concerns; ? = uncertain quality; – = not applicable.

Funding

Although 11 studies looked at funding as a study characteristic, only two considered the relationship between discrepancies and funding. Sun et al. found that trials funded by industry were more likely to report subgroup results when the primary outcome was not statistically significant compared to when the primary outcome was statistically significant (odds ratio 3.00 [95% CI: 1.56 to 5.76], p = 0.001) [26].

Rosenthal and Dwan found no statistically significant differences in discrepancy rates of primary and secondary outcomes between registry entries and final reports of industry-sponsored versus non–industry-sponsored trials [6].
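As a reading aid, the odds ratio, confidence interval, and p-value quoted from Sun et al. [26] can be checked against one another. The sketch below assumes a Wald-type 95% interval that is symmetric on the log odds scale; the source does not say how the interval was computed, so this is a consistency check under that assumption, not a reconstruction of the original analysis.

```python
import math

# Values as quoted in the text from Sun et al. [26].
odds_ratio, ci_low, ci_high = 3.00, 1.56, 5.76

# Assumed Wald interval: log(OR) +/- 1.96 * SE, so the width gives the SE.
se_log_or = (math.log(ci_high) - math.log(ci_low)) / (2 * 1.96)

# Two-sided p-value for the null hypothesis OR = 1 under a normal approximation.
z = math.log(odds_ratio) / se_log_or
p_two_sided = math.erfc(abs(z) / math.sqrt(2))

# On the log scale the point estimate should sit midway between the limits.
geometric_midpoint = math.sqrt(ci_low * ci_high)

print(f"SE(log OR) = {se_log_or:.3f}, z = {z:.2f}, p = {p_two_sided:.4f}")
print(f"geometric midpoint of CI = {geometric_midpoint:.2f}")
```

Under these assumptions the p-value rounds to 0.001 and the geometric midpoint of the interval rounds to 3.00, so the three reported quantities are mutually consistent.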

Discussion

Summary of Main Results

Twenty-two cohort studies of RCTs were included in this review that examined discrepancies between information given in different sources. Many different types of discrepancies in analyses between documents were found, and discrepancy rates ranged from 7% (3/42) to 88% (7/8) for analysis methods, 46% (36/79) to 82% (23/28) for the presentation of adjusted versus unadjusted analyses, and 61% (11/18) to 100% (25/25) for subgroup analyses.
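The percentages in this summary follow directly from the counts in parentheses. A minimal sketch that recomputes them, using only the numerators and denominators quoted above (the dictionary layout and the half-point tolerance are choices made for this illustration):

```python
# (numerator, denominator, percentage as stated in the text)
reported = {
    "analysis methods": [(3, 42, 7), (7, 8, 88)],
    "adjusted versus unadjusted analyses": [(36, 79, 46), (23, 28, 82)],
    "subgroup analyses": [(11, 18, 61), (25, 25, 100)],
}

recomputed = {}
for category, bounds in reported.items():
    rates = []
    for numerator, denominator, stated in bounds:
        pct = 100 * numerator / denominator
        # A stated whole-number percentage should be within half a point.
        if abs(pct - stated) > 0.5:
            raise ValueError(f"{category}: {numerator}/{denominator} is not {stated}%")
        rates.append(pct)
    recomputed[category] = rates
    print(f"{category}: {rates[0]:.1f}% to {rates[1]:.1f}%")
```

Several of the denominators are small (for example 7/8), so the extremes of each range rest on only a handful of trials.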

None of the included studies examined the selective reporting bias of analyses in RCTs that would arise if analyses were reported or concealed because of the results. Such an assessment may prove to be difficult without access to statistical analysis plans and trial datasets to determine the statistical significance of the results for the analyses that were planned and for those that were reported.

The majority of studies (12) focussed on the reporting of subgroup analyses. None of the included studies provided any detail on the reasons for inconsistencies. A number of studies commented on whether or not reported subgroup analyses were pre-specified. While this may not be seen as a comparison, we reported the findings for these studies within this review because post hoc decisions about which subgroups to analyse and report may be influenced by the findings of those or related analyses. There are likely to be many other selective inclusion and reporting mechanisms for which there is no current empirical evidence, and a more complete categorisation is provided elsewhere [4]. The methodological concerns observed in the included studies were not critical and should not materially affect the interpretation of the studies' results.

These discrepancies may be due to reporting bias, errors, or legitimate departures from a pre-specified protocol. Reliance on the source documentation to distinguish between these reasons may be inadequate, and contact with trial authors may be necessary. Only one study [29] contacted the original authors of the RCTs for information about the discrepancies, but only 9% (3/34) of those authors replied, and no details were given on the reasons for the discrepancies. In terms of selective reporting of outcomes, a previous study interviewed a cohort of trialists about outcomes that were specified in trial protocols but not fully reported in subsequent publications [33]. In nearly a quarter of trials (24%, 4/17) in which pre-specified outcomes had been measured but not analysed, the “direction” of the main findings influenced the investigators' decision not to analyse the remaining data collected.

Agreements and Disagreements with Other Studies or Reviews

Three of the included studies [11],[17],[25] were included in a previous Cochrane methodology review that was restricted to studies that compared any aspect of trial protocols or trial registry entries to publications [5]. The conclusions of the current review and the previous review are similar in that discrepancies were common, and reasons for them were rarely reported in the original RCTs. This current review focussed on analyses only, and included studies that compared different pieces of trial documentation or details within a trial publication.

Implications for RCTs

In accordance with the International Conference on Harmonisation of Technical Requirements for Registration of Pharmaceuticals for Human Use E9 guidance (Statistical Principles for Clinical Trials [34]), procedures for executing the statistical analysis of the primary, secondary, and other data should be finalised before breaking the blind on those analyses. The availability of a trial protocol (or separate statistical analysis plan) is of prime importance for inferring whether the results presented are a selected subset of the analyses that were actually done and whether there are legitimate reasons for departures from a pre-specified protocol. Many leading medical journals, e.g., PLOS Medicine (http://www.plosmedicine.org/static/guidelines.action) and the British Medical Journal (http://www.bmj.com/about-bmj/resources-authors/article-submission/article-requirements), now require the submission of a trial protocol alongside the report of the RCT for comparison during the peer review process. In order to ensure transparency, protocols and any separate analysis plans for all trials need to be made publicly available, along with the date that the statistical analysis plan was finalised, details of reasons for any changes, and the dates of those changes. Additional analyses suggested by peer reviewers when a manuscript is submitted for publication should be judged on their own merits, and any additional analyses that are included in the final paper should be labelled as such.

Whilst evidence-based guidelines exist for researchers to develop high-quality protocols for clinical trials (e.g., SPIRIT [35]) and for reporting trial findings (CONSORT [36]), more guidance is needed for writing statistical analysis plans.

Implications for Systematic Reviews and Evaluations of Healthcare

Systematic reviewers need to ensure they access all possible trial documentation, whether it is publicly available or obtained from the trialists, in order to assess the potential for selective reporting bias for analyses. The Cochrane risk of bias tool is currently being updated, and the revised version will acknowledge the possibility of selective analysis reporting in addition to selective outcome reporting. Selective analysis reporting generally leads to a reported result that may be biased, so sits more naturally alongside other aspects of bias assessment of trials, such as randomisation methods, use of blinding, and patient exclusions. Selective outcome reporting may lead either to bias in a reported result (e.g., if a particular measurement scale is selected from among several) or to non-availability of any data for a particular outcome (e.g., if no measures for an outcome are reported). The latter sits more naturally alongside consideration of “publication bias” (suppression of all information about a trial).

Conclusions

There are to date no readily accessible data on selective reporting bias of analyses in cohorts of RCTs. Studies that have compared source documentation from before the start of a trial with the final trial publication have found a number of discrepancies in the way that analyses were planned and conducted. From the published literature, there is insufficient information to distinguish between bias, error, and legitimate changes. Reliance on the source documentation to distinguish between these may be inadequate, and contact with trial authors may be necessary. If journals insisted that authors provide protocols and analysis plans, selective reporting would be more easily detectable, and possibly reduced. Journals could flag research articles that provide no protocol or statistical analysis plans. More guidance is needed on how a detailed statistical analysis plan should be written.

Supporting Information

References

1. Hutton JL, Williamson PR (2000) Bias in meta-analysis due to outcome variable selection within studies. Appl Stat 49: 359–370.

2. Dwan K, Gamble C, Williamson PR, Kirkham JJ, Reporting Bias Group (2013) Systematic review of the empirical evidence of study publication bias and outcome reporting bias - an updated review. PLoS ONE 8: e66844.

3. Song F, Parekh S, Hooper L, Loke YK, Ryder J, et al. (2010) Dissemination and publication of research findings: an updated review of related biases. Health Technol Assess 14: 1–193.

4. Page MJ, McKenzie JE, Forbes A (2013) Many scenarios exist for selective inclusion and reporting of results in randomized trials and systematic reviews. J Clin Epidemiol 66: 524–537.

5. Dwan K, Altman DG, Cresswell L, Blundell M, Gamble CL, et al. (2011) Comparison of protocols and registry entries to published reports for randomised controlled trials. Cochrane Database Syst Rev 2011: MR000031.

6. Rosenthal R, Dwan K (2013) Comparison of randomized controlled trial registry entries and content of reports in surgery journals. Ann Surg 257: 1007–1015.

7. Kasenda B, Sun X, von Elm E (2013) Planning and reporting of subgroup analyses in randomized trials—between confidence and delusion [abstract]. Cochrane Colloquium 2013; 19–23 September 2013; Quebec, Canada.

8. Maund E, Tendal B, Hróbjartsson A, Jørgensen KJ, Lundh A, et al. (2013) Publication bias in randomised trials of duloxetine for the treatment of major depressive disorder [abstract]. Cochrane Colloquium 2013; 19–23 September 2013; Quebec, Canada.

9. Stegert M, Kasenda B, von Elm E, Briel M (2013) Reporting of interim analyses, stopping rules, and Data Safety and Monitoring Boards in protocols and publications of discontinued RCTs [abstract]. Cochrane Colloquium 2013; 19–23 September 2013; Quebec, Canada.

10. Kasenda B, von Elm EB, You J, Blumle A, Tomonaga Y, et al. (2012) Learning from failure—rationale and design for a study about discontinuation of randomized trials (DISCO study). BMC Med Res Methodol 12: 131.

11. Al-Marzouki S, Roberts I, Evans S, Marshall T (2008) Selective reporting in clinical trials: analysis of trial protocols accepted by The Lancet. Lancet 372: 201.

12. Assmann SF, Pocock SJ, Enos LE, Kasten LE (2000) Subgroup analysis and other (mis)uses of baseline data in clinical trials. Lancet 355: 1064–1069.

13. Bhandari M, Devereaux PJ, Li P, Mah D, Lim K, et al. (2006) Misuse of baseline comparison tests and subgroup analyses in surgical trials. Clin Orthop Relat Res 447: 247–251.

14. Boonacker CW, Hoes AW, van Liere-Visser K, Schilder AG, Rovers MM (2011) A comparison of subgroup analyses in grant applications and publications. Am J Epidemiol 174: 219–225.

15. Chan AW, Hrobjartsson A, Jorgensen KJ, Gotzsche PC, Altman DG (2008) Discrepancies in sample size calculations and data analyses reported in randomised trials: comparison of publications with protocols. BMJ 337: a2299.

16. Cordoba G, Schwartz L, Woloshin S, Bae H, Gotzsche PC (2010) Definition, reporting, and interpretation of composite outcomes in clinical trials: systematic review. BMJ 341: c3920.

17. Hahn S, Williamson PR, Hutton JL (2002) Investigation of within-study selective reporting in clinical research: follow-up of applications submitted to a local research ethics committee. J Eval Clin Pract 8: 353–359.

18. Hernandez AV, Boersma E, Murray GD, Habbema JD, Steyerberg EW (2006) Subgroup analyses in therapeutic cardiovascular clinical trials: are most of them misleading? Am Heart J 151: 257–264.

19. Hernandez AV, Steyerberg EW, Taylor GS, Marmarou A, Habbema JD, et al. (2005) Subgroup analysis and covariate adjustment in randomized clinical trials of traumatic brain injury: a systematic review. Neurosurgery 57: 1244–1253.

20. Melander H, Ahlqvist-Rastad J, Meijer G, Beermann B (2003) Evidence b(i)ased medicine—selective reporting from studies sponsored by pharmaceutical industry: review of studies in new drug applications. BMJ 326: 1171–1173.

21. MoreiraED, SteinZ, SusserE (2001) Reporting on methods of subgroup analysis in clinical trials: a survey of four scientific journals. Braz J Med Biol Res 34 : 1441–1446.

22. ParkerAB, NaylorCD (2000) Subgroups, treatment effects, and baseline risks: some lessons from major cardiovascular trials. Am Heart J 139 : 952–961.

23. RisingK, BacchettiP, BeroL (2008) Reporting bias in drug trials submitted to the Food and Drug Administration: review of publication and presentation. PLoS Med 5: e217.

24. SaquibN, SaquibJ, IoannidisJP (2013) Practices and impact of primary outcome adjustment in randomized controlled trials: meta-epidemiologic study. BMJ 347: f4313.

25. SoaresHP, DanielsS, KumarA, ClarkeM, ScottC, et al. (2004) Bad reporting does not mean bad methods for randomised trials: observational study of randomised controlled trials performed by the Radiation Therapy Oncology Group. BMJ 328 : 22–24.

26. SunX, BrielM, BusseJW, YouJJ, AklEA, et al. (2011) The influence of study characteristics on reporting of subgroup analyses in randomised controlled trials: systematic review. BMJ 342: d1569.

27. TurnerEH, MatthewsAM, LinardatosE, TellRA, RosenthalR (2008) Selective publication of antidepressant trials and its influence on apparent efficacy. N Engl J Med 358 : 252–260.

28. VedulaSS, LiT, DickersinK (2013) Differences in reporting of analyses in internal company documents versus published trial reports: comparisons in industry-sponsored trials in off-label uses of gabapentin. PLoS Med 10: e1001378.

29. YuLM, ChanAW, HopewellS, DeeksJJ, AltmanDG (2010) Reporting on covariate adjustment in randomised controlled trials before and after revision of the 2001 CONSORT statement: a literature review. Trials 11 : 59.

30. WangR, LagakosSW, WareJH, HunterDJ, DrazenJM (2007) Statistics in medicine—reporting of subgroup analyses in clinical trials. N Engl J Med 357 : 2189–2194.

31. RiverosC, DechartresA, PerrodeauE, HaneefR, BoutronI, et al. (2013) Timing and completeness of trial results posted at ClinicalTrials.gov and published in journals. PLoS Med 10: e1001566.

32. BoutronI, DuttonS, RavaudP, AltmanDG (2010) Reporting and interpretation of randomized controlled trials with statistically nonsignificant results for primary outcomes. JAMA 303 : 2058–2064.

33. SmythRM, KirkhamJJ, JacobyA, AltmanDG, GambleC, et al. (2011) Frequency and reasons for outcome reporting bias in clinical trials: interviews with trialists. BMJ 342: c7153.

34. International Conference on Harmonisation of Technical Requirements for Registration of Pharmaceuticals for Human Use (1998) ICH topic E 9: statistical principles for clinical trials. Geneva: International Conference on Harmonisation of Technical Requirements for Registration of Pharmaceuticals for Human Use.

35. ChanAW, TetzlaffJM, AltmanDG, LaupacisA, GotzschePC, et al. (2013) SPIRIT 2013 statement: defining standard protocol items for clinical trials. Ann Intern Med 158 : 200–207.

36. SchulzKF, AltmanDG, MoherD (2010) Group C (2010) CONSORT 2010 statement: updated guidelines for reporting parallel group randomised trials. BMJ 340: c332.

This article was published in the journal PLOS Medicine, 2014, Issue 6.
