Prioritizing CD4 Count Monitoring in Response to ART in Resource-Constrained Settings: A Retrospective Application of Prediction-Based Classification

Background:

Global programs of anti-HIV treatment depend on sustained laboratory capacity to assess treatment initiation thresholds and treatment response over time. Currently, there is no valid alternative to CD4 count testing for monitoring immunologic responses to treatment, but laboratory cost and capacity limit access to CD4 testing in resource-constrained settings. Thus, methods to prioritize patients for CD4 count testing could improve treatment monitoring by optimizing resource allocation.

Methods and Findings:

Using a prospective cohort of HIV-infected patients (n = 1,956) monitored upon antiretroviral therapy initiation in seven clinical sites with distinct geographical and socio-economic settings, we retrospectively apply a novel prediction-based classification (PBC) modeling method. The model uses repeatedly measured biomarkers (white blood cell count and lymphocyte percent) to predict CD4+ T cell outcome through first-stage modeling and subsequent classification based on clinically relevant thresholds (CD4+ T cell count of 200 or 350 cells/µl). The algorithm correctly classified 90% (cross-validation estimate = 91.5%, standard deviation [SD] = 4.5%) of CD4 count measurements <200 cells/µl in the first year of follow-up; if laboratory testing is applied only to patients predicted to be below the 200-cells/µl threshold, we estimate a potential savings of 54.3% (SD = 4.2%) in CD4 testing capacity. A capacity savings of 34% (SD = 3.9%) is predicted using a CD4 threshold of 350 cells/µl. Similar results were obtained over the 3 y of follow-up available (n = 619). Limitations include a need for future economic healthcare outcome analysis, a need for assessment of extensibility beyond the 3-y observation time, and the need to assign a false positive threshold.

Conclusions:

Our results support the use of PBC modeling as a triage point at the laboratory, lessening the need for laboratory-based CD4+ T cell count testing; implementation of this tool could help optimize the use of laboratory resources, directing CD4 testing towards higher-risk patients. However, further prospective studies and economic analyses are needed to demonstrate that the PBC model can be effectively applied in clinical settings.

Please see later in the article for the Editors' Summary.

Published in the journal: PLoS Med 9(4): e1001207. doi:10.1371/journal.pmed.1001207

Category: Research Article

doi: https://doi.org/10.1371/journal.pmed.1001207

Introduction

Successful maintenance and expansion of anti-HIV-1 therapy programs in resource-limited settings depend on multiple factors, such as clinical thresholds to start antiretroviral therapy (ART), drug access, trained personnel, and laboratory infrastructure. World Health Organization (WHO) guidelines for HIV-1 therapy in adults recommend initiation of anti-HIV-1 therapy once the CD4 count drops below 350 cells/µl, with a clear indication to treat irrespective of clinical state if the CD4 count is below 200 cells/µl [1]. While the ideal monitoring of response to ART is dual (virological monitoring with high-sensitivity PCR as the benchmark to assess viral suppression, and monitoring of ART-mediated immune reconstitution via assessment of change in CD4 count [2]–[4]), this level of monitoring is often unsustainable within national health programs in resource-constrained settings because of its cost and the limitations of healthcare system infrastructure [5]–[11]. Although the development of viral resistance linked to ineffective monitoring remains a concern in resource-poor settings, monitoring of clinical response (e.g., initial weight gain) and immune reconstitution (i.e., rise in CD4 cell counts) has been broadly used as a primary tool to assess the success of therapy, because a lack of clinical response or of a rise in CD4 count is directly related to the risk of developing, or not recovering from, opportunistic infections. Indeed, WHO guidelines for patient monitoring address the imbalance between increasing treatment access and limited monitoring capacity by promoting definitions of therapy success such as the proportion of patients with CD4 count >200 cells/µl at 6, 12, and 24 mo after starting ART [1].

Despite the advent of newer, more cost-effective point-of-care devices for CD4+ T cell count determination using peripheral or capillary blood, laboratory-based CD4 counts used to assess disease progression, indication for therapy, and response to ART remain costly in terms of both economic and human resources (i.e., the need for specialized instrumentation and trained laboratory staff). Thus, numerous attempts have been made to identify low-cost surrogate markers that are widely available even in resource-limited settings, with the intent of eliminating the need for such intensive CD4 count testing within resource/capacity-limited national HIV therapy programs [12]. The WHO recommends the use of total lymphocyte count (TLC) as a surrogate marker of disease progression in untreated chronic HIV infection, recommending treatment for patients with TLC <1,200 cells/µl [1]. While useful for deciding when to start treatment, TLC and other surrogate markers have not been shown to be useful in monitoring therapy response and/or treatment failure [13]–[19]. To date, no strategy has been proposed to reduce the need for CD4 testing after ART initiation.

Using prediction-based classification (PBC) [20], a recently described model-based approach that accommodates repeatedly measured quantitative biomarkers for outcome prediction, we have developed a prioritization strategy to monitor response to ART based on baseline CD4 count and prospective white blood cell count (WBCC) and lymphocyte percent (Lymph%) measurements. In contrast to previous attempts focused on providing a direct surrogate marker for CD4 count, our approach could be used to direct limited healthcare resources to high-priority patients, namely those predicted to fall below predetermined CD4 count thresholds of clinical significance.

Methods

Cohorts

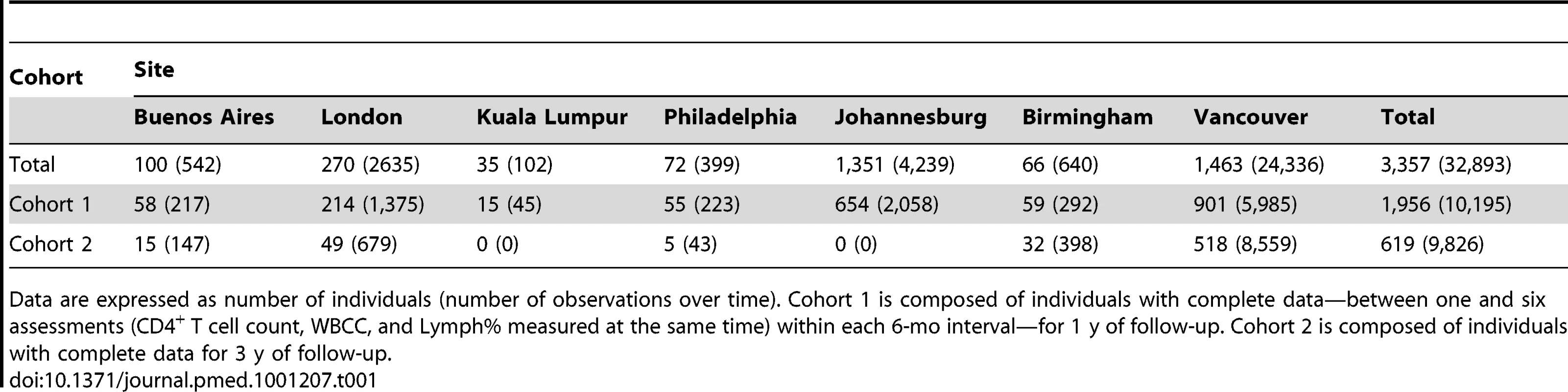

Anonymized data (WBCC, Lymph%, and CD4 count) were obtained from a cohort of 3,357 HIV-1-infected, ART-naïve individuals at the following clinical sites: Royal Free Hampstead NHS Trust, London, UK (used to generate the prediction rule); University of Alabama at Birmingham, Birmingham, Alabama, US; Jonathan Lax Center, Philadelphia FIGHT, Philadelphia, Pennsylvania, US; University of Malaya, Kuala Lumpur, Malaysia; University of the Witwatersrand, Johannesburg, South Africa; Fundación Huésped, Buenos Aires, Argentina; and University of British Columbia, Vancouver, British Columbia, Canada, for a total of 32,974 cumulative observations. Individual contributions from each site are summarized in Table 1. Participants were repeatedly observed for up to 3 y after ART initiation, and all patients had at least one assessment after baseline (ART initiation). There were no restrictions on initial CD4 count.

Tab. 1. Cohort description.

Data are expressed as number of individuals (number of observations over time). Cohort 1 is composed of individuals with complete data (between one and six assessments, with CD4+ T cell count, WBCC, and Lymph% measured at the same time, within each 6-mo interval) for 1 y of follow-up. Cohort 2 is composed of individuals with complete data for 3 y of follow-up.

Primary analysis is focused on a subset of individuals (n = 1,956; Cohort 1) with complete data, defined as having at least one and no more than six assessments (CD4+ T cell count, WBCC, and Lymph% measured at the same time) in each 6-mo period of follow-up for 1 y after initiation of ART. Additionally, we consider a subset of these individuals (n = 619; Cohort 2) with complete data for 3 y of follow-up to assess the longer-term feasibility of this strategy.
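The complete-data rule that defines Cohorts 1 and 2 can be written as a small eligibility check. This is a minimal Python sketch under stated assumptions: the visit tuple layout, the function names, and the treatment of interval boundaries as (0, 6], (6, 12], ... months are hypothetical choices, not taken from the authors' scripts.

```python
import math
from typing import Iterable, Optional, Tuple

# Hypothetical visit record: (months since ART start, CD4, WBCC, Lymph%),
# with None marking a measurement that was not taken at that visit.
Visit = Tuple[float, Optional[float], Optional[float], Optional[float]]


def is_complete(visit: Visit) -> bool:
    """A complete assessment has CD4, WBCC and Lymph% from the same visit."""
    _, cd4, wbcc, lymph = visit
    return cd4 is not None and wbcc is not None and lymph is not None


def has_complete_followup(visits: Iterable[Visit], years: int,
                          min_per_interval: int = 1,
                          max_per_interval: int = 6) -> bool:
    """True if every 6-month interval of follow-up holds between
    min_per_interval and max_per_interval complete assessments.
    Intervals are taken as (0, 6], (6, 12], ... months (an assumption)."""
    n_intervals = 2 * years
    counts = [0] * n_intervals
    for visit in visits:
        t = visit[0]
        if t <= 0 or t > 6 * n_intervals or not is_complete(visit):
            continue  # baseline, outside the window, or incomplete
        counts[math.ceil(t / 6) - 1] += 1  # (0,6] -> 0, (6,12] -> 1, ...
    return all(min_per_interval <= c <= max_per_interval for c in counts)
```

Under these assumptions, Cohort 1 membership corresponds to `has_complete_followup(visits, years=1)` and Cohort 2 to `years=3`.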

Statistical Analysis

We applied the PBC algorithm, which in brief involves fitting a mixed-effects model to the repeated measures of CD4 counts and, in turn, using model-derived estimates to define a prediction rule for whether post-baseline (start of ART) observations would be above predefined thresholds of 200 and 350 CD4+ T cells/µl. Patients with values predicted to be below these thresholds would be prioritized for actual CD4 testing. A comparison of algorithm performance with an alternative generalized linear modeling approach is detailed in Foulkes et al. [20] and shows improvements in sensitivity, positive predictive value, and negative predictive value for the same false positive rate (FPR). Briefly, the primary advantages of PBC over alternative strategies are the following: (1) PBC draws strength from the full range of continuous outcomes (through application of a linear model) while offering clinically relevant measures (such as positive and negative predictive value) through subsequent classification; and (2) it simultaneously incorporates multiple, repeatedly measured biomarkers observed at unevenly spaced intervals.

Formally, the PBC algorithm with cross-validation (CV) is given as follows, with additional details and formal mathematical derivations provided in Foulkes et al. [20]. Re-substitution estimates were determined using the same algorithm, with the full cohort used in place of both the learning and test samples (i.e., omitting the random split in step 1 and the ten repetitions of steps 1 to 6 described after step 6).

PBC Algorithm

Step 1

We first randomly selected a learning sample composed of approximately 90% of the individuals in the full cohort to derive the prediction rule. Sample data included baseline (defined as time of ART initiation) and repeated measurements of CD4 count, WBCC, and Lymph% up to 3 y after ART initiation. The remaining approximately 10% of individuals made up the test sample.
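Because each individual contributes many repeated observations, the split in step 1 is naturally made at the level of individuals rather than single observations, so that no patient appears in both samples. A minimal sketch, assuming a hypothetical list of patient identifiers and a fixed random seed for reproducibility:

```python
import random
from typing import List, Sequence, Tuple


def split_learning_test(patient_ids: Sequence, learn_frac: float = 0.9,
                        seed: int = 0) -> Tuple[List, List]:
    """Randomly assign whole individuals to a learning sample (about
    learn_frac of them) and a test sample (the rest), so that all repeated
    measurements of one patient fall on the same side of the split."""
    ids = list(dict.fromkeys(patient_ids))  # de-duplicate, keep first-seen order
    rng = random.Random(seed)
    rng.shuffle(ids)
    n_learn = round(learn_frac * len(ids))
    return ids[:n_learn], ids[n_learn:]
```

Passing the same seed reproduces the same split; using a different seed per repetition gives the independent splits needed for CV.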

Step 2

Based on the learning sample selected in step 1, we fitted a mixed-effects change-point model to repeatedly measured CD4 counts, with fixed effects allowing different slopes before and after 1 mo on ART, and random person-specific intercept and slope terms (for time). The two time slopes are intended to reflect the rapid rise in CD4 count during the first month after ART initiation, followed by the more gradual increase in CD4 over the remaining observation time. Additional fixed-effects terms for baseline CD4 count and baseline and time-varying WBCC and Lymph% are included as predictor variables. The model also includes fixed interaction effects between baseline CD4 count and (1) time before and after the change point, (2) baseline and time-varying values of WBCC, and (3) baseline and time-varying values of Lymph%. All terms in the model have Wald test p-values of less than 0.10 for the complete cohort analysis, and main effects are included whenever the corresponding interaction effects are statistically significant. Notably, the less stringent 0.10 significance level is appropriate at this stage because the algorithm additionally includes a CV procedure. Model fitting is performed using the lme() function of the nlme package in R version 2.11.1.
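The mean structure of the step 2 model can be made concrete by building the covariates for a single observation. The sketch below is illustrative only: model fitting in the study used lme() from R's nlme package, whereas this Python fragment merely assembles the fixed-effect terms (including the change-point term [Time−1]+ and the baseline-CD4 interactions) and evaluates a linear predictor. The covariate names, the use of months as the time unit, and any coefficient values passed in are assumptions, not the estimates in Table 3.

```python
from typing import Dict


def hinge(time_months: float, change_point: float = 1.0) -> float:
    """[Time - 1]+ : follow-up time after the change point, 0 before it."""
    return max(0.0, time_months - change_point)


def fixed_effects_row(time_months: float, cd4_0: float,
                      wbcc_0: float, wbcc_t: float,
                      lymph_0: float, lymph_t: float) -> Dict[str, float]:
    """Covariates for one post-baseline observation: main effects plus the
    interactions with baseline CD4 (cd4_0) described in step 2."""
    row = {
        "intercept": 1.0,
        "time": time_months,
        "time_after_1mo": hinge(time_months),
        "cd4_0": cd4_0,
        "wbcc_0": wbcc_0, "wbcc_t": wbcc_t,
        "lymph_0": lymph_0, "lymph_t": lymph_t,
    }
    # Baseline CD4 interacts with both time slopes and with WBCC and Lymph%.
    for name in ("time", "time_after_1mo", "wbcc_0", "wbcc_t", "lymph_0", "lymph_t"):
        row[f"cd4_0:{name}"] = cd4_0 * row[name]
    return row


def predicted_cd4(coefs: Dict[str, float], row: Dict[str, float],
                  b0: float = 0.0, b1: float = 0.0) -> float:
    """Fixed part plus a person-specific random intercept b0 and random time
    slope b1; b0 = b1 = 0 gives the population-average prediction."""
    fixed = sum(coefs.get(name, 0.0) * value for name, value in row.items())
    return fixed + b0 + b1 * row["time"]
```

For example, with placeholder coefficients `{"intercept": 100, "cd4_0": 1.0, "time": 10}`, an observation at 3 months with baseline CD4 150 receives a population-average prediction of 280.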

Step 3

Based on the model-based estimates derived in step 2, we calculated predicted values of CD4 count for all post-baseline observations in the learning sample. We also determined the lower bounds of corresponding one-sided level-α prediction intervals for a range of α values. Details about the calculation of these prediction intervals, including derivation of the prediction variance, as well as a discussion of their interpretation as credible intervals, are provided in Foulkes et al. [20]. For a given α, the lower bound for the jth time point of individual i is denoted $L_{ij}^{\alpha}$. A new binary predicted response, denoted $\hat{Z}_{ij}$, is then defined as an indicator for whether the corresponding lower bound is greater than K, where K = 200 or 350. That is, we let

$$\hat{Z}_{ij} = \begin{cases} 1 & \text{if } L_{ij}^{\alpha} > K \\ 0 & \text{otherwise} \end{cases} \qquad (1)$$

for each CD4 threshold K = 200 and 350. We selected 200 CD4+ T cells/µl as indicative of a risk of developing opportunistic infections, and 350 CD4+ T cells/µl as the WHO-recommended ART initiation threshold [1], defining high-priority patients (i.e., patients requiring laboratory-based testing) as those failing to maintain CD4 counts above either of these thresholds after ART initiation.
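Step 3 reduces to computing a lower prediction bound and comparing it with K. The sketch below assumes, for illustration only, that the prediction error is approximately normal with a known prediction variance, so that the one-sided level-α lower bound is the predicted value minus z(1−α) prediction standard errors; the actual derivation of the prediction variance is given in Foulkes et al. [20], and the function names are hypothetical.

```python
from statistics import NormalDist


def lower_bound(pred: float, pred_var: float, alpha: float) -> float:
    """Lower limit of a one-sided level-alpha prediction interval, assuming
    an approximately normal prediction error with variance pred_var."""
    z = NormalDist().inv_cdf(1.0 - alpha)  # standard normal (1 - alpha) quantile
    return pred - z * pred_var ** 0.5


def predict_above(pred: float, pred_var: float, alpha: float, k: float) -> int:
    """Equation (1): 1 if the lower bound exceeds threshold K, else 0.
    A 0 flags the observation for laboratory-based CD4 testing."""
    return int(lower_bound(pred, pred_var, alpha) > k)
```

With a prediction of 300 cells/µl and prediction SD of 20, the α = 0.05 lower bound is roughly 267, so the observation is cleared at K = 200; a prediction of 220 with the same SD is not.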

Step 4

Again based on the learning sample, we compared the predicted variable $\hat{Z}_{ij}$ to an indicator for whether the observed CD4 count is greater than K, which we denote $Z_{ij}$, where

$$Z_{ij} = \begin{cases} 1 & \text{if } \mathrm{CD4}_{ij} > K \\ 0 & \text{otherwise} \end{cases} \qquad (2)$$

and $\mathrm{CD4}_{ij}$ is the observed CD4 count at the jth time point for individual i. To evaluate a prediction rule for a given α level and K, we calculated the FPR, defined as the proportion of post-baseline observations predicted to be above the threshold among those observed to be at or below it, that is, $\mathrm{FPR} = \sum_{ij} \hat{Z}_{ij}(1 - Z_{ij}) \big/ \sum_{ij} (1 - Z_{ij})$.
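The observed indicator in Equation 2 and the FPR from step 4 can be computed directly from paired 0/1 indicators, where 1 means above K. A minimal sketch with hypothetical function names:

```python
from typing import Sequence


def observed_above(cd4: float, k: float) -> int:
    """Equation (2): 1 if the observed CD4 count is greater than K, else 0."""
    return int(cd4 > k)


def false_positive_rate(z_hat: Sequence[int], z_obs: Sequence[int]) -> float:
    """Step 4 FPR: among observations observed at or below K (z_obs == 0),
    the fraction predicted to be above K (z_hat == 1)."""
    if len(z_hat) != len(z_obs):
        raise ValueError("prediction and observation vectors differ in length")
    predicted_for_low = [h for h, o in zip(z_hat, z_obs) if o == 0]
    if not predicted_for_low:
        raise ValueError("no observations at or below the threshold")
    return sum(predicted_for_low) / len(predicted_for_low)
```

An FPR computed this way counts exactly the low CD4 values that the rule would have let pass without a laboratory test.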

Step 5

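The “optimal” prediction rule was defined for a given threshold K as the rule (across all rules defined by the range of α values) that maximizes the FPR in the learning sample, subject to the constraint that the FPR is less than a predefined cut point. Because a larger α raises every prediction-interval lower bound, more observations are predicted to be above K as α increases; this choice therefore also yields the greatest capacity savings among the rules that satisfy the FPR constraint. FPR cut points of 5% and 10% were considered clinically relevant. The α level corresponding to this optimal rule, denoted αoptimal, was fixed for all subsequent analyses in the test sample.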
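A minimal sketch of the step 5 selection, assuming a hypothetical callable `fpr_at` that returns the learning-sample FPR for a given α (for instance, built from the step 3 and step 4 computations):

```python
from typing import Callable, Iterable, Optional


def choose_alpha(alphas: Iterable[float], fpr_at: Callable[[float], float],
                 fpr_cut: float) -> Optional[float]:
    """Step 5: among candidate alpha levels whose learning-sample FPR is below
    fpr_cut, return the one with the largest FPR (first one wins on ties).
    Returns None when no candidate satisfies the constraint."""
    best_alpha: Optional[float] = None
    best_fpr = -1.0
    for alpha in alphas:
        fpr = fpr_at(alpha)
        if fpr < fpr_cut and fpr > best_fpr:
            best_alpha, best_fpr = alpha, fpr
    return best_alpha
```

The returned α plays the role of αoptimal and is then held fixed when the rule is applied to the test sample.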

Step 6

The test sample data were used to evaluate the optimal prediction rule as follows. Baseline CD4 counts, time since ART initiation, and baseline and post-baseline WBCC and Lymph% were used as inputs in the model derived in step 2 above to arrive at predicted CD4 counts at all measured post-baseline time points for individuals in the test sample. Notably, it is assumed that post-baseline CD4 count is not observed in the test sample, and so a correction for the empirical Bayes estimates from the mixed model is required, as described in Foulkes et al. [20]. Corresponding lower bounds for one-sided level-$\alpha_{\mathrm{optimal}}$ prediction intervals were determined; for the jth time point of individual i in the test sample, this lower bound is denoted $\tilde{L}_{ij}^{\alpha_{\mathrm{optimal}}}$. Binary predictions for all post-baseline CD4 counts within the test cohort were then defined according to Equation 1, with $L_{ij}^{\alpha}$ replaced by $\tilde{L}_{ij}^{\alpha_{\mathrm{optimal}}}$. The dichotomized observed CD4 counts, as given by Equation 2, were compared to these binary predictions to arrive at the cross-validated estimates of sensitivity, specificity, positive predictive value, negative predictive value, and capacity savings. Capacity savings is defined as the proportion of tests spared by use of the model: the number of post-baseline time points, across all individuals, at which CD4 count is predicted to be above the K threshold (and thus no CD4 test would be performed), divided by the total number of post-baseline time points.
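Once the test-sample predictions are dichotomized, all the cross-validated quantities in step 6 follow from a 2×2 cross-tabulation in which 1 means above K, consistent with the FPR definition in step 4. A sketch with hypothetical names; ratios with an empty denominator are returned as NaN rather than raising an error:

```python
import math
from typing import Dict, Sequence


def _ratio(num: int, den: int) -> float:
    return num / den if den else math.nan


def classification_summary(z_hat: Sequence[int], z_obs: Sequence[int]) -> Dict[str, float]:
    """Compare binary predictions (Equation 1) with observed indicators
    (Equation 2). Capacity savings = share of time points predicted above K,
    i.e., time points at which no laboratory CD4 test would be run."""
    pairs = list(zip(z_hat, z_obs))
    tp = sum(1 for h, o in pairs if h == 1 and o == 1)
    fp = sum(1 for h, o in pairs if h == 1 and o == 0)  # low CD4 missed
    fn = sum(1 for h, o in pairs if h == 0 and o == 1)  # unnecessary test
    tn = sum(1 for h, o in pairs if h == 0 and o == 0)
    return {
        "sensitivity": _ratio(tp, tp + fn),
        "specificity": _ratio(tn, tn + fp),  # equals 1 - FPR
        "ppv": _ratio(tp, tp + fp),
        "npv": _ratio(tn, tn + fn),
        "capacity_savings": _ratio(tp + fp, len(pairs)),
    }
```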

Steps 1 to 6 are repeated ten times, and the average and standard deviation (SD) of the estimates listed in step 6 are reported as CV estimates. As these parameters are interdependent, CV estimates are not consistently lower (or higher) than re-substitution estimates using the full cohort. Copies of the R scripts used are available at http://people.umass.edu/foulkes/software.html.
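The repetition and summary can be sketched as a small harness. Here `run_once` is a hypothetical stand-in for one pass through steps 1 to 6 (seeded so each repetition uses a different random split), and the SD is assumed to be the sample standard deviation across repetitions; the published R scripts remain the reference implementation.

```python
import statistics
from typing import Callable, Dict, List, Tuple


def repeat_cv(run_once: Callable[[int], Dict[str, float]],
              n_repeats: int = 10) -> Dict[str, Tuple[float, float]]:
    """Run one split/fit/select/evaluate cycle per seed and summarize each
    estimate as (mean, sample SD) across repetitions."""
    if n_repeats < 2:
        raise ValueError("need at least two repetitions for an SD")
    runs: List[Dict[str, float]] = [run_once(seed) for seed in range(n_repeats)]
    summary = {}
    for key in runs[0]:
        values = [r[key] for r in runs]
        summary[key] = (statistics.mean(values), statistics.stdev(values))
    return summary
```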

Results

Cohort Description and Follow-Up

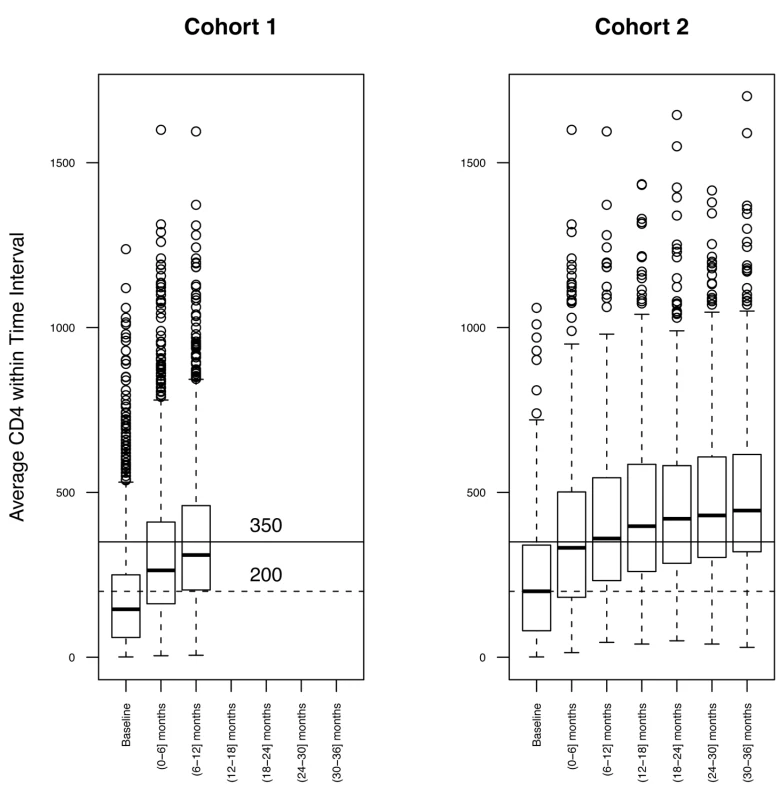

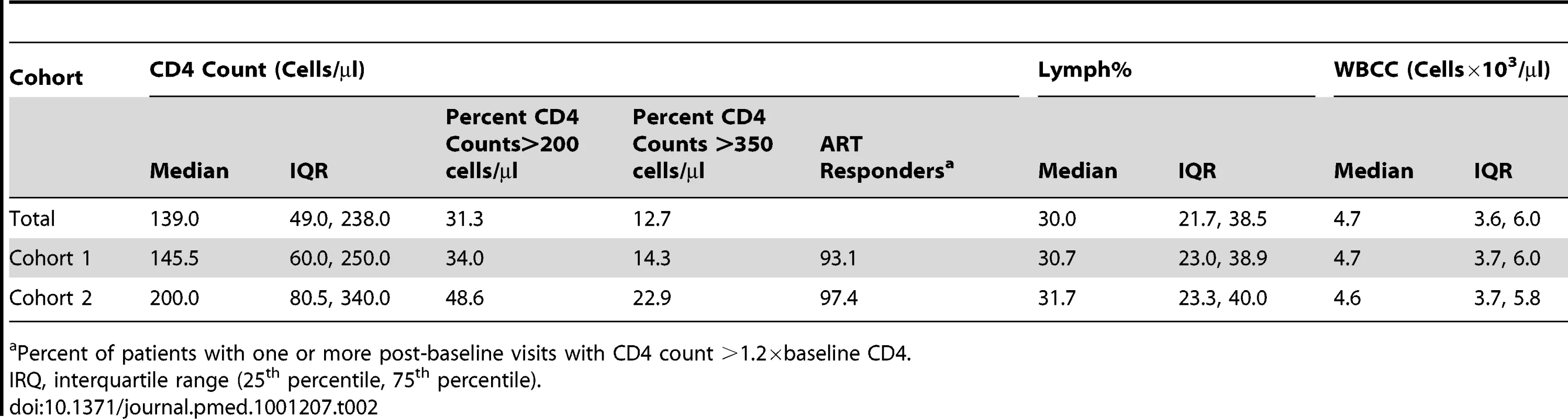

The geographical distributions of patients and corresponding numbers of observations over time are summarized in Table 1. For Cohort 1, the median baseline (pre-ART) CD4 count was 145.5 cells/µl; 34% of the patients initiated ART with a CD4 count >200 cells/µl, and 14.3% with a CD4 count >350 cells/µl. Median baseline WBCC was 4.7×10³ cells/µl, and median Lymph% was 30.7%. A detailed breakdown of these values for each cohort is given in Table 2. Unlike Lymph% and WBCC, median CD4 count and the fraction of patients above 200 or 350 CD4+ T cells/µl were higher in the longer follow-up subset (200 for Cohort 2, as compared to 139 for Cohort 1), possibly owing to the better clinical outcomes of patients who initiate treatment with higher CD4 counts, as well as the longer average follow-up in the London, Birmingham, and Vancouver cohorts. However, the proportion of ART responders, defined as patients who had a documented CD4 rise of at least 20% from baseline over the follow-up time, was similar across all cohorts. The overall response to ART initiation was confirmed by the observed rise of median CD4+ T cell count over the observation time, illustrated in 6-mo intervals in Figure 1 for the two cohorts.
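The responder definition reduces to a one-line check. A sketch following the footnote to Table 2 (at least one post-baseline CD4 count strictly greater than 1.2 times baseline); the function name is hypothetical:

```python
from typing import Iterable


def is_art_responder(baseline_cd4: float, post_baseline_cd4: Iterable[float]) -> bool:
    """True if any post-baseline CD4 count exceeds 1.2 x baseline CD4,
    i.e., a rise of more than 20% over the follow-up time."""
    return any(c > 1.2 * baseline_cd4 for c in post_baseline_cd4)
```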

Fig. 1. Distribution of CD4 count.

The distribution of CD4 count at 6-mo time intervals was assessed for both Cohort 1 (left) and Cohort 2 (right). Means were calculated for patients with multiple CD4 count assessments in the same interval.

Tab. 2. Baseline characteristics.

Percent of patients with one or more post-baseline visits with CD4 count >1.2×baseline CD4.

PBC Application

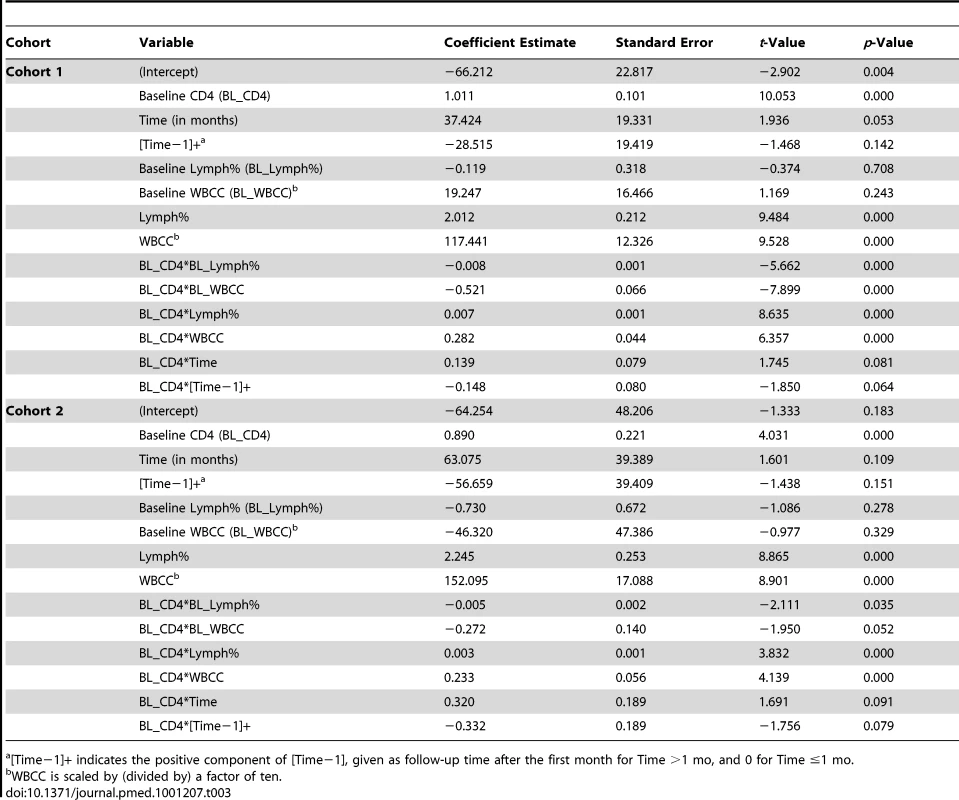

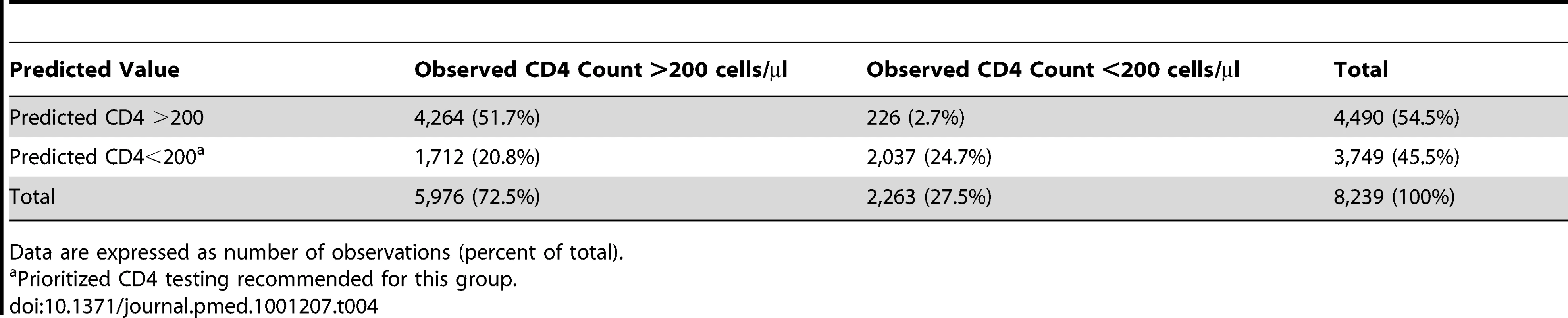

The results of fitting mixed-effects change-point models (as described in step 2 of the PBC algorithm) to Cohorts 1 and 2 are given in Table 3. The models suggest that the effects of WBCC, Lymph%, and time (before and after 1 mo on ART) are modified by baseline CD4 count (interaction terms are significant at the 0.10 level), and thus all main effects and interaction terms are included in the final model. The model-based estimates (coefficient estimates in Table 3) are used to derive the optimal prediction rule (as described in steps 3–5 of the PBC algorithm) using maximum FPRs of 5% and 10%. The results of applying the optimal rule to Cohort 1 data are given in Table 4. Of the 8,239 post-baseline observations, 5,976 (72.5%) had CD4 count >200 cells/µl, while 2,263 (27.4%) had CD4 count ≤200 cells/µl. Among observations with CD4 count ≤200 cells/µl, the algorithm correctly classified 2,037 (90%; CV estimate = 91.5%, SD = 4.1%); the corresponding FPR was 226/2,263 = 10% (CV estimate = 8.5%). Prioritized CD4 testing would be recommended for all observations with a predicted CD4 count <200 cells/µl (n = 3,749; 45.5%). The potential capacity savings based on this prioritization scheme, where the likelihood of not detecting a low CD4 count (FPR) is <10%, is 4,490/8,239 = 54.5% (CV estimate = 54.3%, SD = 4.2%). Alternatively, controlling the FPR at 5% would result in prioritizing testing for more observations (n = 4,705; 57.1%) and in a capacity savings of 42.9% (CV estimate = 44.4%, SD = 4.2%).
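The Cohort 1 re-substitution figures at the 200-cells/µl threshold and 10% FPR cut point can be re-derived from the reported counts. This short script uses only counts stated in the text; the variable names are illustrative.

```python
# Reported Cohort 1 counts, K = 200 cells/µl, FPR cut point 10% (re-substitution).
total_obs = 8239      # all post-baseline observations
obs_below = 2263      # observed CD4 <= 200 cells/µl
flagged_below = 2037  # observed <= 200 and predicted below: correctly sent for testing
pred_above = 4490     # predicted > 200: CD4 test spared

missed_below = obs_below - flagged_below   # observed <= 200 but predicted above
fpr = missed_below / obs_below             # step 4 definition
detected = flagged_below / obs_below       # share of low counts caught
capacity_savings = pred_above / total_obs  # step 6 definition
sent_for_testing = total_obs - pred_above  # observations still needing a lab test

print(f"missed: {missed_below}, FPR: {fpr:.1%}")
print(f"detected: {detected:.1%}, capacity savings: {capacity_savings:.1%}")
print(f"sent for CD4 testing: {sent_for_testing} ({sent_for_testing / total_obs:.1%})")
```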

Tab. 3. Mixed-effects change-point modeling results for Cohort 1.

[Time−1]+ indicates the positive component of [Time−1], given as follow-up time after the first month for Time >1 mo, and 0 for Time ≤1 mo.

Tab. 4. Observed and predicted values resulting from application of PBC to Cohort 1.

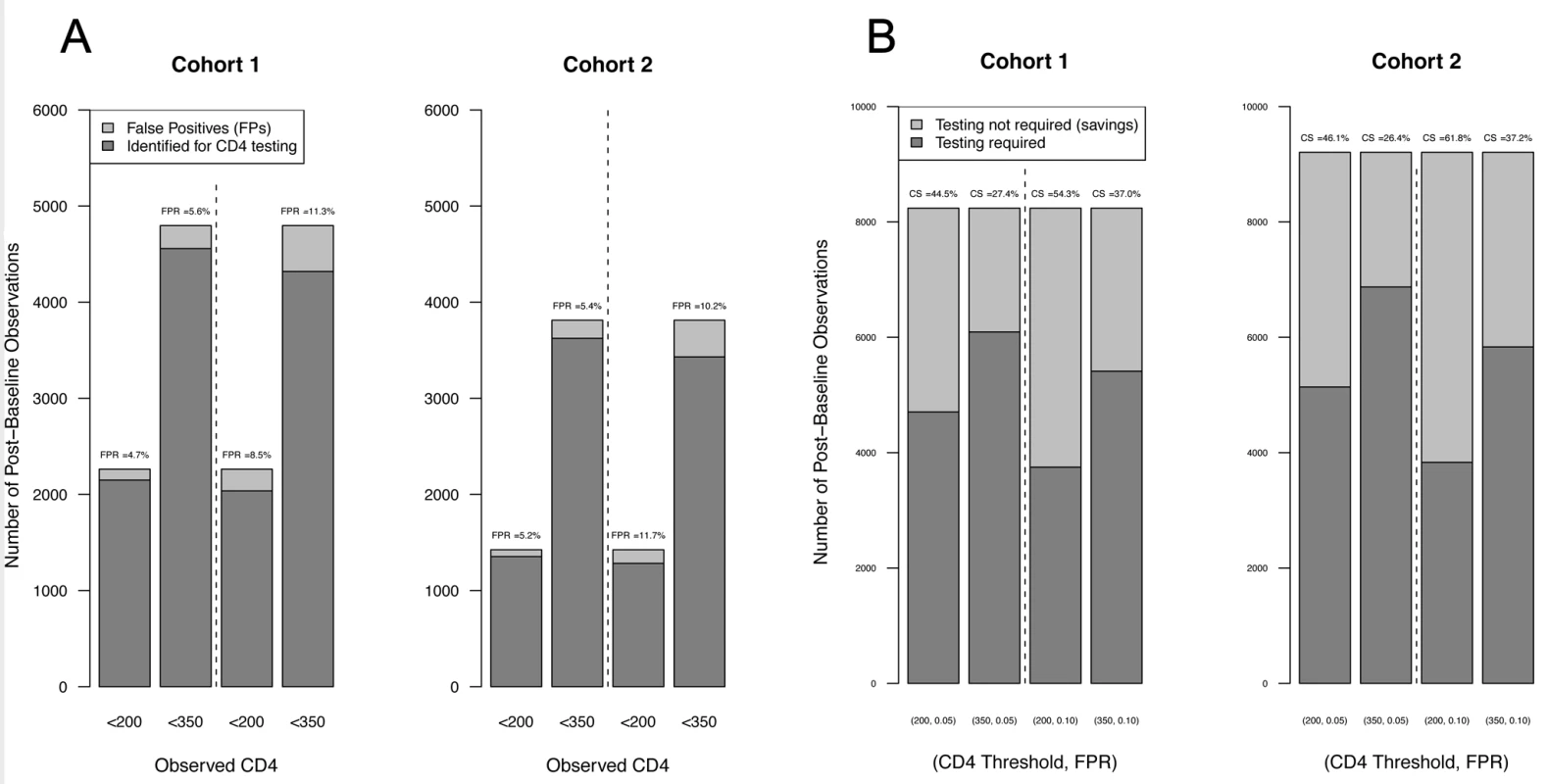

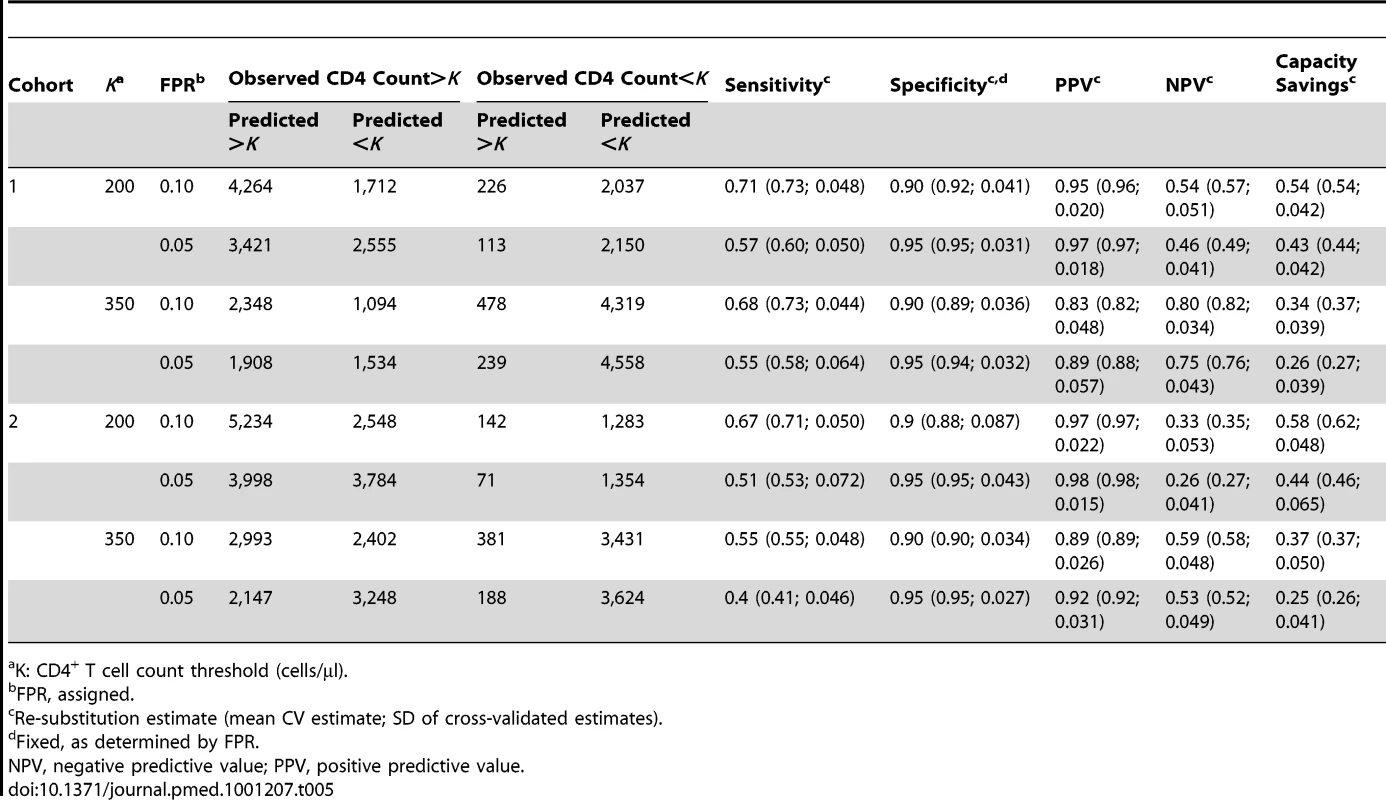

Data are expressed as number of observations (percent of total).

These results, as well as the results from applying a 350-cells/µl threshold for CD4 count, are summarized in Figure 2 for both cohorts. Additional details on cross-validated parameter estimates, including sensitivity, specificity, negative predictive value, positive predictive value, and capacity savings, as well as corresponding SDs, are provided in Table 5 for both cohorts and thresholds. Extending this analysis to Cohort 2 (inclusive of 3 y of follow-up) yielded broadly similar capacity savings, suggesting that the model remains applicable for at least 3 y from ART initiation without any intervening CD4 count assessment.

Fig. 2. Summary of model performance.

(A) Cross-validated estimates of FPRs. The bars represent the number of post-baseline observations observed to be below the threshold indicated on the x-axis, at the indicated FPRs, for Cohort 1 (left) and Cohort 2 (right). The dark shading indicates the number of observations correctly identified for laboratory-based CD4 testing (i.e., CD4 counts predicted to be and observed to be below threshold); lighter shading represents false positives (CD4 count incorrectly predicted as above threshold); cross-validated estimates of the FPRs are indicated above each bar. (B) Capacity savings (CS) estimates. Dark shading indicates the number of observations in Cohort 1 (left) and Cohort 2 (right) predicted to require laboratory-based CD4 testing (i.e., CD4 count predicted to be below threshold), and light shading the number of observations predicted not to require laboratory testing (i.e., CD4 count predicted to be above threshold) at the CD4 count threshold and FPR indicated below each bar.

Tab. 5. Re-substitution and CV counts and estimates for the PBC model.

K: CD4+ T cell count threshold (cells/µl).

Application Examples and Test Cost Comparison

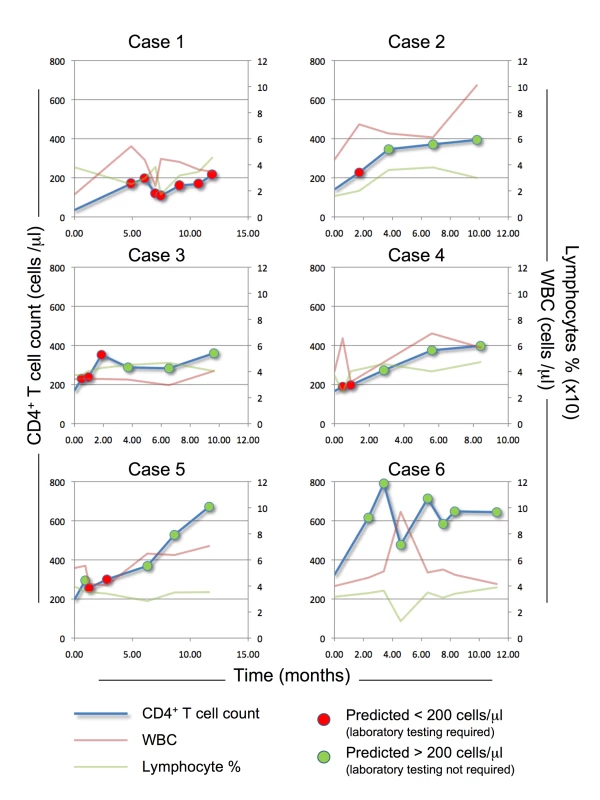

To illustrate the potential use of the model to provide individual predictions, we applied our algorithm to six representative individuals, selected from Cohort 2 based on initial CD4 count and the availability of multiple assessments. In the patients tested (Figure 3), the model performed well in predicting a CD4 count >200 cells/µl (green dots); in fact, it was always correct in these cases, whereas, as expected, at some visits patients predicted to have a CD4 count ≤200 cells/µl (red dots) actually had a CD4 count >200 cells/µl (false negatives). Because all cases predicted to be below threshold would be tested using traditional laboratory-based methods, real-time application of the model would not have exposed any of these patients to an undetected dangerously low CD4 count, while sparing 20 (57%) of the 35 CD4 laboratory tests performed after baseline.

Fig. 3. PBC predictive model application.

The PBC predictive model (FPR 10%, CD4 threshold = 200 cells/µl) was applied to six patients from Cohort 2 (cases 1–6), selected to represent a range of baseline CD4+ T cell counts (low, cases 1 and 2; medium, cases 3–5; and high, case 6). The red and green lines represent assessed WBCC (WBC) and Lymph%, respectively; the blue line represents assessed CD4+ T cell count. The PBC algorithm prediction at the corresponding visits is represented by red dots (predicted CD4+ T cell count ≤200 cells/µl, requiring laboratory-based testing) or green dots (predicted CD4+ T cell count >200 cells/µl, no laboratory-based testing required).

To assess the feasibility of applying our modeling scheme in a healthcare setting, we compared cost estimates for the PBC approach (at 200- and 350-cells/µl thresholds) to the estimated costs of a high-cost CD4 testing method (dual platform assessment) and a low-cost alternative (Guava platform, Millipore) as applied to a constrained-resource setting (South Asia). The details of this comparison are reported in Table S1. Briefly, according to our literature-based cost estimations, the PBC approach (a single initial laboratory-based CD4 assessment, followed by PBC application with a complete blood count performed for each individual, and CD4 assessment only for individuals predicted to have below-threshold CD4 values) could result in test cost savings when the complete blood count cost is below “breakeven points” ranging from US$10.90 (PBC with 200-cells/µl threshold, CD4 laboratory testing based on dual platform assessment) to US$1.38 (PBC with 350-cells/µl threshold, Guava-platform-based laboratory CD4 testing). Importantly, the estimated cost of PBC (US$0.80) is well below all of these thresholds, suggesting that the PBC method may prove to be a cost-effective treatment monitoring strategy in future cost-effectiveness analyses.
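As a rough illustration of what a breakeven point means, consider a deliberately simplified per-visit model: testing at every visit costs one CD4 test, whereas PBC-guided monitoring costs one complete blood count plus a CD4 test for the fraction of visits not spared. Equating the two gives a breakeven complete blood count cost equal to capacity savings times the CD4 test cost. This model is an assumption made here for illustration; it ignores the initial CD4 assessment and all other costs, and it is not the calculation reported in Table S1.

```python
def breakeven_cbc_cost(cd4_test_cost: float, capacity_savings: float) -> float:
    """Breakeven complete blood count (CBC) cost per visit, simplified model:
        test-everyone cost per visit = c_CD4
        PBC-guided cost per visit    = c_CBC + (1 - CS) * c_CD4
    Setting them equal gives c_CBC* = CS * c_CD4."""
    if not 0.0 <= capacity_savings <= 1.0:
        raise ValueError("capacity_savings must be a proportion")
    return capacity_savings * cd4_test_cost


def pbc_saves_money(cbc_cost: float, cd4_test_cost: float,
                    capacity_savings: float) -> bool:
    """True if PBC-guided monitoring is cheaper per visit in this model."""
    return cbc_cost < breakeven_cbc_cost(cd4_test_cost, capacity_savings)
```

For instance, with a hypothetical CD4 test cost of US$10 and capacity savings of 34%, the breakeven complete blood count cost in this model is about US$3.40.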

Discussion

We demonstrate that after obtaining a baseline WBCC and Lymph% and one laboratory-based CD4+ T cell assessment, the response to ART can be monitored relying on relatively low-cost clinical laboratory tests (i.e., WBCC and Lymph%) by using the PBC approach. This method enables us to predict whether CD4+ T cell count remains above predetermined safety thresholds with an estimated cross-validated FPR of 8.5%, and could potentially lead to testing capacity savings as compared to monitoring approaches based solely on repeated laboratory-based CD4 tests.

Given the high economic and capacity cost of laboratory-based CD4 assessments [21],[22], and the limited number of available accredited laboratories [23],[24]—circumstances that tax already stretched health systems as they implement national HIV treatment programs—a number of surrogate assessments (e.g., TLC) have been proposed to assess when HIV-infected patients require treatment, and to monitor them while they are undergoing treatment [12],[13],[15],[16],[25],[26]. Since to date these surrogates have not performed as well as CD4 counts in monitoring response to ART [13]–[19], two other options are open to improve current capacity utilization: (1) reducing the economic and human resource cost of performing laboratory-based CD4 tests, and/or (2) optimizing the use of existing resources to test only patients who are likely to need testing (i.e., patients likely to have dangerously low CD4 counts). The use of approaches that allow triaging patients at highest risk (e.g., patients who are failing treatment) for laboratory-based CD4 testing is expected to be particularly beneficial in resource-constrained settings characterized by high testing volume requirements (e.g., expanding treatment programs in sub-Saharan Africa).

Based on this premise, we conceived an algorithm that is intended as a triage/prioritization tool. To keep the approach clinically reasonable, we fixed the acceptable FPR at 0.05 or 0.1. Although minimizing the FPR is desirable, there is an inevitable trade-off between the FPR and capacity savings. We believe that this error rate (5%–10%) is clinically acceptable in light of the intrinsic variability of laboratory-based CD4 testing.

Notably, erroneously predicting a CD4 count to be below 200 or 350 cells/µl when it is actually above this level (1−sensitivity) is less relevant to patient safety: CD4 testing is recommended for all predicted failures, which eliminates the risk associated with this form of misclassification.

Our testing and validation indicate that the proposed model worked well over the 3-y follow-up time in our dataset. The possibility that periodic laboratory-based CD4 testing (e.g., every year) would improve and/or extend the predictive life of the model, to the point that it could be used continuously after ART initiation, remains open, as its determination will require dedicated prospective studies.

Our comparison of CD4 testing cost estimates indicates that use of the PBC strategy is anticipated to result in capacity savings, and possibly cost savings, at all the threshold levels assessed (Table S1). Further prospective healthcare economic studies modeling primary data (inclusive of all monitoring costs) obtained in target countries will be required to perform a net cost comparison, formally assessing the ramifications of the application and limitations of PBC testing for individual countries' or regions' testing capacity, as well as long-term cost per outcome. While such studies are beyond the scope of this article, the data presented here provide a strong rationale for them.

Notably, the model-derived estimates and predictive rule are first derived based on application of PBC to a large cohort, as described in this article. Through development of publicly accessible web-based tools that incorporate the results presented herein, the above-described scenarios can be applied to single-case analyses. Additional contributions to this data resource will likely allow for further model refinement and improvements in predictive performance.

Applications

In light of the considerations discussed above, our PBC-based tool could be useful in a number of scenarios.

Prioritization/triage of CD4+ T cell count testing at the laboratory level

In this case, a laboratory receiving a request for blood differential and CD4 count would first perform the differential, which requires limited time and commonly available resources; using the information obtained from this differential (WBCC and Lymph%), as well as the historic pre-ART baseline CD4 count (either stored or provided by the clinic), the laboratory could then run the prediction algorithm, and proceed to test only those patients who are predicted to have a CD4 count below a predetermined threshold.
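The laboratory-level workflow can be sketched as a filter over incoming requests. Everything here is hypothetical: the request fields, the function names, and especially the `rule` argument, which stands in for a fitted PBC prediction rule (steps 2 to 5) and must be supplied by the user.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class LabRequest:
    patient_id: str
    baseline_cd4: float   # stored pre-ART CD4 count (cells/µl)
    months_on_art: float
    wbcc: float           # from the differential just performed
    lymph_pct: float      # from the same differential (%)


def triage(requests: List[LabRequest],
           rule: Callable[[LabRequest], bool]) -> List[str]:
    """Return the ids of patients who still need a laboratory CD4 count.
    rule(r) is True when the CD4 count is predicted to be above the
    threshold, in which case the CD4 test is skipped."""
    return [r.patient_id for r in requests if not rule(r)]
```

For example, with a toy rule that clears only requests whose stored baseline CD4 exceeds 350 cells/µl (not a clinically validated rule), a batch containing patients with baseline CD4 of 120 and 420 returns only the first patient's identifier.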

Expansion of ART response monitoring at the clinic level

Due to cost limitations, some rollout programs allow only limited CD4 testing (e.g., every 6 mo). If ART-treated patients are additionally monitored at the rollout clinics for clinical visits and ART medication refills (e.g., every 3 mo), all patients could be monitored at these “non-CD4” visits for ART response by drawing a blood sample for a blood differential. Once the WBCC and Lymph% results are obtained from the laboratory, the clinic could employ the prediction tool to predict whether or not the patient's CD4 count is below a clinically meaningful threshold. Based on this, patients who are predicted to be failing treatment can be counseled for adherence, and/or further monitored by requesting a CD4 count. Because of the anticipated wider availability of complete blood count testing as compared to CD4 testing, this approach may result in shorter result turnaround time, partially reducing the acute need for point-of-care CD4 testing [27].

Reduction of confirmatory CD4 tests

Because of the intrinsic variability of current CD4 count tests, laboratory-based CD4 tests in many circumstances yield unexpected or doubtful results that are not in keeping with clinical observations (e.g., an unexpectedly low CD4 count in a patient with increasing WBCC, lymphocytes, hemoglobin, or weight, and a controlled viral load). In such cases, the CD4 count test may need to be repeated for confirmation before any clinically relevant action is taken (e.g., adherence counseling, or regimen alteration and resistance testing). Using the PBC method to independently check unexpected laboratory CD4 counts could limit the need for repeat CD4 measurements.
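One way such a confirmation check might be expressed is sketched below. The rule is a hypothetical illustration, not taken from the article: a result is treated as "unexpected" if it drops by more than an assumed fraction (`max_drop`, here 30%) from the previous CD4 count, and an unexpected result is accepted without repetition only when the predicted CD4 count falls on the same side of the clinical threshold.

```python
def review_lab_cd4(previous_cd4: float, lab_cd4: float, predicted_cd4: float,
                   threshold: float = 200.0, max_drop: float = 0.3) -> str:
    """Decide whether a laboratory CD4 result can be accepted or should be repeated.

    Hypothetical rule (not from the article):
      * a result is 'unexpected' if it falls by more than `max_drop` (as a
        fraction) relative to the previous CD4 count;
      * an unexpected result is accepted if the predicted CD4 count falls on the
        same side of `threshold`, and repeated otherwise.
    """
    if previous_cd4 <= 0:
        raise ValueError("previous_cd4 must be positive")
    relative_drop = (previous_cd4 - lab_cd4) / previous_cd4
    if relative_drop <= max_drop:
        return "accept"  # in keeping with the patient's history
    if (lab_cd4 < threshold) == (predicted_cd4 < threshold):
        return "accept"  # prediction independently supports the lab result
    return "repeat"      # lab and prediction disagree: confirm before acting


if __name__ == "__main__":
    print(review_lab_cd4(400, 380, 390))  # small change: accept
    print(review_lab_cd4(400, 150, 170))  # large drop, prediction agrees: accept
    print(review_lab_cd4(400, 150, 320))  # large drop, prediction disagrees: repeat
```

In practice, the definition of "unexpected" would come from clinical judgment (e.g., the discordant WBCC, hemoglobin, or weight trends mentioned above) rather than a single fixed fraction.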

As indicated throughout this section, our conclusions should be tempered by some limitations of this work. First, this work is not intended to provide a complete analysis of the economic and healthcare outcomes of applying PBC. Future prospective studies conducted in actual resource-constrained settings will be required to demonstrate the feasibility of this approach; here we provide the foundation and rationale for such studies, which will be needed to assess whether a PBC-based monitoring approach is a viable alternative to repeated CD4 testing. Second, it remains to be assessed how long this approach can be extended in time (e.g., by adding periodic CD4 testing), and whether periodic viral load testing would improve the clinical outcomes of PBC-based monitoring. Third, PBC requires specifying an acceptable false positive rate (FPR). Although the observed rate may be lower than the specified one, depending on the threshold used, PBC retains a small intrinsic probability of error. Given the wide variability of CD4 count test results, we do not consider this problematic; nevertheless, collecting additional data (and possibly introducing other parameters into the model, such as trends over time) might improve model fit and accuracy, and should be considered in future study design.
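The trade-off between a tolerated error rate and testing capacity can be illustrated with a small sketch. Here the tolerated error is assumed to be the fraction of truly below-threshold measurements that the rule would leave untested; this is an assumption and may not match the exact FPR definition used in the article. The function names and the toy numbers are hypothetical, not study data, and the estimates are in-sample, whereas the figures reported in this article come from cross-validation.

```python
import math
from typing import Sequence, Tuple


def choose_cutoff(predicted: Sequence[float], observed: Sequence[float],
                  threshold: float = 200.0, max_miss_rate: float = 0.10) -> float:
    """Smallest cutoff c such that the rule 'test if predicted <= c' leaves at most
    `max_miss_rate` of the truly below-threshold measurements untested."""
    if not 0 <= max_miss_rate < 1:
        raise ValueError("max_miss_rate must be in [0, 1)")
    low_preds = sorted(p for p, o in zip(predicted, observed) if o < threshold)
    if not low_preds:
        raise ValueError("no observed CD4 values below the threshold")
    # floor() keeps the number of missed low measurements within the tolerance
    allowed_misses = math.floor(max_miss_rate * len(low_preds))
    must_flag = len(low_preds) - allowed_misses
    # Cutoff at the must_flag-th smallest prediction among truly low measurements
    return low_preds[must_flag - 1]


def evaluate(predicted: Sequence[float], observed: Sequence[float], cutoff: float,
             threshold: float = 200.0) -> Tuple[float, float]:
    """Return (sensitivity, fraction of tests spared) for 'test if predicted <= cutoff'."""
    flagged = [p <= cutoff for p in predicted]
    truly_low = [o < threshold for o in observed]
    n_low = sum(truly_low)
    if n_low == 0:
        raise ValueError("no observed CD4 values below the threshold")
    sensitivity = sum(f and t for f, t in zip(flagged, truly_low)) / n_low
    spared = 1 - sum(flagged) / len(flagged)
    return sensitivity, spared


if __name__ == "__main__":
    # Toy predicted and observed CD4 counts (cells/µl), not study data.
    pred = [120, 150, 180, 210, 260, 300, 350, 400, 450, 500]
    obs = [100, 170, 190, 230, 180, 320, 360, 390, 480, 520]
    for rate in (0.0, 0.25):
        c = choose_cutoff(pred, obs, max_miss_rate=rate)
        sens, spared = evaluate(pred, obs, c)
        print(f"miss rate <= {rate:.0%}: cutoff={c}, sensitivity={sens:.0%}, spared={spared:.0%}")
```

With these toy numbers, tolerating no missed low counts spares half of the tests, while tolerating one miss in four spares 70%, which mirrors the qualitative point that a larger acceptable error buys more capacity savings.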

Finally, it is important to note that the PBC-based method is not intended to substitute for laboratory-based CD4 testing, nor to establish a “second tier” of healthcare standards for developing countries. Rather, we propose this method as a potentially useful “triage” tool that directs available laboratory testing capacity to high-priority patients. As a tool for optimizing the use of existing resources, our PBC-based method would be most beneficial in settings where laboratory capacity is currently limited by funding, human resources, or structural constraints.

Conclusion

We propose a noninvasive, rapid turnaround method that could be applied to predict CD4 failure (i.e., a drop below clinically meaningful thresholds) in HIV-infected patients undergoing ART. By sparing up to 54% of current laboratory-based testing using a CD4 count threshold of 200 cells/µl, the implementation of our method could help focus laboratory-based CD4 count testing capacity on patients with higher likelihood of CD4 failure. This work provides the basis for future prospective testing of the model's overall safety, cost-effectiveness, and clinical outcomes in low-resource settings.


References

1. World Health Organization (2010) Antiretroviral therapy for HIV infection in adults and adolescents: recommendations for a public health approach. Geneva: World Health Organization.

2. Delta Coordinating Committee and Virology Group (1999) An evaluation of HIV RNA and CD4 cell count as surrogates for clinical outcome. AIDS 13: 565-573.

3. Lima VD, Fink V, Yip B, Hogg RS, Harrigan PR (2009) Association between HIV-1 RNA level and CD4 cell count among untreated HIV-infected individuals. Am J Public Health 99 Suppl 1: S193-S196.

4. Badri M, Lawn SD, Wood R (2008) Utility of CD4 cell counts for early prediction of virological failure during antiretroviral therapy in a resource-limited setting. BMC Infect Dis 8: 89.

5. Mangham LJ, Hanson K (2010) Scaling up in international health: what are the key issues? Health Policy Plan 25: 85-96.

6. Cleary SM (2010) Commentary: trade-offs in scaling up HIV treatment in South Africa. Health Policy Plan 25: 99-101.

7. Cleary SM, McIntyre D, Boulle AM (2008) Assessing efficiency and costs of scaling up HIV treatment. AIDS 22 Suppl 1: S35-S42.

8. Van Damme W, Kober K, Kegels G (2008) Scaling-up antiretroviral treatment in Southern African countries with human resource shortage: how will health systems adapt? Soc Sci Med 66: 2108-2121.

9. Cohen GM (2007) Access to diagnostics in support of HIV/AIDS and tuberculosis treatment in developing countries. AIDS 21 Suppl 4: S81-S87.

10. Barnighausen T, Bloom DE, Humair S (2007) Human resources for treating HIV/AIDS: needs, capacities, and gaps. AIDS Patient Care STDS 21: 799-812.

11. Abimiku AG (2009) Building laboratory infrastructure to support scale-up of HIV/AIDS treatment, care, and prevention: in-country experience. Am J Clin Pathol 131: 875-886.

12. Chen RY, Westfall AO, Hardin JM, Miller-Hardwick C, Stringer JS (2007) Complete blood cell count as a surrogate CD4 cell marker for HIV monitoring in resource-limited settings. J Acquir Immune Defic Syndr 44: 525-530.

13. Calmy A, Ford N, Hirschel B, Reynolds SJ, Lynen L (2007) HIV viral load monitoring in resource-limited regions: optional or necessary? Clin Infect Dis 44: 128-134.

14. Schooley RT (2007) Viral load testing in resource-limited settings. Clin Infect Dis 44: 139-140.

15. Bagchi S, Kempf MC, Westfall AO, Maherya A, Willig J (2007) Can routine clinical markers be used longitudinally to monitor antiretroviral therapy success in resource-limited settings? Clin Infect Dis 44: 135-138.

16. Miiro G, Nakubulwa S, Watera C, Munderi P, Floyd S (2010) Evaluation of affordable screening markers to detect CD4+ T-cell counts below 200 cells/µl among HIV-1-infected Ugandan adults. Trop Med Int Health 15: 396-404.

17. Gupta A, Gupte N, Bhosale R, Kakrani A, Kulkarni V (2007) Low sensitivity of total lymphocyte count as a surrogate marker to identify antepartum and postpartum Indian women who require antiretroviral therapy. J Acquir Immune Defic Syndr 46: 338-342.

18. Marshall S, Sippy N, Broome H, Abayomi A (2006) Quality control of the total lymphocyte count parameter obtained from routine haematology analyzers, and its relevance in HIV management. Afr J Med Med Sci 35: 161-163.

19. Gitura B, Joshi MD, Lule GN, Anzala O (2007) Total lymphocyte count as a surrogate marker for CD4+ T cell count in initiating antiretroviral therapy at Kenyatta National Hospital, Nairobi. East Afr Med J 84: 466-472.

20. Foulkes AS, Azzoni L, Xiaohong L, Johnson MA, Smith C (2010) Prediction based classification for longitudinal biomarkers. Ann Appl Stat 4: 1476-1497.

21. Pattanapanyasat K, Phuang-Ngern Y, Sukapirom K, Lerdwana S, Thepthai C (2008) Comparison of 5 flow cytometric immunophenotyping systems for absolute CD4+ T-lymphocyte counts in HIV-1-infected patients living in resource-limited settings. J Acquir Immune Defic Syndr 49: 339-347.

22. Peter T, Badrichani A, Wu E, Freeman R, Ncube B (2008) Challenges in implementing CD4 testing in resource-limited settings. Cytometry B Clin Cytom 74 Suppl 1: S123-S130.

23. Peter TF, Rotz PD, Blair DH, Khine AA, Freeman RR (2010) Impact of laboratory accreditation on patient care and the health system. Am J Clin Pathol 134: 550-555.

24. Marinucci F, Medina-Moreno S, Paterniti AD, Wattleworth M, Redfield RR (2011) Decentralization of CD4 testing in resource-limited settings: 7 years of experience in six African countries. Cytometry A 79: 368-374.

25. Kumarasamy N, Mahajan AP, Flanigan TP, Hemalatha R, Mayer KH (2002) Total lymphocyte count (TLC) is a useful tool for the timing of opportunistic infection prophylaxis in India and other resource-constrained countries. J Acquir Immune Defic Syndr 31: 378-383.

26. Mahajan AP, Hogan JW, Snyder B, Kumarasamy N, Mehta K (2004) Changes in total lymphocyte count as a surrogate for changes in CD4 count following initiation of HAART: implications for monitoring in resource-limited settings. J Acquir Immune Defic Syndr 36: 567-575.

27. Zachariah R, Reid SD, Chaillet P, Massaquoi M, Schouten EJ (2011) Viewpoint: why do we need a point-of-care CD4 test for low-income countries? Trop Med Int Health 16: 37-41.


Published in PLOS Medicine, 2012, Issue 4.
