Microscopy Quality Control in Médecins Sans Frontières Programs in Resource-Limited Settings


Published in the journal: PLoS Med 7(1): e1000206 (2010). doi:10.1371/journal.pmed.1000206

Category: Health in Action


The Challenge

The international humanitarian medical aid organization Médecins Sans Frontières/Doctors Without Borders (MSF) supports a wide network of medical laboratories in resource-constrained countries. Although MSF has always prioritized quality control (QC) for laboratory testing, before 2005 we faced two significant limitations. First, the QC workload was unsustainable in many programs because MSF used the traditional protocol of reexamining 10% of negative slides and all positive slides, which is no longer considered practical [1]–[3]. Second, MSF had no system for central data analysis, as QC was performed independently at the individual laboratory level without standardized protocols.

In May 2005, MSF Operational Centre Amsterdam (MSF-OCA) developed and implemented a standardized, centrally reporting QC program to monitor the quality of microscopy for malaria, pulmonary tuberculosis (TB), and leishmaniasis. The malaria component of this protocol has been adapted by the World Health Organization (WHO) as the recommended international standard for malaria microscopy QC [4]. Here we describe the QC protocol and analyze its results over a 3-year period, an analysis that shows how the QC protocol has contributed to improved performance.

The Protocol

The QC protocol was designed to (1) have a small sample size to be feasible across all settings; (2) enable reliable analysis; (3) monitor both false-positive (FP) and false-negative (FN) results; and (4) be applicable to all microscopy testing.

Monthly QC Sample

Sample size

The MSF-OCA protocol is based on a sample size of ten slides/month/site (for each test), as field experience has demonstrated that this QC workload is sustainable in most settings, and on the premise that it is better to perform less QC well than more QC poorly. A small sample size is also important to avoid overloading the limited capacity of the reference laboratory in many resource-constrained settings. Programs are encouraged to include more QC slides if this can be achieved without compromising the quality of the reexamination.
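To make the workload difference concrete, the sketch below compares the monthly reexamination load of the traditional protocol (all positive slides plus 10% of negative slides) with the fixed MSF-OCA sample of ten slides. The slide volumes and positivity rates are hypothetical values chosen only for illustration; they are not data from MSF programs.

```python
# Hypothetical comparison of monthly reexamination workload for one test at one site.
FIXED_QC_LOAD = 10  # MSF-OCA: ten slides per month per site per test


def traditional_qc_load(total_slides: int, positivity: float) -> int:
    """Traditional protocol: all positive slides plus 10% of negatives (rounded up)."""
    positives = round(total_slides * positivity)
    negatives = total_slides - positives
    return positives + (negatives + 9) // 10  # integer ceiling of negatives / 10


# Hypothetical monthly volumes and positivity rates, for illustration only.
for total, rate in [(300, 0.05), (1000, 0.20), (2000, 0.40)]:
    print(f"{total} slides, {rate:.0%} positive: "
          f"traditional {traditional_qc_load(total, rate)}, fixed {FIXED_QC_LOAD}")
```

Under these made-up volumes the traditional protocol requires 44, 280, and 920 slides per month, while the fixed sample stays at ten regardless of workload.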

Sample selection and reexamination

In summary, each month for each test:

1. Five weak positive slides are selected randomly from all weak positive slides; if there are <5 weak positive slides, all weak positive slides are selected.
2. Five negative slides are selected randomly from all negative slides; if there are <5 negative slides, all negative slides are selected.
3. If there are <5 weak positive (or negative) slides, the number of negative (or weak positive) slides is increased to give a total minimum sample size of ten.
4. Strong positive slides are excluded from selection in the QC sample.
5. Laboratories unable to perform QC on a minimum of ten slides are assessed on an individual basis.
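The selection rules above can be sketched as a small function. This is an illustration under stated assumptions, not MSF-OCA software: the `Slide` record, its `slide_id` field, and the result labels are hypothetical, slides are assumed to be already classified as weak or strong positive, and the function signals rule 5 by returning `None`.

```python
import random
from dataclasses import dataclass

TARGET_PER_CLASS = 5  # weak positives and negatives sought per month
MIN_TOTAL = 10        # minimum monthly QC sample


@dataclass
class Slide:
    slide_id: str  # hypothetical register identifier
    result: str    # hypothetical labels: "negative", "weak_positive", "strong_positive"


def select_monthly_qc(slides, rng=None):
    """Return the monthly QC sample for one test, or None when fewer than
    MIN_TOTAL eligible slides exist (laboratory assessed individually)."""
    rng = rng or random.Random()
    # Rule 4: strong positives are never eligible.
    weak = [s for s in slides if s.result == "weak_positive"]
    neg = [s for s in slides if s.result == "negative"]
    if len(weak) + len(neg) < MIN_TOTAL:
        return None  # rule 5
    # Rules 1 and 2: up to five slides from each class.
    n_weak = min(TARGET_PER_CLASS, len(weak))
    n_neg = min(TARGET_PER_CLASS, len(neg))
    # Rule 3: top up from the other class to reach ten in total.
    shortfall = MIN_TOTAL - n_weak - n_neg
    if len(weak) < TARGET_PER_CLASS:
        n_neg += shortfall
    else:
        n_weak += shortfall  # shortfall is 0 when both classes have >= 5 slides
    return rng.sample(weak, n_weak) + rng.sample(neg, n_neg)
```

Passing a seeded `random.Random` makes a month's selection reproducible, which may help if the sample needs to be audited later.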

Blinded QC slides are reexamined within 4 weeks in the field by either a reference laboratory or an independent skilled laboratory technician.

Weak positive slides are defined as ≤9 trophozoites/acid-fast bacilli (AFB)/10 high power fields. This definition was applied consistently across laboratory sites. Postimplementation experience now suggests that the threshold for a weak positive should be lowered to ≤9 trophozoites/AFB/100 high power fields.

Protocol Reliability

While small sample QC has the important advantage of practicality, maintaining reliable analysis is also essential. To compensate for the small number of QC slides reexamined each month, our QC protocol analyzes cumulative data over 4-month periods, referred to here as “cohort analysis”. These 4-month cohorts increase the sample size analyzed and are a compromise between the greater statistical stringency of analyzing more results over a longer period (e.g., 12 months) and the greater immediacy of monitoring current laboratory performance over a shorter period.
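A minimal sketch of cohort pooling follows. It assumes cohorts are fixed calendar blocks (January–April, May–August, September–December), which is inferred from the Findings describing May–December 2005 and January–August 2008 as two cohorts each. The tuple layout of the monthly reports is a hypothetical format.

```python
from collections import defaultdict


def cohort_key(year: int, month: int) -> tuple:
    """Map a month to (year, cohort): 1 = Jan-Apr, 2 = May-Aug, 3 = Sep-Dec (assumed)."""
    if not 1 <= month <= 12:
        raise ValueError("month must be between 1 and 12")
    return year, (month - 1) // 4 + 1


def pool_by_cohort(monthly_reports):
    """Sum monthly QC counts into 4-month cohorts.

    monthly_reports: iterable of (year, month, slides_checked, agreements),
    a hypothetical reporting format.
    """
    pooled = defaultdict(lambda: {"months": 0, "checked": 0, "agreed": 0})
    for year, month, checked, agreed in monthly_reports:
        cohort = pooled[cohort_key(year, month)]
        cohort["months"] += 1  # number of monthly reports received
        cohort["checked"] += checked
        cohort["agreed"] += agreed
    return dict(pooled)
```

Counting the monthly reports in each cohort keeps the information needed to decide later whether a cohort has enough data to analyze.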

False-Positive and False-Negative Analysis

To enable FP analysis on small samples, our protocol uses biased sampling to increase the number of positive slides available for reexamination, and the targeting of weak positive slides to increase discriminatory power.

Biased sampling

QC protocols that use a small sample size with random sampling of all slides, such as lot quality assurance sampling (LQAS) [2], have a potential disadvantage: at low prevalence, and when QC results are analyzed over short periods, too few positive slides are sampled to monitor false positivity adequately. To address this, the MSF-OCA protocol uses a biased QC sample of an equal number (whenever possible) of weak positive and negative slides to enable both FP and FN analysis.
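A back-of-envelope calculation shows the problem. Assuming ten slides are drawn at random each month, a laboratory with positivity rate p contributes on average 40p positive slides per 4-month cohort, whereas the biased design yields up to five weak positives per month. The positivity rates below are hypothetical.

```python
SLIDES_PER_MONTH = 10
MONTHS_PER_COHORT = 4
WEAK_POSITIVES_PER_MONTH = 5  # biased design, when enough weak positives exist


def expected_positives_random(positivity: float) -> float:
    """Expected positive slides per cohort when all slides are sampled at random."""
    return SLIDES_PER_MONTH * MONTHS_PER_COHORT * positivity


def positives_biased(weak_available_per_month: int) -> int:
    """Positive slides per cohort under the biased design (capped at five per month)."""
    return MONTHS_PER_COHORT * min(WEAK_POSITIVES_PER_MONTH, weak_available_per_month)


# Hypothetical positivity rates, for illustration only.
for p in (0.02, 0.05, 0.20):
    print(f"positivity {p:.0%}: random ~{expected_positives_random(p):.1f}, "
          f"biased {positives_biased(5)}")
```

At 5% positivity random sampling averages about two positives per cohort, far fewer than the ten positives the study required for FP analysis, while the biased sample provides 20.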

Targeting weak positives

The protocol selects only weak positive slides because errors of false positivity are most likely to occur during routine microscopy through microscopists reporting negative findings as weakly positive (to be “on the safe side”) [5], or through the misidentification of artifacts as parasites [1]. Using weak positive slides also has greater discriminatory power than reexamining strongly positive slides [1],[6],[7].

However, because FP results are more likely to occur among weak positive slides, reexamining only weak positive slides (rather than all positive slides) may overestimate the FP frequency in routine microscopy. We correct for this by using the formula:

$$\text{corrected FP (\%)} = \text{FP (\%) among reexamined weak positive slides} \times \frac{\text{number of weak positive slides}}{\text{total number of positive slides}}$$

A limitation of this correction is that it assumes a negligible FP frequency for strong positive slides.
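A minimal sketch of this correction, assuming it scales the FP frequency observed among reexamined weak positive slides by the share of weak positives among all positive slides in the laboratory register for the same period. That is the form implied by the stated limitation that strong positives contribute no false positives. The parameter names are hypothetical.

```python
def corrected_fp_percent(fp_weak: int, weak_checked: int,
                         weak_total: int, all_positive_total: int) -> float:
    """Estimate the routine FP frequency (%) from weak positive QC results.

    fp_weak: reexamined weak positive slides found negative.
    weak_checked: weak positive slides reexamined.
    weak_total, all_positive_total: register counts for the same period.
    Assumes (as the protocol does) negligible FP among strong positives.
    """
    if weak_checked == 0 or all_positive_total == 0:
        raise ValueError("need reexamined weak positives and at least one positive")
    fp_among_weak = 100.0 * fp_weak / weak_checked
    return fp_among_weak * weak_total / all_positive_total


# Hypothetical cohort: 2 of 20 weak positives were FP; 60 of 240 positives were weak.
print(corrected_fp_percent(2, 20, 60, 240))
```

In this invented example the raw 10% FP frequency among weak positives corresponds to an estimated 2.5% across all positive slides.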

Common Protocol for All Microscopy

A primary objective for MSF-OCA was to develop a protocol that could be used for all microscopy testing. Although LQAS is recommended by WHO and others for AFB direct-smear TB analysis [3], we found this methodology unsuitable for malaria microscopy because determining the LQAS sample size is problematic when there is seasonal variation in the positivity rate. Our protocol therefore uses a fixed rather than variable number of QC slides.

Laboratory Performance Analysis

All QC results were reported to the central office in Amsterdam, which enabled comparative monitoring of results across all programs and the identification of poorly performing laboratories. Summarized analysis was reported back to the field to enable individual laboratories to compare their performance to other laboratories in similar settings.

We use percentage agreement because it is simple, direct, and understandable at all levels [1]. Laboratory performance was considered satisfactory if the percentage agreement between the laboratory results and the reexamined results was equal to or exceeded the internal standards set by MSF-OCA (simple cut-off analysis).
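Percentage agreement and the simple cut-off analysis can be sketched as follows. This treats each result as a positive/negative label, which is a simplification; the `("pos", "neg")` encoding is hypothetical, and the default standard of 95% mirrors the internal MSF-OCA standard reported in the Findings.

```python
def percent_agreement(pairs) -> float:
    """Percentage of QC slides whose field result matches the reexamination.

    pairs: sequence of (field_result, reexam_result), e.g. ("pos", "neg").
    """
    pairs = list(pairs)
    if not pairs:
        raise ValueError("no QC slides to compare")
    agreed = sum(1 for field, reexam in pairs if field == reexam)
    return 100.0 * agreed / len(pairs)


def meets_standard(agreement: float, standard: float = 95.0) -> bool:
    """Simple cut-off analysis: satisfactory if agreement equals or exceeds the standard."""
    return agreement >= standard


# Hypothetical cohort: 19 of 20 QC slides agree with the reexamination.
cohort = [("pos", "pos")] * 10 + [("neg", "neg")] * 9 + [("neg", "pos")]
print(percent_agreement(cohort), meets_standard(percent_agreement(cohort)))
```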

Findings

In contrast to stable programs, such as government health laboratory networks, MSF operates as an emergency humanitarian organization, and laboratory programs open and close according to changing priorities. Therefore, the QC analysis presented here reflects the overall performance of MSF-OCA programs over 2005–2008 with a changing composition of laboratories.

Because only a limited number of laboratories performed leishmaniasis testing, these findings are not presented here.

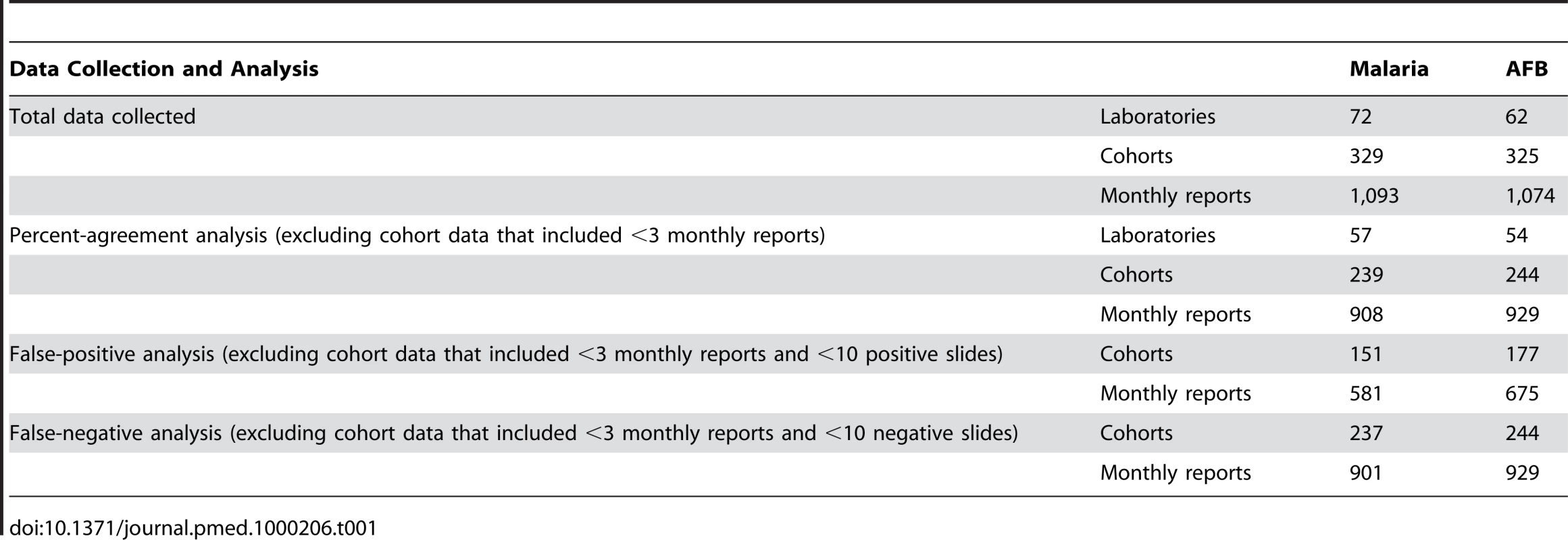

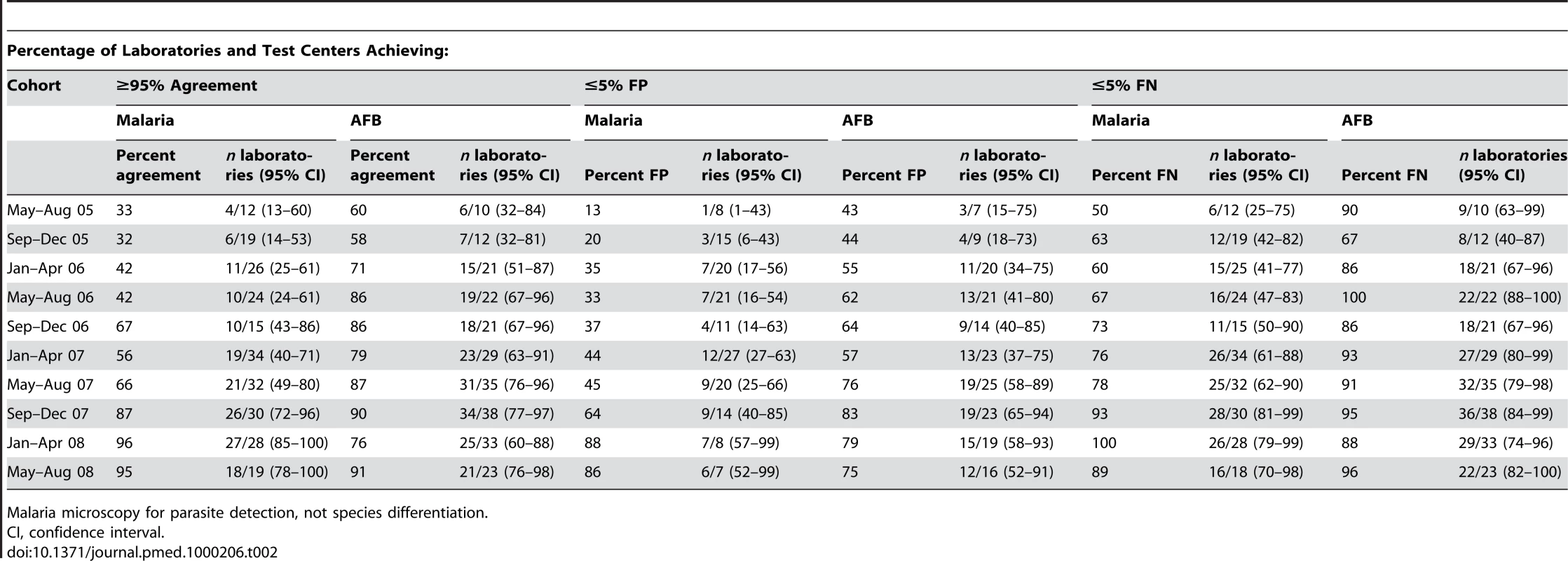

To improve statistical reliability, we only analyzed percent agreement on cohort data that included at least three monthly reports in the 4-month period, and FP and FN on cohort data that included at least ten positive or ten negative slides, respectively (Table 1). Fifty-seven laboratories met these criteria for malaria microscopy QC, and 54 for TB.
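The inclusion criteria can be expressed as a small eligibility check. This sketch reads the sentence literally: the three-report minimum gates percent agreement, and the ten-slide minimums gate FP and FN separately. Whether the report minimum also applied to FP/FN analysis is not stated, so that reading is an assumption. The `CohortCounts` record is hypothetical.

```python
from dataclasses import dataclass

MIN_REPORTS = 3  # monthly reports per 4-month cohort, for percent agreement
MIN_SLIDES = 10  # positive (FP) or negative (FN) slides per cohort


@dataclass
class CohortCounts:
    monthly_reports: int    # monthly QC reports received in the cohort
    positives_checked: int  # QC slides reported positive in the field
    negatives_checked: int  # QC slides reported negative in the field


def eligible_analyses(c: CohortCounts) -> set:
    """Return the indicators that may be analyzed for this cohort."""
    allowed = set()
    if c.monthly_reports >= MIN_REPORTS:
        allowed.add("percent_agreement")
    if c.positives_checked >= MIN_SLIDES:
        allowed.add("false_positive")
    if c.negatives_checked >= MIN_SLIDES:
        allowed.add("false_negative")
    return allowed
```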

Tab. 1. Laboratory QC data collection and analysis.

During the reported period, the internal MSF-OCA standards were set at ≥95% agreement for all slides (percent agreement) and ≤5% FP and FN slides.
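A minimal sketch of checking a cohort against these three standards. It assumes the FP frequency is the share of field-positive QC slides found negative on reexamination, and the FN frequency is the share of field-negative QC slides found positive. These denominators are an assumption; the text gives only the thresholds.

```python
AGREEMENT_TARGET = 95.0  # % agreement, at or above
FP_TARGET = 5.0          # % false positives, at or below
FN_TARGET = 5.0          # % false negatives, at or below


def fp_percent(positives_checked: int, found_negative: int) -> float:
    """FP frequency (%) among slides reported positive in the field (assumed definition)."""
    return 100.0 * found_negative / positives_checked


def fn_percent(negatives_checked: int, found_positive: int) -> float:
    """FN frequency (%) among slides reported negative in the field (assumed definition)."""
    return 100.0 * found_positive / negatives_checked


def check_targets(agreement: float, fp: float, fn: float) -> dict:
    """Compare cohort indicators with the internal MSF-OCA standards."""
    return {
        "agreement": agreement >= AGREEMENT_TARGET,
        "false_positive": fp <= FP_TARGET,
        "false_negative": fn <= FN_TARGET,
    }


# Hypothetical cohort: 20 positives (1 FP) and 20 negatives (2 FN); 37/40 agree.
print(check_targets(100.0 * 37 / 40, fp_percent(20, 1), fn_percent(20, 2)))
```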

Tests of difference between two proportions were performed using Pearson's chi-squared test. Analysis was performed using Epi Info 6 (US Centers for Disease Control) and STATA version 8.2 (StataCorp).
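For readers without Epi Info or STATA, the comparison can be sketched with the standard library. This computes the uncorrected Pearson chi-squared statistic for a 2×2 table and uses the fact that, for one degree of freedom, the upper-tail probability equals `erfc(sqrt(chi2 / 2))`. Whether the authors applied a continuity correction is not stated. However, the uncorrected test applied to the AFB counts given in the Findings (13/22 vs 46/56 for percent agreement, 7/16 vs 27/35 for FP) gives p values of about 0.033 and 0.019, matching the reported results.

```python
import math


def pearson_chi2_two_proportions(x1: int, n1: int, x2: int, n2: int):
    """Pearson chi-squared test (no continuity correction) comparing x1/n1 with x2/n2.

    Returns (chi2, p_value) for the 2x2 table with 1 degree of freedom.
    """
    a, b = x1, n1 - x1
    c, d = x2, n2 - x2
    n = n1 + n2
    col1, col2 = a + c, b + d
    if 0 in (n1, n2, col1, col2):
        raise ValueError("a row or column total is zero")
    chi2 = n * (a * d - b * c) ** 2 / (n1 * n2 * col1 * col2)
    # Chi-squared with 1 df is a squared standard normal: P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# AFB laboratories meeting the percent agreement target, May-Dec 2005 vs Jan-Aug 2008.
chi2, p = pearson_chi2_two_proportions(13, 22, 46, 56)
print(f"chi2 = {chi2:.2f}, p = {p:.3f}")
```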

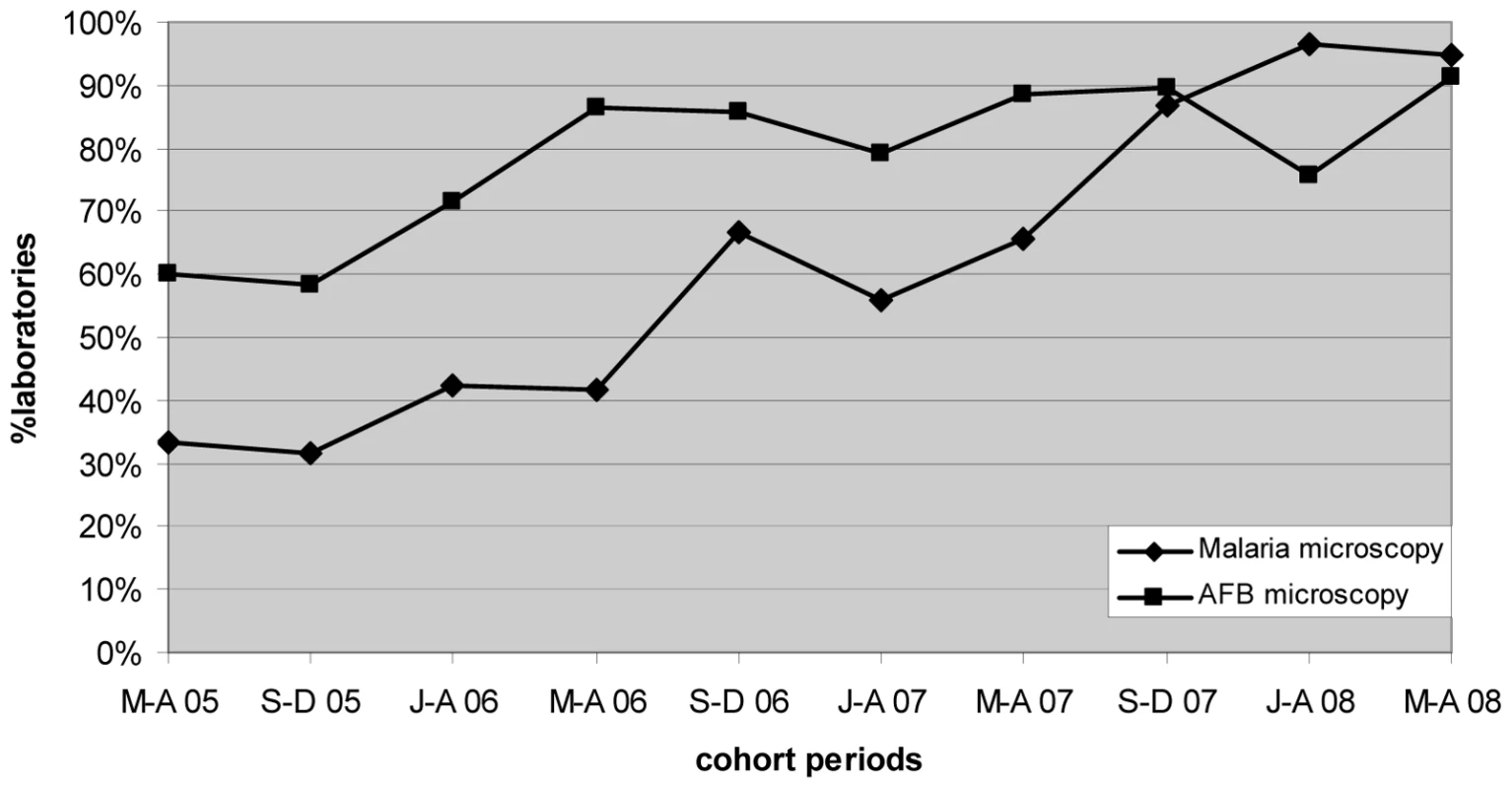

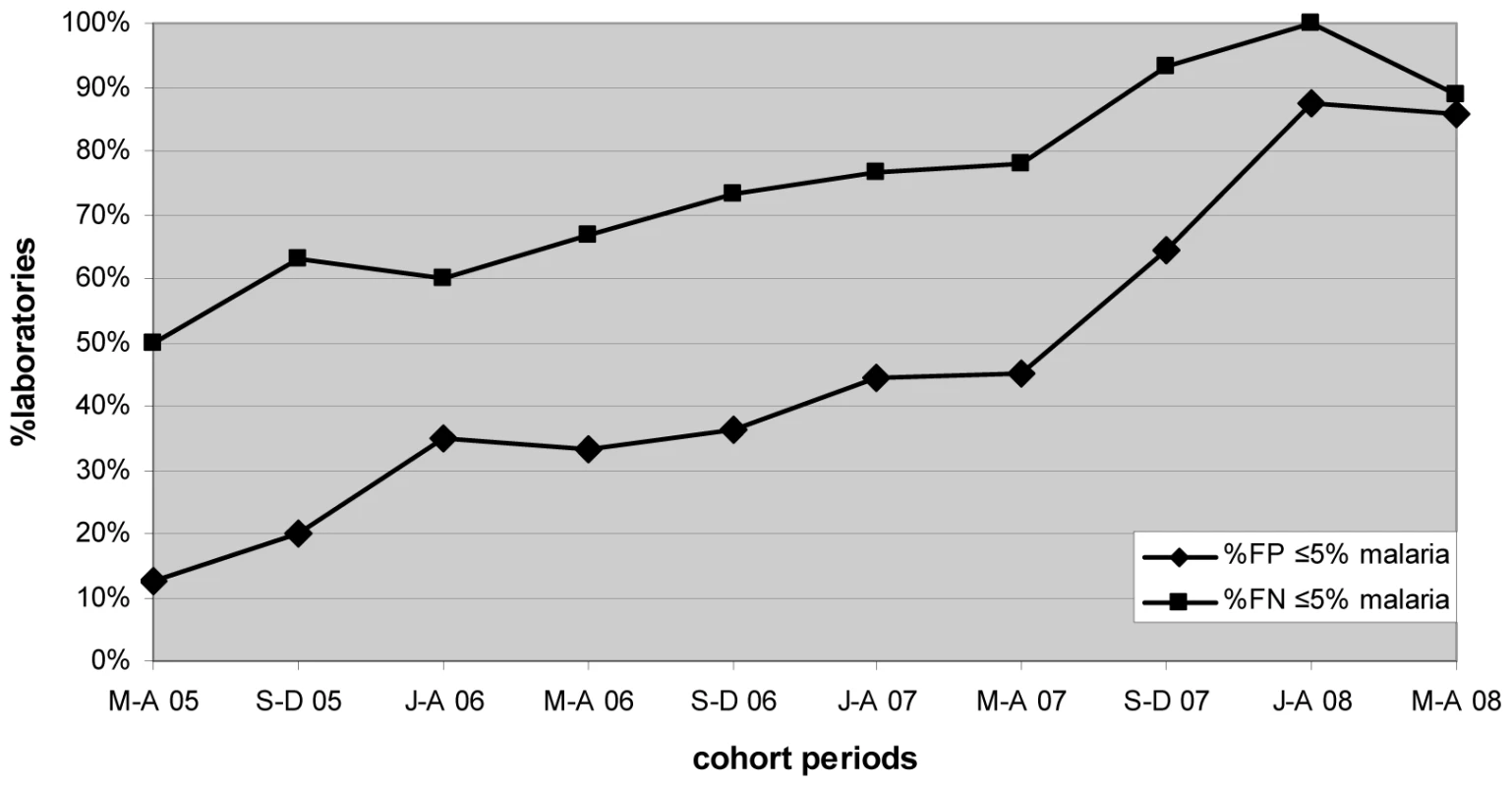

Malaria microscopy

Marked progressive improvement in the overall malaria microscopy QC performance was seen over the period (Figures 1 and 2; Table 2). At the commencement of the QC program for the period May–December 2005 (two cohorts), 32.3% (10/31), 17.4% (4/23), and 58.1% (18/31) of laboratories complied with the percent agreement, FP, and FN targets, respectively. By 2008, for the period January–August (two cohorts), there were significant improvements (p<0.001) in the proportion of laboratories meeting each QC target, with the results of 95.7% (45/47), 86.7% (13/15), and 91.3% (42/46), respectively.

Fig. 1. Percentage of laboratories and test centers achieving ≥95% agreement for malaria and AFB microscopy.

Fig. 2. Percentage of laboratories and test centers achieving ≤5% false-positive and false-negative results for malaria microscopy.

Tab. 2. Performance of malaria and AFB microscopy.

Malaria microscopy for parasite detection, not species differentiation.

AFB microscopy

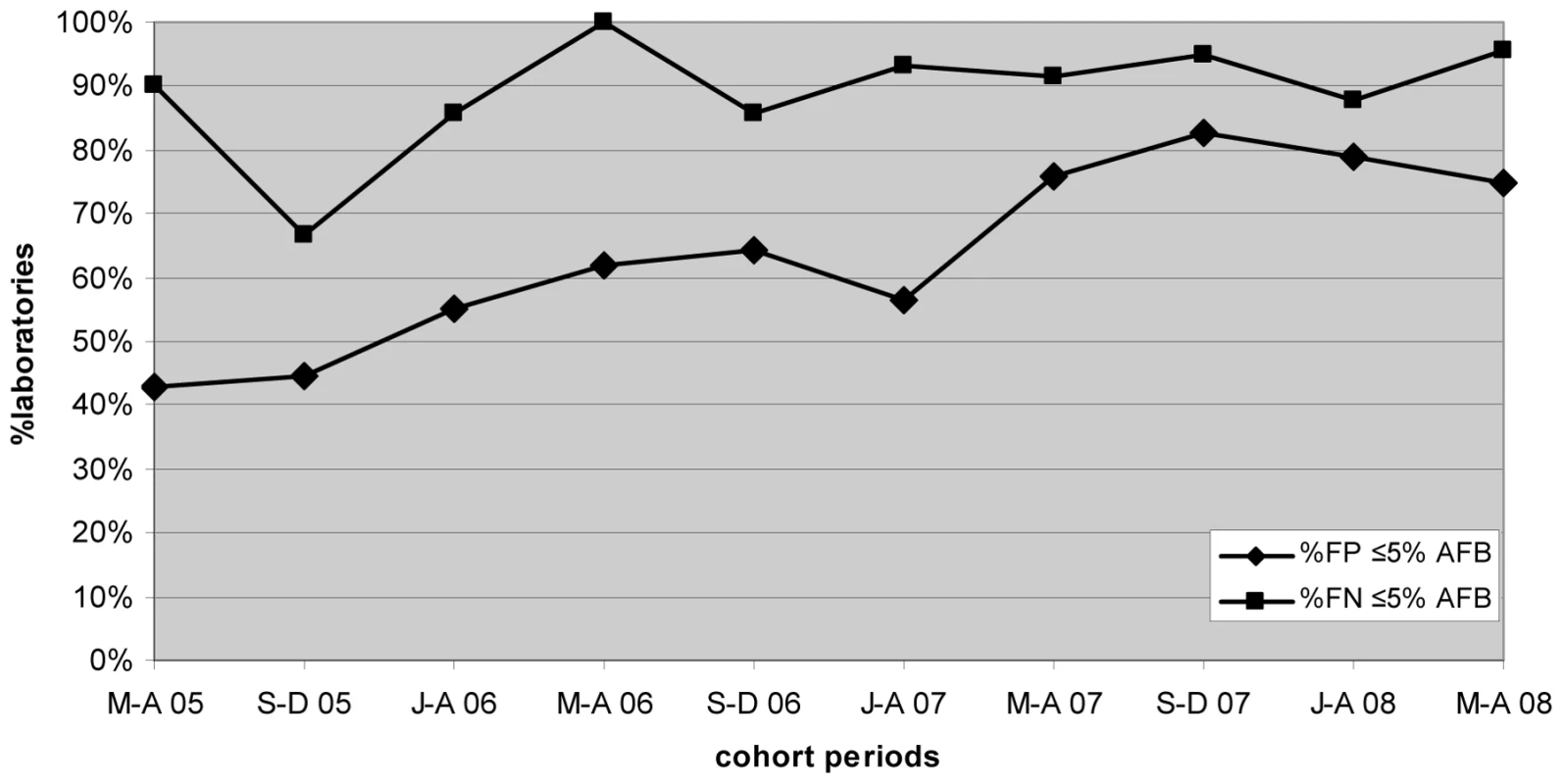

Progressive improvement in the overall AFB microscopy QC performance was seen over the period (Figures 1 and 3; Table 2). At the commencement of the QC program for the period May–December 2005 (two cohorts), 59.1% (13/22) and 43.8% (7/16) of laboratories complied with the percent agreement and FP targets, respectively. By 2008, for the period January–August (two cohorts), 82.1% (46/56; p = 0.033) and 77.1% (27/35; p = 0.019) of laboratories, respectively, met these targets. In contrast, the proportion of laboratories meeting the FN target remained relatively constant throughout the period (Figure 3), with no significant difference between the May–August 2005 and May–August 2008 cohorts (90% and 96%, respectively; p = 0.527).

Fig. 3. Percentage of laboratories and test centers achieving ≤5% false-positive and false-negative results for AFB microscopy.

Lessons Learned

We found the design of our QC protocol to be practical in field settings and easily understood and implemented by laboratory staff with limited training. We attribute this to a combination of a small QC sample size, a fixed number of slides independent of the workload, and use of simple percentage agreement for statistical analysis. The small sample size considerably decreased the QC workload while maintaining statistical reliability by using targeted sampling of only weakly positive slides and 4-month cohort analysis.

Our findings show a significant improvement in the accuracy of malaria and AFB microscopy between May–December 2005 and January–August 2008. We attribute this improvement to the strengthening of our protocols, field support, and training over this period. However, our QC protocol also played a central role by providing key information on a timely basis, which allowed us to prioritize those laboratory support activities. Also, and we believe critically, reporting the compiled data back to the field gave laboratories clear performance indicators and enabled them to compare their performance directly with that of other laboratories working in similar circumstances. In our experience, this generated an environment of positive “competition” among laboratories that we believe has also contributed significantly to the improvement in laboratory performance.

For malaria microscopy, the number of FP and FN results decreased markedly. We attribute this to active follow-up of poorly performing laboratories identified by the QC protocol. In contrast, the frequency of FN results for AFB microscopy did not change significantly, and the improvement in percentage agreement reflects the decrease in the frequency of FP results. Laboratories also entered the analysis period with higher performance for AFB microscopy than for malaria microscopy (59.1% of AFB cohorts achieving ≥95% percentage agreement for May–December 2005 compared with 32.3% for malaria). This may be because AFB microscopy is easier to perform than malaria microscopy, which requires greater microscope resolution and a technically more demanding staining procedure.

However, we also speculate that the random selection of negative AFB smears, which is the standard methodology for AFB QC protocols and is used in our protocol, may be problematic. Saliva smears are in general more likely than sputum smears to be negative or to have an AFB density below the threshold of microscopic detection [8],[9]. There is therefore less opportunity for QC to detect FN results by reexamining saliva slides, as they have a higher prior probability than sputum smears of being truly microscopically negative. With random selection, laboratories with a high proportion of saliva samples in routine practice will also have a high proportion of saliva slides in their QC sample, and the QC FN frequency for such laboratories may therefore be lower than their true FN frequency.
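The dilution effect can be sketched as a weighted average. Assume, hypothetically, that the FN frequency among negative sputum smears is `fn_sputum` and among negative saliva smears is `fn_saliva`, the latter being lower because saliva smears are more often truly negative. Random selection then yields an expected QC FN frequency weighted by the share of saliva slides. All numbers below are invented for illustration.

```python
def observed_fn_percent(saliva_share: float, fn_sputum: float, fn_saliva: float) -> float:
    """Expected FN frequency (%) in a randomly drawn negative QC sample.

    saliva_share: fraction of negative slides that are saliva smears.
    fn_sputum, fn_saliva: hypothetical true FN frequencies (%) for each smear type.
    Random selection weights each smear type by its share of negatives.
    """
    if not 0.0 <= saliva_share <= 1.0:
        raise ValueError("saliva_share must be between 0 and 1")
    return saliva_share * fn_saliva + (1.0 - saliva_share) * fn_sputum


# Hypothetical laboratory: 8% FN on sputum, 1% on saliva, 60% of negatives are saliva.
print(round(observed_fn_percent(0.6, 8.0, 1.0), 2))
```

With these made-up figures the QC FN frequency falls to 3.8%, inside a ≤5% target, even though the FN frequency among sputum smears is 8%; drawing negatives only from sputum smears would expose the full 8%.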

We are currently incorporating clerical error monitoring into our laboratory QC protocol, as clerical mistakes can also be a major source of error. With the increasing emphasis on disease eradication, we are also developing QC protocols to accommodate low positivity. Finally, we have implemented a pilot study to exclude saliva smears from the AFB QC sample.

Conclusion

From this recent field experience, our laboratory QC protocol was found to be well accepted and understood by all levels of field staff, practical in a wide variety of contexts, able to improve performance, and able to provide valuable program management information. As with all QC, implementation and sustainability require commitment from field staff and project managers. Ongoing supervision and support are critical for central monitoring, ensuring compliance, and regular feedback reporting. The implementation of this centralized-reporting, standardized QC program has provided the catalyst for MSF-OCA to develop a laboratory “culture of quality” over the past 3 years, which in turn has strengthened the commitment and interest of laboratory field staff to ensure the success of health care programs.

References

1. World Health Organization (2006) Informal consultation on quality control of malaria microscopy, March 2006. Accessed 4 December 2009. Available: http://www.who.int/malaria/docs/diagnosticsandtreatment/reportQua-mal-m.pdf

2. World Health Organization (2002) External quality assessment for AFB smear microscopy. PHL, CDC, IUATLD, KNCV, RIT, and WHO. Washington (D.C.). Accessed 4 December 2009. Available: http://wwwn.cdc.gov/dls/ILA/documents/eqa_afb.pdf

3. Martinez A, Balandrano S, Parissi A, Zuniga A, Sanchez M (2005) Evaluation of new external quality assessment guidelines involving random blinded rechecking of acid-fast bacilli smears in a pilot project setting in Mexico. Int J Tuberc Lung Dis 9: 301–305.

4. World Health Organization (2009) Malaria microscopy quality assurance manual, version 1. Accessed 4 December 2009. Available: http://www.wpro.who.int/NR/rdonlyres/D854E190-F1B2-41E4-93B4-EB3D1A0163E4/0/MalariaMicroscopyManualVer1EDITED0609.pdf

5. Stow NW, Torrens JK, Walker J (1999) An assessment of the accuracy of clinical diagnosis, local microscopy and a rapid immunochromatographic card test in comparison with expert microscopy in the diagnosis of malaria in rural Kenya. Trans R Soc Trop Med Hyg 93: 519–520.

6. Kilian AHD, Metzger WG, Mutschelknauss EJ, Kabagambe G, Langi P (2000) Reliability of malaria microscopy in epidemiological studies: results of quality control. Trop Med Int Health 5: 3–8.

7. Maguire JD, Lederman ER, Mazie BJ, Prudhomme O'Meara WA, Jordon RG (2006) Production and validation of durable, high quality standardized malaria microscopy slides for teaching, testing and quality assurance during an era of declining diagnostic proficiency. Malar J 5: 92.

8. World Health Organization (1998) Laboratory services in tuberculosis control. Part II: microscopy. Geneva. Accessed 4 December 2009. Available: http://wwwn.cdc.gov/dls/ILA/documents/lstc2.pdf

9. International Union Against Tuberculosis and Lung Disease (2000) Technical guide: sputum examination for tuberculosis by direct microscopy in low income countries. Paris. Accessed 4 December 2009. Available: http://www.theunion.org/index.php?option=com_guide&task=OpenDownload&id_download=59&id_guide=32&what=Guide%20Complet%20En
