Reporting Recommendations for Tumor Marker Prognostic Studies (REMARK): Explanation and Elaboration

Published in the journal: PLoS Med 9(5): e1001216. doi:10.1371/journal.pmed.1001216

Category: Guidelines and Guidance

doi: https://doi.org/10.1371/journal.pmed.1001216

Summary Points

- The REMARK (Reporting Recommendations for Tumor Marker Prognostic Studies) guideline includes a checklist which aims to improve the reporting of these types of studies.

- Here, we expand on the REMARK checklist to enhance its use and effectiveness through better understanding of the intent of each item and why the information is important to report.

- Each checklist item of the REMARK guideline is explained in detail and accompanied by published examples of good reporting.

- The paper provides a comprehensive overview to educate on good reporting and provide a valuable reference of issues to consider when designing, conducting, and analyzing tumor marker studies and prognostic studies in medicine in general.

Background

The purpose of this paper is to provide more complete explanations of each of the Reporting Recommendations for Tumor Marker Prognostic Studies (REMARK) checklist items and to provide specific examples of good reporting drawn from the published literature. The initial REMARK paper [1]–[7] recommended items that should be reported in all published tumor marker prognostic studies (Table 1). The recommendations were developed by a committee initially convened under the auspices of the National Cancer Institute and the European Organisation for Research and Treatment of Cancer. They were based on the rationale that more transparent and complete reporting of studies would enable others to better judge the usefulness of the data and to interpret the study results in the appropriate context. Similar explanation and elaboration papers had been written to accompany other reporting guidelines [8]–[11]. No changes to the REMARK checklist items are being suggested here. We hope that the current paper will serve an educational role and lead to more effective implementation of the REMARK recommendations, resulting in more consistent, high quality reporting of tumor marker studies.

Table 1. The REMARK checklist [1]–[7].

![The REMARK checklist [1]–[7].](https://www.prolekarniky.cz/media/cache/resolve/media_object_image_small/media/image/8df84d7aeefd244a423f350d0fb1fae7.png)

Note: we have changed ‘univariate’ to ‘univariable’ in item 15 for consistency with ‘multivariable’.

Our intent is to explain how to properly report prognostic marker research, not to specify how to perform the research. However, we believe that fundamental to an appreciation of the importance of good reporting is a basic understanding of how various factors such as specimen selection, marker assay methodology, and statistical study design and analysis can lead to different study results and interpretations. Many authors have discussed the fact that widespread methodological and reporting deficiencies plague the prognostic literature in cancer and other specialties [12]–[21]. Careful reporting of what was done and what results were obtained allows for better assessment of study quality and greater understanding of the relevance of the study conclusions. When available, we have cited published studies presenting empirical evidence of the quality of reporting of the information requested by the checklist items.

We recognize that tumor marker studies are generally collaborative efforts among researchers from a variety of disciplines. The current paper covers a wide range of topics and readers representing different disciplines may find certain parts of the paper more accessible than other parts. Nonetheless, it is helpful if all involved have a basic understanding of the collective obligations of the study team.

We have attempted to minimize distractions from more highly technical material by the use of boxes with supplementary information. The boxes are intended to help readers refresh their memories about some theoretical points or be quickly informed about technical background details. A full understanding of these points may require studying the cited references.

We aimed to provide a comprehensive overview that not only educates on good reporting but provides a valuable reference for the many issues to consider when designing, conducting and analyzing tumor marker studies. Each item is accompanied by one or more examples of good reporting drawn from the published literature. We hope that readers will find the paper useful not only when they are reporting their studies but also when they are planning their studies and analyzing their study data.

This paper is structured as the original checklist, according to the typical sections of scientific reports: Introduction, Materials and Methods, Results, and Discussion. There are numerous instances of cross-referencing between sections reflecting the fact that the sections are interrelated; for example, one must speak about the analysis methods used in order to discuss presentation of results obtained using those methods. These cross-references do not represent redundancies in the material presented and readers are reminded that distinctions in focus and emphasis between different items will sometimes be subtle.

One suggestion in the REMARK checklist is to include a diagram showing the flow of patients through the study (see Item 12). We elaborate upon that idea in the current paper. The flow diagram is an important element of the Consolidated Standards of Reporting Trials (CONSORT) Statement, which was developed to improve reporting of randomized controlled trials (RCTs) [8],[22],[23]. Many papers reporting randomized trial results present a flow diagram showing numbers of patients registered and randomized, numbers of patients excluded or lost to follow-up by treatment arms, and numbers analyzed. Flow diagrams are also recommended in the Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) Statement for reporting observational studies, including cohort studies [9]. A diagram would indeed be useful for prognostic studies to clarify the numbers and characteristics of patients included at each stage of the study. There are additional key aspects of prognostic studies that need to be reported and would benefit from standardized presentation. Accordingly we have developed a ‘REMARK profile’ as a proposed format for describing succinctly key aspects of the design and analysis of a prognostic marker study; we discuss the profile in detail in Item 12 below.

The original scope of the REMARK recommendations focused on studies of prognostic tumor markers that reported measurement of biological molecules found in tissues, blood, and other body fluids. The recommendations also apply more generally to prognostic factors other than biological molecules that are often assessed in cancer patients, including the size of the tumor, abnormal features of the cells, the presence of tumor cells in regional lymph nodes, age, and gender among others. Prognostic research includes study of the wide variety of indicators that help clinicians predict the course of a patient's disease in the context of standard care. REMARK generally applies to any studies involving prognostic factors, whether those prognostic factors are biological markers, imaging assessments, clinical assessments, or measures of functional status in activities of daily living. REMARK applies to other diseases in addition to cancer. The processes of measuring and reporting the prognostic factors may differ, but the same study reporting principles apply.

We suggest that most of the recommendations also apply to studies looking at the usefulness of a marker for the prediction of benefit from therapy (typically called a predictive marker in oncology). Traditionally, predictive markers are evaluated by determination of whether the benefit of the treatment of interest compared to another standard treatment depends on the marker status or value. (See also Items 3 and 9 and Box 1.) A logical corollary to such a finding is that the prognostic value of that marker depends on the treatment the patient receives; for this reason, some view predictive markers as a special class of prognostic markers. Consequently, REMARK items apply to many aspects of these studies. In the explanations that follow for each of the checklist items, we attempted to make note of some special considerations for studies evaluating predictive markers. We hope that authors who report predictive marker studies will therefore find our recommendations useful. As predictive markers are usually evaluated in randomized trials, CONSORT [8] will also apply to reporting of predictive marker studies.

Box 1. Subgroups and Interactions: The Analysis of Joint Effects

It is often of interest to consider whether the effect of a marker differs in relation to a baseline variable, which may be categorical or continuous. Categorical variables, such as stage of disease, naturally define subgroups and continuous variables are often categorized by using one or more cutpoints. Investigating whether the marker effect is different (modified) in subgroups is popular. Epidemiologists speak about effect modification; more generally this phenomenon refers to the interaction between two variables.

In the context of randomized trials, one of these variables is the treatment and the other variable defines subgroups of the population. Here the interaction between treatment and the marker indicates whether the marker is predictive of treatment effect (that is, a predictive marker) [185]. This analysis is easiest for a binary marker. Subgroup analyses are often conducted. The interpretation of their results depends critically on whether the subgroup analyses were pre-specified or conducted post hoc based on results seen in the data. Subgroup differences are far more convincing when such an effect had been postulated; unanticipated significant effects are more likely to be chance findings and should be interpreted as being interesting hypotheses needing confirmation from similar trials. The same principles apply to consideration of subgroups in prognostic marker studies.

Subgroup analyses need to be done properly and interpreted cautiously. It is common practice to calculate separate P values for the prognostic effect of the marker in separate subgroups, often followed by an erroneous judgment that the marker has an effect in one subgroup but not in the other. However, a significant effect in one group and a non-significant effect in the other is not sound evidence that the effect of the marker differs by subgroup [186],[187]. Instead, a single test of interaction is required to rigorously assess whether effects are different in subgroups [188]. Interactions between two variables are usually investigated by testing the multiplicative term for significance (for example, in a Cox model). In many studies the sample size is too small to allow the detection of other than very large (and arguably implausible) interaction effects [189]. If the test of interaction is significant, then further evaluation may be required to determine the nature of the interaction, particularly whether it is qualitative (effects in opposite directions) or quantitative (effects in same direction but differing in magnitude). Because of the risk of false positive findings, replication is critical [190].
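The point that separate subgroup P values do not substitute for a single test of interaction can be illustrated numerically. The following is a minimal sketch, assuming two independent subgroup estimates of a log hazard ratio with known standard errors. All numbers are hypothetical, and the z-test on the difference between the two estimates is a simplified stand-in for testing the multiplicative term in a Cox model, not the analysis used in any cited study.

```python
import math


def two_sided_p(z: float) -> float:
    """Two-sided P value for a standard normal test statistic."""
    return math.erfc(abs(z) / math.sqrt(2))


def interaction_test(log_hr_a: float, se_a: float,
                     log_hr_b: float, se_b: float) -> tuple[float, float]:
    """Single z-test of whether two independent subgroup effects differ."""
    diff = log_hr_a - log_hr_b
    se_diff = math.sqrt(se_a ** 2 + se_b ** 2)  # independence assumed
    z = diff / se_diff
    return z, two_sided_p(z)


# Hypothetical subgroup estimates (log hazard ratio, standard error).
log_hr_a, se_a = 0.50, 0.20   # subgroup A
log_hr_b, se_b = 0.30, 0.25   # subgroup B

# Separate P values within each subgroup (the practice criticized above).
p_a = two_sided_p(log_hr_a / se_a)
p_b = two_sided_p(log_hr_b / se_b)

# One test of interaction comparing the two effects directly.
z_int, p_int = interaction_test(log_hr_a, se_a, log_hr_b, se_b)

print(f"Subgroup A: P = {p_a:.3f}")
print(f"Subgroup B: P = {p_b:.3f}")
print(f"Interaction: z = {z_int:.2f}, P = {p_int:.3f}")
```

With these hypothetical inputs the marker effect is nominally significant in subgroup A and non-significant in subgroup B, yet the single interaction test gives no evidence that the two effects differ.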

For continuous variables, categorization is a popular approach, but it has many disadvantages: the results depend on the chosen cutpoints (see Item 11 and Box 4), and it reduces the power to detect associations between marker variables and outcome [191]. The multivariable fractional polynomial interaction approach is an alternative that uses full information from the data and avoids specification of cutpoints. It allows investigation of interactions between a binary and a continuous variable, with or without adjustment for other variables [191],[192].

Another approach to assess the effect of treatment in relation to a continuous variable is the Subpopulation Treatment Effect Pattern Plot [193].

Both approaches were developed in the context of randomized trials, but they readily apply to observational prognostic studies investigating the interaction of a continuous marker with a binary or a categorical variable such as sex or stage [110],[194].
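The loss of information caused by categorizing a continuous marker, noted in Box 1, can be illustrated with a small simulation. This is a minimal sketch under simplifying assumptions: the marker and the outcome are simulated normal variables with a hypothetical linear relationship (slope 0.3), a continuous outcome stands in for a survival outcome, and the Pearson correlation is used as a rough proxy for strength of association. It does not implement the multivariable fractional polynomial interaction approach or the Subpopulation Treatment Effect Pattern Plot.

```python
import math
import random
import statistics


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length lists."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def dichotomize(values: list[float], cutpoint: float) -> list[float]:
    """Code the marker as 1.0 above the cutpoint and 0.0 otherwise."""
    return [1.0 if v > cutpoint else 0.0 for v in values]


# Simulated data; the seed, sample size, and slope are arbitrary choices.
rng = random.Random(2012)
n = 5000
marker = [rng.gauss(0.0, 1.0) for _ in range(n)]
outcome = [0.3 * m + rng.gauss(0.0, 1.0) for m in marker]

# Association using the full continuous marker.
r_continuous = pearson(marker, outcome)

# Association after dichotomizing at three hypothetical cutpoints.
r_by_cutpoint = {
    c: pearson(dichotomize(marker, c), outcome) for c in (-1.0, 0.0, 1.0)
}

print(f"continuous marker: r = {r_continuous:.3f}")
for cutpoint, r in r_by_cutpoint.items():
    print(f"cutpoint {cutpoint:+.1f}: r = {r:.3f}")
```

In this simulation the dichotomized marker shows a weaker association with the outcome than the continuous marker at each cutpoint tried, and the apparent strength of the association varies with the cutpoint chosen.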

Although REMARK was primarily aimed at the reporting of studies that have evaluated the prognostic value of a single marker, the recommendations are substantially relevant to studies investigating more than one marker, including studies investigating complex markers that are composed of a few to many components, such as multivariable classification functions or indices, or are based on prognostic decision algorithms. These reporting recommendations do not attempt to address reporting of all aspects of the development or validation of these complex markers, but several key elements of REMARK do also apply to these developmental studies. Moreover, once these complex markers are fully defined, their evaluation in clinical studies is entirely within the scope of REMARK.

The development of prognostic markers generally involves a series of studies. These begin with identification of a relationship between a biological feature (for example, proliferative index or genetic alteration) and a clinical characteristic or outcome. To establish a clear and possibly causal relationship, a series of studies are conducted to address increasingly demanding hypotheses. The REMARK recommendations attempt to recognize these stages of development. For example, the discussion of Item 9 acknowledges that sample size determination may not be under the investigator's control but recommends that authors make clear whether there was a calculated sample size or, if not, consider the impact of the sample size on the reliability of the findings or precision of estimated effects. We anticipate that more details will be available in later stage studies, but many of the recommendations are also applicable to earlier stage studies. When specific items of information recommended by REMARK are not available, these situations should be fully acknowledged in the report so that readers may judge in context whether these missing elements are critical to study interpretation. Adherence to these reporting recommendations as much as possible will permit critical evaluation of the full body of evidence supporting a marker.

Checklist Items

Discussion and explanation of the 20 items in the REMARK checklist (Table 1) are presented. For clarity we have split the discussion of a few items into multiple parts. Each explanation is preceded by examples from the published literature that illustrate types of information that are appropriate to address the item. Our use of an example from a study does not imply that all aspects of the study were well reported or appropriately conducted. The example suggests only that this particular item, or a relevant part of it, was well reported in that study. Some of the quoted examples have been edited by removing citations or spelling out abbreviations, and some tables have been simplified.

Each checklist item should be addressed somewhere in a report even if it can only be addressed by an acknowledgment that the information is unknown. We do not prescribe a precise location or order of presentation as this may be dependent upon journal policies and is best left to the discretion of the authors of the report. We recognize that authors may address several items in a single section of text or in a table. In the current paper, we address reporting of results under a number of separate items to allow us to explain them clearly and provide examples, not to prescribe a heading or location. Authors may find it convenient to report some of the requested items in a supplementary material section, for example on a journal website, rather than in the body of the manuscript, to allow sufficient space for adequate detail to be provided. One strategy that has been used successfully is to provide the information in a supplementary table organized according to the order of the REMARK items [24]. The elements of the supplementary table may either provide the information directly in succinct form or point the reader to the relevant section of the main paper where the information can be found. Authors wishing to supply such a supplementary table with their paper may find it helpful to use the REMARK reporting template that is supplied as Text S1; it can also be downloaded from http://www.equator-network.org/resource-centre/library-of-health-research-reporting/reporting-guidelines/remark.
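A supplementary table organized by REMARK item, as described above, could be assembled along the following lines. This is only a sketch: the item wording is taken from Items 1 to 4 as quoted in this paper, while the manuscript locations, the function name `build_rows`, and the fallback wording are hypothetical. It is not the REMARK reporting template supplied as Text S1.

```python
# Wording of the first four checklist items, as quoted in this paper.
ITEMS = {
    "1": "State the marker examined, the study objectives, and any "
         "pre-specified hypotheses.",
    "2": "Describe the characteristics (for example, disease stage or "
         "co-morbidities) of the study patients, including their source "
         "and inclusion and exclusion criteria.",
    "3": "Describe treatments received and how chosen (for example, "
         "randomized or rule-based).",
    "4": "Describe type of biological material used (including control "
         "samples) and preservation and storage methods.",
}

# Hypothetical fallback text for items that cannot be addressed.
UNKNOWN = "Information unknown"


def build_rows(locations: dict[str, str]) -> list[tuple[str, str, str]]:
    """Return (item, wording, location) rows in checklist order.

    Items without a reported location are still listed, with an explicit
    acknowledgment that the information is unknown.
    """
    return [
        (item, wording, locations.get(item, UNKNOWN))
        for item, wording in ITEMS.items()
    ]


# Hypothetical manuscript locations for three of the four items.
rows = build_rows({
    "1": "Introduction, final paragraph",
    "2": "Methods, 'Patients'; Supplementary Table S2",
    "3": "Methods, 'Treatment'",
})

for item, wording, location in rows:
    print(f"Item {item}: {location}")
```

Listing every item, including those that can only be answered with an acknowledgment that the information is unknown, keeps the table aligned with the checklist order.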

Introduction

Item 1

State the marker examined, the study objectives, and any pre-specified hypotheses.

Examples

Marker examined:

‘Using the same cohort of patients, we investigated the relationship between the type, density, and location of immune cells within tumors and the clinical outcome of the patients.’ [25]

Objectives:

‘The purpose of this study was to determine whether CpG island hypermethylation in the promoter region of the APC gene occurs in primary esophageal carcinomas and premalignant lesions, whether freely circulating hypermethylated APC DNA is detectable in the plasma of these patients, and whether the presence and quantity of hypermethylated APC in the plasma have any relationship with outcome.’ [26]

‘The goal of this study was to develop a sensitive and specific method for CTC [circulating tumor cell] detection in HER-2-positive breast cancer, and to validate its ability to track disease response and progression during therapy.’ [27]

Hypotheses:

‘The prespecified hypotheses tested were that TS expression level and p53 expression status are markers of overall survival (OS) in potentially curatively resected CRC.’ [28]

Explanation

Clear indication of the particular markers to be examined, the study objectives, and any pre-specified hypotheses should be provided early in the study report. Objectives are goals one hopes to accomplish by conducting the study. Typical objectives for tumor marker prognostic studies include, among others, an evaluation of the association between tumor marker value and clinical outcome, or determination of whether a tumor marker contributes additional information about likely clinical outcome beyond the information provided by standard clinical or pathologic factors.

The description of the marker should include both the biological aspects of the marker as well as the time in a patient's clinical course when it is to be assessed. The biological aspects should include the type of molecule or structure examined (for example, protein, RNA, DNA, or chromosomes) and the features assessed (for example, expression level, copy number, mutation, or translocation). Most prognostic marker studies are performed on specimens obtained at the time of initial diagnosis. The marker could also be assessed on specimens collected at completion of an initial course of therapy (for example, detection of minimal residual disease or circulating tumor cells to predict recurrence or progression) or at the time of recurrence or progression. A thorough description of the marker and timing of specimen collection is necessary for an understanding of the biological rationale and potential clinical application.

The stated objectives often lead to the development of specific hypotheses. Hypotheses should be formulated in terms of measures that are amenable to statistical evaluation. They represent tentative assumptions that can be supported or refuted by the results of the study. An example of a hypothesis is ‘high expression levels of the protein measured in the tumor at the time of diagnosis are associated with shorter disease-free survival’.

Pre-specified hypotheses are those that are based on prior research or an understanding of a biological mechanism, and they are stated before the study is initiated. Ideally, a systematic review of the literature should have been performed. New hypotheses may be suggested by inspection of data generated in the study. Analyses performed to address the new hypotheses are exploratory and should be reported as such. The distinction between analysis of the pre-specified hypotheses and exploratory analyses is important because it affects the interpretation (see Item 19) [9].

Materials and Methods

Patients

Item 2

Describe the characteristics (for example, disease stage or co-morbidities) of the study patients, including their source and inclusion and exclusion criteria.

Examples

‘Inclusion criteria for the 2810 patients from whom tumour or cytosol samples were stored in our tumour bank (liquid nitrogen) were: primary diagnosis of breast cancer between 1978 and 1992 (at least 5 years of potential follow-up); no metastatic disease at diagnosis; no previous diagnosis of carcinoma, with the exception of basal cell skin carcinoma and cervical cancer stage I; no evidence of disease within 1 month of primary surgery … Patients with inoperable T4 tumours and patients who received neoadjuvant treatment before primary surgery were excluded.’ [29]

‘We studied 196 adults who were younger than 60 years and who had untreated primary CN-AML. The diagnosis of CN-AML was based on standard cytogenetic analysis that was performed by CALGB-approved institutional cytogenetic laboratories as part of the cytogenetic companion study 8461. To be considered cytogenetically normal, at least 20 metaphase cells from diagnostic bone marrow (BM) had to be evaluated, and the karyotype had to be found normal in each patient. All cytogenetic results were confirmed by central karyotype review. All patients were enrolled on two similar CALGB treatment protocols (i.e., 9621 or 19808).’ [30]

‘These analyses were conducted within the context of a completed clinical trial for breast cancer (S8897), which was led by SWOG within the North American Breast Cancer Intergroup (INT0102) … Complete details of S8897 have been reported elsewhere [citation].’ [31]

Relevant text in the reference cited by Choi et al. [31]: ‘Patients were registered from the Southwest Oncology Group, Eastern Cooperative Oncology Group, and Cancer and Leukemia Group B … Eligible patients included premenopausal and postmenopausal women with T1 to T3a node negative invasive adenocarcinoma of the breast.’ [32]

Explanation

Each prognostic factor study includes data from patients drawn from a specific population. A description of that population is needed to place the study in a clinical context. The source of the patients should be specified, for example from a clinical trial population, a healthcare system, a clinical practice, or all hospitals in a certain geographic area.

Patient eligibility criteria, usually based on clinical or pathologic characteristics, should be clearly stated. As a minimum, eligibility criteria should specify the site and stage of cancer of the cases to be studied. Stage is particularly important because many tumor markers have prognostic value in early stage disease but not in advanced stage disease. For example, if a marker is indicative of metastatic potential, it may have strong prognostic value in patients with early stage disease but be less informative for patients who already have advanced or metastatic disease. For this reason, many studies are restricted to certain stages. Additional selection criteria may relate to factors such as patient age, treatment received (see Item 3), or the histologic type of cancer.

Exclusion criteria might be factors such as prior cancer, prior systemic treatment for cancer, nonstandard treatment (for example, rarely used, non-approved or ‘off-label’ use of a therapy), failure to obtain informed consent, insufficient tumor specimen, or a high proportion of missing critical clinical or pathologic data. It is generally not appropriate to exclude a case just because it has a few missing data elements if those data elements are not critical for assessment of primary inclusion or exclusion criteria (see Item 6a) [33]. In some studies, deaths that have occurred very early after the initiation of follow-up are excluded. If this is done, the rationale and timeframe for exclusion should be specified. To the extent possible, exclusion criteria should be specified prior to initiation of the study to avoid potential bias introduced by exclusions that could be partly motivated by intermediate analysis results.

When a prognostic study is performed using a subset of cases from a prior ‘parent’ study (for example, from a RCT or a large observational study cohort), there may be a prior publication or other publicly available document such as a study protocol that lists detailed eligibility and inclusion and exclusion criteria for the parent study. In these cases, the prior document can be referenced rather than repeating all of the details in the prognostic study paper. However, it is preferable that at least the major criteria (for example, the site and stage of the cancer) for the parent study still be mentioned in the prognostic study paper, and it is essential that any additional criteria imposed specifically for the prognostic study (such as availability of adequate specimens) be stated in the prognostic study paper.

Specification of inclusion and exclusion criteria can be especially challenging when the study is conducted retrospectively. The real population that the cases represent is often unclear if the starting point is all cases with accessible medical records or all cases with specimens included in a tumor bank. A review of 96 prognostic studies found that 40 had the availability of tumor specimens or data as an inclusion criterion [33]. In some studies, unknown characteristics may have governed whether cases were represented in the medical record system or tumor bank, making it impossible to specify exact inclusion and exclusion criteria. If the specimen set was assembled primarily on the basis of ready availability (that is, a ‘convenience’ sample), this should be acknowledged.

A flow diagram is very useful for succinctly describing the characteristics of the study patients. The entrance point to the flow diagram is the source of patients and successive steps in the diagram can represent inclusion and exclusion criteria. Some of the information from this diagram can also be given in the upper part of the REMARK profile (see Item 12 for examples).
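The counts shown at each step of such a flow diagram can be derived by applying the exclusion criteria in a fixed, pre-specified order. The following is a minimal sketch, assuming a hypothetical patient record layout and three illustrative exclusion criteria; none of the field names, criteria, or counts come from a real study.

```python
from dataclasses import dataclass
from typing import Callable

Patient = dict[str, object]


@dataclass
class Exclusion:
    """One exclusion criterion: a label and a rule flagging excluded patients."""
    reason: str
    applies: Callable[[Patient], bool]


def apply_exclusions(
    patients: list[Patient], exclusions: list[Exclusion]
) -> tuple[list[Patient], list[tuple[str, int, int]]]:
    """Apply criteria in order.

    Returns the remaining patients and, for each criterion, a tuple of
    (reason, number excluded at this step, number remaining afterwards).
    """
    remaining = list(patients)
    flow = []
    for rule in exclusions:
        kept = [p for p in remaining if not rule.applies(p)]
        flow.append((rule.reason, len(remaining) - len(kept), len(kept)))
        remaining = kept
    return remaining, flow


# Hypothetical source population of six patients.
patients = [
    {"id": 1, "metastatic": False, "neoadjuvant": False, "specimen_ok": True},
    {"id": 2, "metastatic": True, "neoadjuvant": False, "specimen_ok": True},
    {"id": 3, "metastatic": False, "neoadjuvant": True, "specimen_ok": True},
    {"id": 4, "metastatic": False, "neoadjuvant": False, "specimen_ok": False},
    {"id": 5, "metastatic": False, "neoadjuvant": False, "specimen_ok": True},
    {"id": 6, "metastatic": True, "neoadjuvant": True, "specimen_ok": False},
]

# Hypothetical exclusion criteria, listed in the order they are applied.
exclusions = [
    Exclusion("Metastatic disease at diagnosis", lambda p: bool(p["metastatic"])),
    Exclusion("Neoadjuvant treatment before surgery", lambda p: bool(p["neoadjuvant"])),
    Exclusion("Insufficient tumor specimen", lambda p: not p["specimen_ok"]),
]

analyzed, flow = apply_exclusions(patients, exclusions)

print(f"Source population: n = {len(patients)}")
for reason, n_excluded, n_remaining in flow:
    print(f"  Excluded ({reason}): {n_excluded}; remaining: {n_remaining}")
print(f"Included in analysis: n = {len(analyzed)}")
```

Because a patient who meets several criteria is counted only at the first one applied, the order of the criteria affects the per-step counts and should be reported along with them.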

After the study population has been defined, it is important to describe how the specific cases included in the study were sampled from that population. Item 6a discusses reporting of case selection methods.

Item 3

Describe treatments received and how chosen (for example, randomized or rule-based).

Examples

‘Patients were treated with surgery by either modified radical mastectomy (637 cases) or local tumour resection (683 cases), with axillary node dissection followed by postoperative breast irradiation (695 cases). Adjuvant therapy with chemotherapy and/or hormone therapy was decided according to nodal status and hormone receptor results. Treatment protocols varied over time. From 1975 to 1985, node-negative patients had no chemotherapy. After 1985, node-negative patients under 50 years of age, with ER and PR negative and SBR [Scarff-Bloom-Richardson] grade 3 tumours, had chemotherapy.’ [34]

‘Details of the treatment protocols have been previously reported. Briefly, patients on CALGB 9621 received induction chemotherapy with cytarabine, daunorubicin, and etoposide with (ADEP) or without (ADE) the multidrug resistance protein modulator PSC-833, also called valspodar. Patients who had CN-AML and who achieved a CR received high-dose cytarabine (HiDAC) and etoposide for stem-cell mobilization followed by myeloablative treatment with busulfan and etoposide supported by APBSCT. Patients unable to receive APBSCT received two additional cycles of Hi-DAC. Patients enrolled on CALGB 19808 were treated similarly to those on CALGB 9621. None of the patients received allogeneic stem-cell transplantation in first remission.’ [30]

Explanation

A patient's disease-related clinical outcome is determined by a combination of the inherent biological aggressiveness of a patient's tumor and the response to any therapies received. The influence of biological characteristics on disease outcome would ideally be assessed in patients who received no treatment, but usually most patients will have received some therapy. Many patients with solid tumors will receive local-regional therapy (for example, surgery and possibly radiotherapy). For some types and stages of cancer, patients would almost always receive systemic therapy (for example, chemotherapy or endocrine therapy). Sometimes all patients included in a study will have received a standardized therapy, but more often there will be a mix of treatments that patients have received. The varied treatments that patients might receive in standard care settings can make study of prognostic markers especially challenging.

Because different treatments might alter the disease course in different ways, it is important to report what treatments the patients received. The impact of a treatment might also depend on the biological characteristics of the tumor. This is the essence of predictive marker research where the goal is to identify the treatment that leads to the best clinical outcome for each biological class of tumor (for example, defined by markers) (see Box 1).

The basis for treatment selection, if known, should be reported. If not known, as will often be the case for retrospective specimen collections, one must be cautious in interpreting prognostic and predictive analyses. This concern derives from the possibility that the value of the marker or patient characteristics associated with the marker played a role in the choice of therapy, thereby leading to a potential confounding of effects of treatment and marker. If sufficient numbers of patients are treated with certain therapies, assessment of the prognostic value of the marker separately by treatment group (see Box 1) could be considered. However, predictive markers should generally be evaluated in randomized clinical trials to ensure that the choice of treatment was not influenced by the marker or other biological characteristics of the tumor.

It is also important to report the timing of therapy relative to specimen collection, since the biological characteristics of a tumor may be altered by therapies to which it was exposed before specimen collection (see Item 4). The prognostic value of a marker may differ depending on whether it was present in the tumor at the time of initial diagnosis, was present only after the patient received therapy, or is accompanied by other biological characteristics that emerged as a consequence of therapy.

Specimen Characteristics

Item 4

Describe type of biological material used (including control samples) and preservation and storage methods.

Examples

Positive and negative controls:

‘Tumor specimens were obtained at the time of surgery and snap frozen in liquid nitrogen, then stored at −80°C. Blood samples were collected 24 hours or less before surgery by peripheral venous puncture and were centrifuged at 1500×g at 4°C for 10 minutes. The separated plasma was aliquoted and stored at −80°C for future analysis. Normal endometrial tissue specimens were obtained from patients undergoing hysterectomy for benign gynecologic pathologies. Control plasma specimens were derived from health check examinees at Yongdong Severance Hospital who showed no history of cancer or gynecologic disease and had no abnormalities in laboratory examinations or gynecologic sonography.’ [35]

Preservation and storage methods:

‘Fixation of tumor specimens followed standard protocols, using either 10% nonbuffered or 10% buffered formalin for 12 hours. Storage time of the archival samples was up to 15 years. Of the 57 independent MCL cases, 42 tumors had amplifiable cDNA.’ [36]

‘Tissue samples were fixed in 10% buffered formalin for 24 h, dehydrated in 70% EtOH and paraffin embedded. Five micrometer sections were cut using a cryostat (Leica Microsystems, UK) and mounted onto a histological glass slide. Ffpe [formalin-fixed, paraffin-embedded] tissue sections were stored at room temperature until further analysis.’ [37]

Explanation

Most tumor marker prognostic studies have focused on one or more of the following types of specimens: tumor tissue (formalin fixed and paraffin-embedded or frozen); tumor cells or tumor DNA isolated from blood, bone marrow, urine, or sputum; serum; or plasma. Authors should report what types of specimens were used for the marker assays. As much information about the source of the specimen as possible should be included, for example, whether a tumor sample was obtained at the time of definitive surgery or from a biopsy procedure such as core needle biopsy or fine needle aspirate. For patients with advanced disease, it should be clearly stated whether tumor samples assayed came from the primary tumor site (perhaps collected years earlier at the time of an original diagnosis of early stage disease) or from a current metastatic lesion and whether the patient had been exposed to any prior cancer-directed therapies (see Item 3).

Much has been written about the potential confounding effects of pre-analytical handling of specimens, and several organizations have recently published articles addressing best practices for specimen handling [38]–[40]. Although the way specimens are collected is often not under the control of investigators studying prognostic markers, it is important to report as much as possible about the types of biological materials used in the study and the way these materials were collected, processed, and stored. The time of specimen collection will often not coincide with the time when the marker assay is performed, as it is common for marker assays to be performed after the specimens have been stored for some period of time. It is important to state how, and for how long, the specimens had been stored prior to performing the marker assay.

The Biospecimen Reporting for Improved Study Quality (BRISQ) guidelines provide comprehensive recommendations for what information should be reported regarding specimen characteristics and methods of specimen processing and handling when publishing research involving the use of biospecimens [41]. It is understood that reporting extensive detail is difficult if not impossible, especially when retrospective collections are used. In recognition of these difficulties, the BRISQ guidelines are presented in three tiers, according to the relative importance and feasibility of reporting certain types of biospecimen information.

Criteria for acceptability of biospecimens for use in marker studies should be established prior to initiating the study. Depending on the type of specimen and particular assay to be performed, criteria could be based on metrics such as percentage tumor cellularity, RNA integrity number, percentage viable cells, or hemolysis assessment. These criteria should be reported along with a record of the percentage of specimens that met the criteria and therefore were included in the study. The numbers of specimens examined at each stage in the study should be recorded in the suggested flowchart and, particularly, in the REMARK profile (see Item 12). This information permits the reader to better assess the feasibility of collecting the required specimens and might indicate potential biases introduced by the specimen screening criteria.

Often, the specific handling of a particular set of specimens may not be known, but if the standard operating procedures of the pathology department are known, it is helpful to report information such as type of fixative used and approximate length of fixation time; both fixative and fixation time have been reported to dramatically affect the expression of some markers evaluated in tissue [42],[43].

Information should be provided about whether tissue sections were cut from a block immediately prior to assaying for the marker. If tissue sections have been stored, the storage conditions (for example, temperature and air exposure) should be noted, if known. Some markers assessed by immunohistochemistry have shown significant loss of antigenicity when measured in cut sections that had been stored for various periods of time [44],[45]. The use of stabilizers (for example, to protect the integrity of RNA) should be reported. For frozen specimens, it is important to report how long they were stored, at what temperature and whether they had been thawed and re-frozen. If the specimen studied is serum or plasma, information should be provided about how the specimen was collected, including anticoagulants used, the temperature at which the specimen was maintained prior to long-term storage, processing protocols, preservatives used, and conditions of long-term storage.

Typically, some control samples will be assayed as part of the study. Control samples may provide information about the marker in non-diseased individuals (biological controls) or they may provide a means to monitor assay performance (assay controls).

Biological control samples may be obtained from healthy volunteers or from other patients visiting a clinic for medical care unrelated to cancer. Apparently normal tissue adjacent to the tumor tissue (in the same section), or normal tissue taken during the surgical procedure but preserved in a separate block, may also be used as a control. It is important to discuss the source of the biological controls and their suitability with respect to any factors that might differ between the control subjects and cancer patients (for example, other morbidities and medications, sex, age, and fasting status) and have an impact on the marker [46]. Information about the comparability of handling of control samples should also be provided.

Information about assay control or calibrator samples should also be reported. For example, if dilution series are used to calibrate daily assay runs or control samples with known marker values are run with each assay batch, information about these samples should be provided (see Item 5).

Assay Methods

Item 5

Specify the assay method used and provide (or reference) a detailed protocol, including specific reagents or kits used, quality control procedures, reproducibility assessments, quantitation methods, and scoring and reporting protocols. Specify whether and how assays were performed blinded to the study endpoint.

Examples

‘Immunohistochemistry was used to detect the presence of p27, MLH1, and MSH2 proteins in primary tumor specimens using methods described in previous reports. Positive controls were provided by examining staining of normal colonic mucosa from each case; tumors known to lack p27, MLH1, or MSH2 were stained concurrently and served as negative controls … In this report, we scored the tumors using a modification of our previous methods that we believe provides best reproducibility and yields the same outcome result as that using our previous scoring method (data not shown). Nuclear expression of p27 was evaluated in a total of 10 randomly selected high-power fields per tumor. A tumor cell was counted as p27 positive when its nuclear reaction was equal to or stronger than the reaction in surrounding lymphocytes, which were used as an internal control. All cases were scored as positive (>10% of tumor cells with strong nuclear staining), negative (<10% of tumor cells with strong nuclear staining), or noninformative.’ [47]

‘Evaluation of immunostaining was independently performed by two observers (KAH and PDG), blinded to clinical data. The agreement between the two observers was >90%. Discordant cases were reviewed with a gynaecological pathologist and were re-assigned on consensus of opinion.’ [48]

Explanation

Assay methods should be reported in a complete and transparent fashion with a level of detail that would enable another laboratory to reproduce the measurement technique. The term ‘assay’ is used broadly to mean any measurement process applied to a biological specimen that yields information about that specimen. For example, the assay may involve a single biochemical measurement or multiple measurements, or it may involve a semi-quantitative and possibly subjective scoring based on pathologic assessment. It has been demonstrated for many markers that different measurement techniques can produce systematically different results. For example, different levels of human epidermal growth factor receptor 2 expression have been found using different methods [49],[50]. Variations of p53 expression were observed in bladder tumors due to different staining techniques and scoring methods in a reproducibility study comparing immunohistochemical assessments performed in five different laboratories [51].

Although a complete listing of the relevant information to report for every class of assay is beyond the scope of this paper, examples of the general types of technical details that should be reported are as follows. Specific antibodies, antigen retrieval steps, standards and reference materials, scoring protocol, and score reporting and interpretation (for example, if results are reported as positive or negative) should be described for immunohistochemical assays. For DNA- and RNA-based assays, specific primers and probes should be identified along with any scoring or quantitation methods used. If another widely accessible document (such as a published paper) details the exact assay method used, it is acceptable to reference that other document without repeating all the technical details. If a commercially available kit is used for the assay, it is important to state whether the kit instructions were followed exactly; any deviations from the kit's recommended procedures must be fully acknowledged in the report.

It is important to report the minimum amount of specimen that was required to perform the assay (for example, a 5 µm section or 5 µg DNA) and whether there were any other assessments that were performed to judge the suitability of the specimen for use in the study (see Item 4). Assays requiring a large amount of specimen may not be feasible for broader clinical application, and study results may be biased toward larger tumors. If there were any additional specimen pre-processing steps required (for example, microdissection or polymerase chain reaction amplification), these should be stated as well.

It is helpful to report any procedures, such as use of blinded replicate samples or control reference samples, that are employed to assess or promote consistency of assay results over time or between laboratory sites. For assays in a more advanced state of development, additional examples could include qualification criteria for new lots of antibodies or quantitative instrument calibration procedures. If reproducibility assessments have been performed, it is helpful to report the results of those studies to provide a sense of the overall variability in the assay and identify major sources contributing to the variability.

Despite complete standardization of the assay technique and quality monitoring, random variation (measurement error) in assay results may persist due to assay imprecision, variation between observers or intratumoral biological heterogeneity. For example, many immunohistochemical assays require selection of ‘best’ regions to score, and subjective assessments of staining intensity and percentage of stained cells. The impact of measurement error is attenuation of the estimated prognostic effect of the marker. Good prognostic performance of a marker cannot be achieved in the presence of a large amount of imprecision. It is important to report any strategies that were employed to reduce the measurement error, such as taking the average of two or three readings to produce a measurement with less error, potentially increasing the power of the study and hence the reliability of the findings. In multicenter studies, single reviewers or reference laboratories are often used to reduce variability in marker measurements, and such efforts should be noted.
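The attenuation described above, and the benefit of averaging replicate readings, can be illustrated with a minimal sketch. It assumes a classical additive error model and, for simplicity, a linear regression of a continuous outcome on the marker rather than a survival model; the function names and all parameter values are hypothetical and chosen only for illustration.

```python
import random
import statistics


def attenuation_factor(var_true, var_error, n_replicates=1):
    """Expected fraction of the true slope that remains when the marker is
    measured with classical additive error and the mean of n_replicates
    independent readings is analyzed (assumed model, linear regression)."""
    return var_true / (var_true + var_error / n_replicates)


def simulated_slope(n_patients, true_slope, sd_true, sd_error,
                    n_replicates, seed=0):
    """Least-squares slope of the outcome on the averaged noisy reading."""
    rng = random.Random(seed)
    observed, outcome = [], []
    for _ in range(n_patients):
        x = rng.gauss(0.0, sd_true)  # true (unobserved) marker value
        readings = [x + rng.gauss(0.0, sd_error) for _ in range(n_replicates)]
        observed.append(statistics.fmean(readings))
        outcome.append(true_slope * x + rng.gauss(0.0, 1.0))
    mean_w = statistics.fmean(observed)
    mean_y = statistics.fmean(outcome)
    sxy = sum((w - mean_w) * (y - mean_y) for w, y in zip(observed, outcome))
    sxx = sum((w - mean_w) ** 2 for w in observed)
    return sxy / sxx


# Equal true and error variance: one reading keeps about half of the effect,
# the mean of three readings keeps about three quarters.
print(attenuation_factor(1.0, 1.0, 1))
print(attenuation_factor(1.0, 1.0, 3))
print(simulated_slope(20000, 1.0, 1.0, 1.0, 1, seed=1))
print(simulated_slope(20000, 1.0, 1.0, 1.0, 3, seed=1))
```

In a Cox model the attenuation does not follow this formula exactly, but the qualitative message is the same: larger measurement error pulls the estimated effect toward the null, and averaging readings reduces that pull.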

There may be a risk of introducing bias when a patient's clinical outcome is known by the individual making the marker assessment, particularly when the marker evaluation involves considerable subjective judgment. Therefore, it is important to report whether marker assessments were made blinded to clinical outcome.

Study Design

Item 6

State the method of case selection, including whether prospective or retrospective and whether stratification or matching (for example, by stage of disease or age) was used. Specify the time period from which cases were taken, the end of the follow-up period, and the median follow-up time.

To clarify the discussion we have split this item into two parts.

a. Case selection

Examples

‘We retrospectively analysed tumour samples from patients who were prospectively enrolled in phase II and III trials of HDC for HRPBC at the University of Colorado between 1990 and 2001.’ [52]

‘Seven hundred and seventy female patients with primary invasive breast cancer, diagnosed between 1992 and 1997 at the Institute of Oncology, Ljubljana, were included in the study. The patients had not been previously treated, had no proven metastatic disease at the time of diagnosis and no synchronous or metachronous occurring cancer. The primary inclusion criterion was an adequate histogram obtained from an FNA sample (see below). The diagnosis of carcinoma was therefore first established by FNA and subsequently confirmed and specified by histological examination in 690 primarily resected tumours (80 patients were not treated surgically).’ [53]

‘Of the 165 patients, all patients who had a pathology report of a non-well-differentiated (defined as moderately- to poorly-differentiated) SCC were identified. A matched control group of well-differentiated SCC was identified within the database. Matching criteria were (1) age (±5 y), (2) gender, and (3) site.’ [54]

Explanation

The reliability of a study depends importantly on the study design. An explanation of how patients were selected for inclusion in the study should be provided. Reliance on a label of ‘prospective’ or ‘retrospective’ is inadequate because these terms are ill-defined [55]. It should be clearly stated whether patients were recruited prospectively as part of a planned marker study, represent the full set or a subset of patients recruited prospectively for some other purpose such as a clinical trial, or were identified retrospectively through a search of an existing database, for example from hospital or registry records or from a tumor bank. Whether patients were selected with stratification according to clinicopathologic factors such as stage, based on survival experience or according to a matched design (for example, matched pairs of patients who did and did not recur) has important implications for the analysis and interpretation, so details of the procedures used should be reported.

Authors should describe exactly how and when clinical, pathologic, and follow-up data were collected for the identified patients. It should be stated whether the marker measurements were extracted retrospectively from existing records, whether assays were newly performed using stored specimens, or whether assays were performed in real time using prospectively collected specimens.

In truly prospective studies, complete baseline measurements (marker or clinicopathologic factors) can be made according to a detailed protocol using standard operating procedures, and the patients can be followed for an adequate length of time to allow a comparison of survival and other outcomes in relation to baseline tumor marker values. Prospective patient identification and data collection are preferable because the data will be of higher quality. Prospective studies specifically designed to address marker questions are rare, although some prognostic studies are embedded within randomized treatment trials. Aside from a potential sample size problem, a prognostic marker study may be restricted to only some of the centers from a multicenter randomized controlled trial (RCT). Case selection within participating centers (for example, inclusion of only younger patients or those with large tumors) may introduce bias, and details of any such selection should be reported.

Most prognostic factor studies are retrospective in the sense that the assay of interest is performed on stored samples. The benefit of these retrospective studies is that there is existing information about moderate or long-term patient follow-up. Their main disadvantage is the lower quality of the data: clinical information collected retrospectively is often incomplete and clinicopathologic data may not have been collected in a standardized fashion (except perhaps if the data were collected as part of a clinical trial). Eligible patients should be considered to be part of the study cohort and not excluded because of incomplete data or loss to follow-up, with the amount of missing data reported for each variable. That allows readers to judge the representativeness of the patients whose data were available for analysis. (See also Item 10e, Item 12, and Box 2.)

Box 2. Missing Data

Missing data occur in almost all studies. The most common approach to dealing with missing data is to restrict analyses to individuals with complete data on all variables required for a particular analysis. These complete-case analyses can be biased if individuals with missing data are not typical of the whole sample. Furthermore, a small number of missing values in each of several variables can result in a large number of patients excluded from a multivariable analysis. The smaller sample size leads to a reduction in statistical power.
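To make concrete how quickly complete-case analysis can shrink a sample, the following minimal sketch computes the expected fraction of patients with no missing values. It assumes that missingness is independent across variables, which is an illustrative simplification; the function names, variable names, and the 5% figure are hypothetical.

```python
def expected_complete_case_fraction(missing_fractions):
    """Expected fraction of patients with complete data on every variable,
    assuming (for illustration) independent missingness across variables."""
    fraction = 1.0
    for p in missing_fractions:
        fraction *= 1.0 - p
    return fraction


def count_complete_cases(records, variables):
    """Number of records in which none of the listed variables is None."""
    return sum(
        all(record.get(name) is not None for name in variables)
        for record in records
    )


# Ten variables, each missing for 5% of patients.
print(round(expected_complete_case_fraction([0.05] * 10), 3))  # 0.599

# Hypothetical records: only the first has all three variables.
patients = [
    {"age": 61, "stage": "T2", "marker": 3.4},
    {"age": 58, "stage": None, "marker": 1.2},
    {"age": None, "stage": "T1", "marker": 2.8},
]
print(count_complete_cases(patients, ["age", "stage", "marker"]))  # 1
```

Under these assumptions, about 40% of patients would be dropped from a multivariable model even though no single variable is missing for more than 5% of them.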

Imputation, in which each missing value is replaced with an estimated value, is a way to include all patients in the analysis. However, simple forms of imputation (for example, replacing values by the stage-specific mean) are likely to produce standard errors that are too small.

Data are described as missing completely at random (MCAR) if the probability that a specific observation is missing does not depend on the value of any observable variables. Data are missing at random (MAR) if missingness depends only on other observed variables. Data are missing not at random (MNAR) if the probability of being missing depends on unobserved values including possibly the missing value itself.

Small amounts of missing data can be imputed using simple methods, but when multiple variables have missing values, multiple imputation is the most common approach [130],[195],[196]. Most imputation methods assume data are MAR, but this cannot be proved, and these methods require assuming models for the relationship between missing values and the other observed variables. Use of a separate category indicating missing data has been shown to bias results [195].

The plausibility of assumptions made in missing data analyses is generally unverifiable. When more than minimal amounts of data are imputed it is valuable to present results obtained with imputation alongside those from complete case analyses, and to discuss important differences (Item 18).

In situations where more complex case selection strategies are used, those approaches must be carefully described. Given the small size of most prognostic studies (see Item 9), it is sometimes desirable to perform stratified sampling to ensure that important subgroups (for example, different stages of disease or different age groups) are represented. The stratified sampling may be in proportion to the prevalences of the subgroups in the population, or more rare subgroups may be oversampled (weighted with a higher sampling probability), especially if subgroup analyses are planned.

Occasionally, patients are sampled in relation to their survival experience, for example, taking only patients with either very short or very long survival (excluding some patients who were censored). Simulation studies have shown that sampling which excludes certain subgroups of patients leads to bias in estimates of prognostic value and thus should be avoided [56]. If a large number of patients is available for study but few patients had events, case-control (a case being a patient with an event, a control being a patient without an event) sampling methods (matched or unmatched) may offer improved efficiency.

If standard survival analysis methods are used, unselected cases or random samples of cases from a given population are necessary to produce unbiased survival estimates. If more complex stratified, weighted, or case-control sampling strategies are used, then specialized analysis methods appropriate for those sampling designs (for example, stratified and weighted analyses or conditional logistic regression) should have been applied and should be described [57],[58] (see Item 10).
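Why oversampled designs need a weighted analysis can be seen in a small sketch that estimates the population proportion of marker-positive patients from a stratified sample. Each patient is weighted by the inverse of the sampling probability of their stratum. The strata, counts, and sampling probabilities are invented for illustration, and a simple proportion stands in for the survival analyses discussed above, which would require the specialized methods cited.

```python
def weighted_proportion(sample, sampling_prob):
    """Inverse-probability-weighted proportion of marker-positive patients.

    sample: list of (stratum, marker_positive) tuples
    sampling_prob: dict mapping stratum -> probability that a patient in
        that stratum was selected into the sample
    """
    numerator = 0.0
    denominator = 0.0
    for stratum, positive in sample:
        weight = 1.0 / sampling_prob[stratum]
        numerator += weight * (1.0 if positive else 0.0)
        denominator += weight
    return numerator / denominator


# Hypothetical population: 900 early-stage and 100 late-stage patients.
# 10% of early-stage and all late-stage patients are sampled.
sampling_prob = {"early": 0.1, "late": 1.0}
sample = (
    [("early", True)] * 18 + [("early", False)] * 72
    + [("late", True)] * 60 + [("late", False)] * 40
)

unweighted = sum(positive for _, positive in sample) / len(sample)
print(round(unweighted, 3))  # 0.411, distorted by oversampling late stage
print(round(weighted_proportion(sample, sampling_prob), 3))  # 0.24
```

In this constructed example the weighted estimate recovers the population value (20% of 900 plus 60% of 100, that is 240 of 1000), whereas the unweighted estimate reflects the sampling design rather than the population.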

b. Time period

Examples

‘… 1143 primary invasive breast tumors collected between 1978 and 1989 … All patients were examined routinely every 3–6 months during the first 5 years of follow-up and once a year thereafter. The median follow-up period of patients alive (n = 584) was 124 months (range, 13–231 months). Patients with events after 120 months were censored at 120 months because after 10 years of observation, patients frequently are redirected to their general practitioner for checkups and mammography and cease to visit our outpatient breast cancer clinic.’ [59]

‘The estimated median follow-up time, as calculated by the reverse Kaplan-Meier method, was 4.3 years.’ [60]

Explanation

Knowing when a study took place and over what period participants were recruited places a study in historical context. Medical and surgical therapies evolve continuously and may affect the routine care given to patients over time. In most studies where the outcome is the time to an event, follow-up of all participants is ended on a specific date. This date should be given, and it is also useful to report the median duration of follow-up.

The method of calculating the median follow-up should be specified. The preferred approach is the reverse Kaplan-Meier method, which uses data from all patients in the cohort [61]. Here, the standard Kaplan-Meier method is used with the event indicator reversed so that censoring becomes the outcome of interest. Sometimes it may be helpful to also give the median follow-up of those patients who did not have the event (in other words, those with censored survival times). The amount of follow-up may vary for different endpoints, for example when recurrence is assessed locally but information about deaths comes from a central register.
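The reverse Kaplan-Meier calculation can be sketched in a few lines. The implementation below is a minimal illustration, not a substitute for validated statistical software: the function names and the example data are hypothetical, and the median is taken as the smallest time at which the estimated curve is at or below 0.5, which is one common convention.

```python
from fractions import Fraction


def kaplan_meier(times, events):
    """Return [(time, survival)] at each distinct time with >= 1 event.
    events: 1 if the event of interest occurred at that time, 0 if censored.
    Fractions are used so that comparisons with 1/2 are exact."""
    data = sorted(zip(times, events))
    n_at_risk = len(data)
    survival = Fraction(1)
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        n_events = 0
        n_leaving = 0
        while i < len(data) and data[i][0] == t:
            n_events += data[i][1]
            n_leaving += 1
            i += 1
        if n_events > 0:
            survival *= Fraction(n_at_risk - n_events, n_at_risk)
            curve.append((t, survival))
        n_at_risk -= n_leaving
    return curve


def median_from_curve(curve):
    """Smallest time at which the curve is <= 1/2, or None if not reached."""
    for t, s in curve:
        if s <= Fraction(1, 2):
            return t
    return None


def reverse_km_median_follow_up(times, died):
    """Median follow-up: Kaplan-Meier with the event indicator reversed,
    so that censoring is the 'event' and death is treated as censoring."""
    censored = [1 - d for d in died]
    return median_from_curve(kaplan_meier(times, censored))


# Hypothetical follow-up times in months; died = 1 means death was observed.
times = [6, 12, 20, 30, 40, 50, 60]
died = [1, 1, 0, 1, 0, 0, 0]
print(reverse_km_median_follow_up(times, died))  # 50
```

In this small example the median of all observed times is 30 months and the median among censored patients is 45 months, while the reverse Kaplan-Meier estimate is 50 months. The three summaries can differ noticeably, which is why the method used should be stated.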

It may also be useful to report how many patients were lost to follow-up for a long period (for example, over one year) or the completeness of the data compared to that if no patient was lost to follow-up [62],[63].

In a review of 132 reports in oncology journals in 1991 that used survival analysis, nearly 80% included the starting and ending dates for accrual of patients, but only 24% also reported the date on which follow-up ended [64]. A review of articles published in 2006 found those dates reported in 74% and 18% of articles, respectively. Of 331 studies included in 20 published meta-analyses, the time period during which patients were selected was precisely defined in 232 (70%) [18].

Item 7

Precisely define all clinical endpoints examined.

Examples

‘Survival time was defined to be the period of time in months from the date of diagnosis to the date of death from breast cancer. Patients who died from causes other than those relating to breast cancer were included for the study, and data for these records were treated as right-censored cases for evaluation purposes. Relapse time was defined as the period of time in months from the date of diagnosis to the date at which relapse was clinically identified. Data on patients who dropped out of the study for reasons other than a breast-cancer relapse were considered right-censored for these analyses.’ [65]

‘The primary end point was tumour recurrence or death of a patient. RFS was defined as time from mastectomy to the first occurrence of either locoregional or distant recurrence, contralateral tumour, secondary tumour or death; overall survival as time from operation to death.’ [66]

Explanation

Survival analysis is based on the elapsed time from a relevant time origin, often the date of diagnosis, surgery, or randomization, to a clinical endpoint. That time origin should always be specified.

Most prognostic studies in cancer examine few endpoints, mainly death, recurrence of disease, or both, but these endpoints are often not clearly defined (see Box 3). Analyses of time to death may be based on either deaths from any cause or only cancer-related deaths. The endpoint should be defined precisely and not referred to just as ‘survival’ or ‘overall survival’. If deaths from cancer are analyzed, it is important to indicate how the cause of death was classified. If known, it can also be helpful to indicate what records (such as death certificate or tumor registry) were examined to determine the cause of death.

Box 3. Clinical Outcomes

It is important to clearly define any endpoints examined (see Item 7). Events typically considered in tumor marker prognostic studies include death due to any cause, death from cancer, distant recurrence, local recurrence, tumor progression, new primary tumor, or tumor response to treatment. The clinical endpoint is reached when the event occurs. For death, recurrence, progression, and new primary tumor, there is usually interest not only in whether the event occurs (endpoint reached), but also the time elapsed (for example, from the date of surgery or date of randomization in a clinical trial) until it occurs. Time until last evaluation is used for patients without an event (time censored). The clinical outcome is the combination of the attainment or non-attainment of the endpoint and the time elapsed. Such clinical outcomes are referred to as time-to-event outcomes. Commonly examined outcomes in tumor marker prognostic studies are disease-free survival (DFS), distant DFS, and overall survival (OS). Different event types are sometimes combined to define a composite endpoint, for example DFS usually includes any recurrence (local, regional, or distant) and death due to any cause. For composite endpoints, the time-to-event is the time elapsed until the first of any of the events comprising the composite endpoint occurs. As recently shown, a majority of articles failed to provide a complete specification of events included in endpoints [197].
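The construction of a time-to-event outcome from a composite endpoint can be made explicit in code. The following minimal sketch takes the time of the first qualifying event, or the time of last evaluation if no qualifying event occurred. The event names, the component lists, and the function name are hypothetical; the DFS components follow the usual definition mentioned above, and a real study would need to state its own list.

```python
# Assumed component lists for illustration; each study must define its own.
DFS_COMPONENTS = (
    "local_recurrence",
    "regional_recurrence",
    "distant_recurrence",
    "death_any_cause",
)
OS_COMPONENTS = ("death_any_cause",)


def composite_outcome(event_times, last_evaluation, components):
    """Return (time, event_indicator, first_event_name).

    event_times: dict mapping event name -> time from the time origin,
        or None if that event was not observed
    last_evaluation: time from the time origin to the last evaluation
    components: names of the events that count toward the endpoint
    """
    observed = {
        name: event_times[name]
        for name in components
        if event_times.get(name) is not None
    }
    if not observed:
        return last_evaluation, 0, None  # censored at last evaluation
    first = min(observed, key=observed.get)
    return observed[first], 1, first


# Hypothetical patient: distant recurrence at 14 months, death at 30 months.
patient = {"distant_recurrence": 14, "death_any_cause": 30}
print(composite_outcome(patient, 30, DFS_COMPONENTS))  # (14, 1, 'distant_recurrence')
print(composite_outcome(patient, 30, OS_COMPONENTS))   # (30, 1, 'death_any_cause')

# Hypothetical patient with no events, last evaluated at 48 months.
print(composite_outcome({}, 48, DFS_COMPONENTS))       # (48, 0, None)
```

Writing the component list down in this way forces a decision about each event type (for example, whether new primary tumors count), which is exactly the information readers need in order to interpret the endpoint.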

Many clinical endpoints do not have standard definitions, although there have been some recent efforts to standardize definitions for some disease sites. The STandardized definitions for Efficacy End Points (STEEP) system [67] proposed standardized endpoint definitions for adjuvant breast cancer trials to address inconsistencies such as the fact that new primary tumors, non-cancer death, and in situ cancers may or may not be included as events in DFS for breast cancer. Different names may be used interchangeably for one survival time outcome, for example, recurrence-free survival and DFS. Furthermore, there is not always agreement on which endpoint is the most relevant endpoint to consider in a particular disease setting. For example, reliable information about cause of death is sometimes not available, so considering death due to any cause is often preferred. In some situations, for example, in an older patient population with small risk of dying from the cancer, it can be argued that death due to cancer is more relevant because it is expected that many deaths will be unrelated to the cancer and including them in the endpoint could make the estimated prognostic effect of the marker difficult to interpret.

The endpoints to be examined should be decided on the basis of clinical relevance. The results for all endpoints that were examined should be reported regardless of the statistical significance of the findings (see Items 15 to 17 and Box 5). A demonstrated association of a marker with one of these endpoints does not guarantee its association with all of the endpoints. For example, local recurrence may be an indication of insensitivity to local or regional therapy (such as radiation therapy) whereas distant recurrence requires that tumor cells have the ability to metastasize. Different markers may be indicative of these distinct characteristics.

If there was a specific rationale for choosing the primary clinical endpoint, it should be stated. For example, if the studied marker is believed to be associated with the ability of a cell to metastasize, an endpoint that focuses on distant recurrences might be justified. For a marker believed to be associated with sensitivity to radiation therapy, local-regional recurrences in a population of patients who received radiotherapy following primary surgery might be relevant.

The lack of standardized definitions also affects the analysis of recurrence of disease. Relapse-free survival, disease-free survival (DFS), remission duration, and progression-free survival are the terms most commonly used; however, they are rarely defined precisely. The first three imply that only patients who were disease-free after initial intervention were analyzed (although this is not always the case), while for progression-free survival all patients are generally included in the analysis. If authors analyze disease recurrence they should precisely define that endpoint, in particular with respect to how deaths are treated. Similarly, outcomes such as distant DFS should be defined precisely. Further, standardized definitions across studies would be desirable [67].

Some endpoints require subjective determination (for example, progression-free survival determined by a review of radiographic images). For this reason, it can also be helpful to report, if known, whether the endpoint assessments were made blinded to the marker measurements. It is helpful to report any additional steps taken to confirm the endpoint assessments (for example, a central review of images for progression determination).

The time origin was not stated for at least one endpoint in 48% of 132 papers in cancer journals reporting survival analyses [64]. At least one endpoint was not clearly defined in 62% of papers. Among the 106 papers with death as an endpoint, only 50 (47%) explicitly described the endpoint as either any death or only cancer death. In 64 papers that reported time to disease progression, the treatment of deaths was unclear in 39 (61%). Outcomes were precisely defined in 254 of 331 studies (77%) included in 20 published meta-analyses [18]. The authors noted, however, that ‘this percentage may be spuriously high because we considered all mortality definitions to be appropriate regardless of whether any level of detail was provided’.

Item 8

List all candidate variables initially examined or considered for inclusion in models.

Example

‘Cox survival analyses were performed to examine prognostic effects of vitamin D univariately (our primary analysis) and after adjustment for each of the following in turn: age (in years), tumor stage (T2, T3, or TX v T1), nodal stage (positive v negative), estrogen receptor status (positive or equivocal v negative), grade (3 v 1 or 2), use of adjuvant chemotherapy (any v none), use of adjuvant hormone therapy (any v none), body mass index (BMI; in kilograms per square meter), insulin (in picomoles per liter), and season of blood draw (summer v winter). Simultaneous adjustment for age, tumor stage, nodal stage, estrogen receptor status, and grade was then performed.’ [68]

Explanation

It is important for readers to know which marker measurements or other clinical or pathological variables were initially considered for inclusion in models, including variables not ultimately used. The reasons for lack of inclusion of variables should be addressed; for example, variables with large amounts of missing data (see Box 2). Authors should fully define all variables and, when relevant, they should explain how they were measured.

All of the variables considered for standard survival analyses should be measured at or before the study time origin (for example, the date of diagnosis) [69],[70]. (For tumor markers, this means the measurements are made on specimens collected at or before study time origin even if the actual marker assays are performed at a later time on stored specimens.) Variables measured after the time origin, such as experiencing an adverse event, should more properly be considered as outcomes, not predictors [71]. Another example is tumor shrinkage when the time origin is diagnosis or start of treatment. Statistical methods exist to allow inclusion of variables measured at times after the start of follow-up (‘time-dependent covariates’) [72], but they are rarely used and require strong assumptions [73],[74].

A list of the considered candidate variables was presented in 71% of a collection of 331 prognostic studies [18]. Of 132 articles published in cancer journals, 18 (13%) analyzed variables that were not measurable at the study time origin [64], of which 15 compared the survival of patients who responded to treatment to survival of those who did not respond. Out of 682 observational studies in clinical journals that used a survival analysis, 127 (19%) included covariates not measurable at baseline [69].

Item 9

Give rationale for sample size; if the study was designed to detect a specified effect size, give the target power and effect size.

Examples

‘Cost and practical issues restricted the sample size in our study to 400 patients. Only 30 centres entered ten or more patients in AXIS, so for practical reasons, retrieval of samples began with these centres within the UK, continuing until the target sample size of 400 had been reached.’ [75]

‘Assuming a control survival rate of 60% and 50% of patients with high TS expression or p53 overexpression, then analysis of tissue samples from 750 patients will have 80% power to detect an absolute difference of 10% in OS associated with the expression of either of these markers.’ [76]

‘Although it was a large trial, FOCUS still lacked power to be split into test and validation data sets. It was therefore treated as a single test-set, and positive findings from this analysis need to be validated in an independent patient population. A 1% significance level was used to allow for multiple testing. The number of assessable patients, variant allele frequencies, and consequent power varied by polymorphism; however, with an overall primary outcome event rate of 20%, we could detect differences of 10% (eg, 14% v 24%) between any two treatment comparisons, and we could detect a linear trend in genotype subgroups varying by 6% (eg, 13% v 19% v 25%) with a significance level of 1% and 90% power … Even with a dropout rate of 14% for incomplete clinical data, there was 85% power at a significance level of 1% to detect a 10% difference from 14% to 24% in toxicity for any two treatment comparisons or a linear trend in genotype subgroups from 13% to 19% to 25%.’ [77]

Explanation

Sample size has generally received little attention in prognostic studies, perhaps because these studies are often performed using pre-existing specimen collections or data sets. For several reasons, the basis for a sample size calculation in these studies is less clear than for a randomized trial. For example, the minimum effect size of interest for a prognostic marker study may be quite different from that of an intervention study, and the effect of the marker adjusted for other standard variables in a multivariable model may be of greater interest than the unadjusted effect. Authors should explain the considerations that led to the sample size. Sometimes a formal statistical calculation will have been performed, for example calculation of the number of cases required to obtain an estimated hazard ratio with prescribed precision or to have adequate power to detect an effect of a given size. More often sample size will be determined by practical considerations, such as the availability of tumor samples or cost. Even in this situation, it is still helpful to report what effect size will be detectable with sufficient power given the pre-determined sample size.

Several authors have addressed the issue of sample size calculations applicable to prognostic studies [78]–[80]. The most important factor influencing power and sample size requirement for a study with a time-to-event outcome is the number of observed events (effective sample size), not the number of patients. For a binary outcome, the effective sample size is the smaller of the two frequencies, ‘event’ or ‘non-event’. Additional factors, such as the minimum detectable effect size, distribution of the marker (or the prevalence of a binary marker), coding of the marker (whether treated as a continuous or dichotomous variable; see Item 11 and Box 4), and type of analysis method or statistical test, also have an impact. Because the number of events is so important, studies of patients with a relatively good prognosis, such as lymph node negative breast cancer, require many more patients or longer follow-up than studies of metastatic disease, in which events are observed more frequently. Choosing an endpoint that counts recurrence as an event in addition to death will also yield more observed events and higher power, which is an important reason why DFS is often preferred as an endpoint [81].
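To illustrate why the number of events, rather than the number of patients, drives power, the following minimal sketch applies Schoenfeld's approximation for a binary marker in a proportional hazards setting. It is not taken from any of the studies cited here. The function names and the input values (hazard ratio 0.7, marker prevalence 50%, two-sided 5% significance level, 80% power, and overall event probabilities of 20% and 60%) are assumptions chosen purely for illustration.

```python
import math
from statistics import NormalDist


def required_events(hazard_ratio, prevalence, alpha=0.05, power=0.80):
    """Approximate number of events needed to detect `hazard_ratio` for a
    binary marker with the given prevalence (Schoenfeld's approximation,
    two-sided test at level `alpha`)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    log_hr = math.log(hazard_ratio)
    events = (z_alpha + z_beta) ** 2 / (prevalence * (1 - prevalence) * log_hr ** 2)
    return math.ceil(events)


def required_patients(events, event_probability):
    """Patients needed so that the expected number of events reaches `events`,
    assuming a (hypothetical) overall probability of observing an event."""
    return math.ceil(events / event_probability)


if __name__ == "__main__":
    d = required_events(hazard_ratio=0.7, prevalence=0.5)
    print("events needed:", d)
    # The same number of events translates into very different numbers of
    # patients depending on how often events are observed.
    for p_event in (0.2, 0.6):
        print("event probability", p_event, "-> patients:", required_patients(d, p_event))
```

Under these assumptions roughly 250 events are needed. A good-prognosis population in which only one patient in five has an event would then require about three times as many patients as a population in which three in five do. An unbalanced marker (for example, prevalence 20%) increases the required number of events further.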

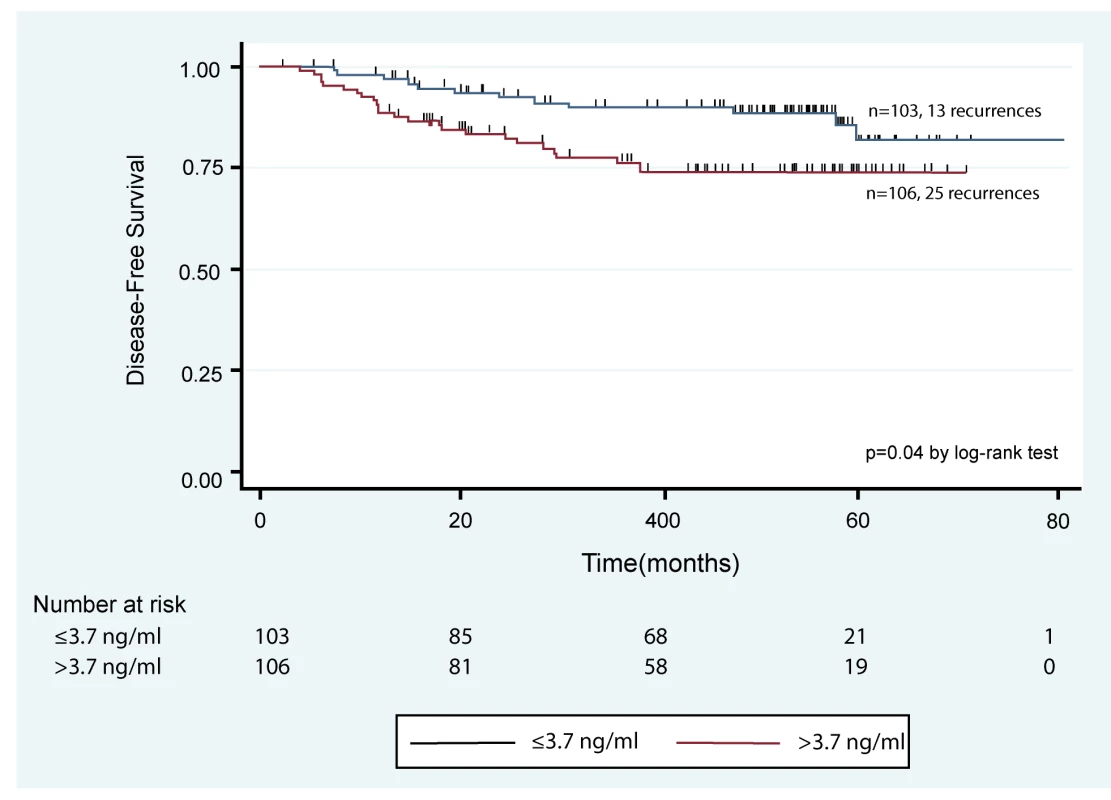

Box 4. Continuous Variables

Many markers are recorded as continuous measurements, but in oncology it is common to convert them into categorical form by using one or more cutpoints (Item 11). Common reasons are to simplify the analysis, to make it easier for clinicians to use marker information in decision making, to avoid having to specify the often unknown functional form of the influence of a marker, and to facilitate graphical presentation (for example, Kaplan-Meier curves). Although categorization is required for purposes such as decision making, it must be stressed that categorization of continuous data is unnecessary for statistical analysis. The perceived advantages of a simpler analysis come at a high cost, as explained below. The same considerations apply to both the marker being studied and other continuous variables.

Categorization

Categorization allows researchers to avoid strong assumptions about the relationship between the marker and risk. However, this comes at the expense of throwing away information. The information loss is greatest when the marker is dichotomized (two categories).
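The size of the information loss can be illustrated in a deliberately simplified setting: a normally distributed marker with a linear effect on a continuous outcome (not a survival outcome). In that setting theory gives a squared correlation for the median-dichotomized marker of about 2/π ≈ 0.64 times that of the continuous marker, which is comparable to discarding about a third of the data. The sketch below checks this by simulation; the function names, the slope, the sample size, and the seed are illustrative assumptions.

```python
import math
import random


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((u - mean_a) * (v - mean_b) for u, v in zip(a, b))
    var_a = sum((u - mean_a) ** 2 for u in a)
    var_b = sum((v - mean_b) ** 2 for v in b)
    return cov / math.sqrt(var_a * var_b)


def squared_correlation_ratio(n=20000, slope=0.5, seed=1):
    """Ratio of r^2 (dichotomized marker) to r^2 (continuous marker)."""
    rng = random.Random(seed)
    marker = [rng.gauss(0, 1) for _ in range(n)]
    outcome = [slope * x + rng.gauss(0, 1) for x in marker]
    median = sorted(marker)[n // 2]
    # Dichotomize at the sample median: 1 = 'high', 0 = 'low'.
    marker_binary = [1.0 if x > median else 0.0 for x in marker]
    return pearson(marker_binary, outcome) ** 2 / pearson(marker, outcome) ** 2


if __name__ == "__main__":
    print("simulated ratio:", round(squared_correlation_ratio(), 3))
    print("theoretical 2/pi:", round(2 / math.pi, 3))
```

Cutpoints away from the median, or skewed marker distributions, generally lose more information than this.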

It is well known that the results of analyses can vary if different cutpoints are used for splitting. Dichotomizing does not introduce bias if the split is at the median or some other pre-specified percentile, as is often done. If, however, the cutpoint is chosen based on multiple analyses of the data, in particular taking the value which produced the smallest P value, then the P value will be much too small and there is a large risk of a false positive finding [198]. An analysis based on the so-called optimal cutpoint will also heavily overestimate the prognostic effect, although bias correction methods are available [199].
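The inflation of the false positive rate caused by a data-driven cutpoint can be seen in a small simulation. The sketch below is an illustration under stated assumptions, not a reproduction of the analyses in [198]: the marker and a continuous outcome are generated independently (so any 'significant' result is a false positive), groups are compared with a normal-approximation test of means rather than a log-rank test, and candidate cutpoints are restricted so that each group holds at least 10% of patients. All names and settings are hypothetical.

```python
import math
import random
from statistics import NormalDist


def two_sample_p(sum1, sumsq1, n1, sum2, sumsq2, n2):
    """Two-sided P value for a difference in means (normal approximation)."""
    mean1, mean2 = sum1 / n1, sum2 / n2
    var1 = (sumsq1 - n1 * mean1 ** 2) / (n1 - 1)
    var2 = (sumsq2 - n2 * mean2 ** 2) / (n2 - 1)
    z = (mean1 - mean2) / math.sqrt(var1 / n1 + var2 / n2)
    return 2 * (1 - NormalDist().cdf(abs(z)))


def false_positive_rates(n_studies=400, n_patients=100, alpha=0.05, seed=1):
    """Return (rate with median split, rate with minimum-P cutpoint)."""
    rng = random.Random(seed)
    smallest = n_patients // 10           # each group keeps at least 10% of patients
    largest = n_patients - smallest
    hits_median = hits_min_p = 0
    for _ in range(n_studies):
        # Marker and outcome are independent: there is no true effect.
        pairs = sorted((rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(n_patients))
        outcome = [y for _, y in pairs]   # outcomes ordered by marker value
        total = sum(outcome)
        total_sq = sum(y * y for y in outcome)
        p_by_split = {}
        low_sum = low_sq = 0.0
        for k, y in enumerate(outcome, start=1):
            low_sum += y
            low_sq += y * y
            if smallest <= k <= largest:  # k patients fall below this cutpoint
                p_by_split[k] = two_sample_p(
                    low_sum, low_sq, k,
                    total - low_sum, total_sq - low_sq, n_patients - k,
                )
        hits_median += p_by_split[n_patients // 2] < alpha
        hits_min_p += min(p_by_split.values()) < alpha
    return hits_median / n_studies, hits_min_p / n_studies


if __name__ == "__main__":
    median_rate, min_p_rate = false_positive_rates()
    print("false positive rate, median split:", median_rate)
    print("false positive rate, minimum-P cutpoint:", min_p_rate)
```

With a pre-specified median split the false positive rate stays close to the nominal 5%, whereas selecting the cutpoint with the smallest P value yields a rate several times higher.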

Even with a pre-specified cutpoint, dichotomization is statistically inefficient and is thus strongly discouraged [153],[200],[201]. Further, prognosis is usually estimated from multivariable models so if cutpoints are needed as an aid in classifying people into distinct risk groups this is best done after modeling [153],[202].

Categorizing a continuous variable into three or more groups reduces the loss of information but is rarely done in clinical studies (in contrast to epidemiology). Even so, cutpoints result in a model with step functions, which is inadequate to describe a smooth relationship [110].

Keeping variables continuous

A linear functional relationship is the most popular approach for keeping the continuous nature of the covariate. Often that is an acceptable assumption, but it may be incorrect, leading to a mis-specified final model in which a relevant variable may not be included or in which the assumed functional form differs substantially from the unknown true form.

A check for linearity can be done by investigating whether the fit improves when some form of nonlinearity is allowed. For a long time, quadratic or cubic polynomials were used to model non-linear relationships, but the more general family of fractional polynomial (FP) functions provides a rich class of simple functions that often give an improved fit [203]. Determination of the FP specification and model selection can be done simultaneously, with a simple and understandable presentation of results [108],[110].
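As a minimal sketch of the idea behind first-degree fractional polynomials (FP1), the code below tries each power from the conventional set {−2, −1, −0.5, 0, 0.5, 1, 2, 3}, where power 0 denotes log(x), and keeps the transformation giving the best fit. It is a simplification under stated assumptions: it uses ordinary least squares on a continuous outcome and compares residual sums of squares, whereas applications to survival data would compare model deviances and would normally also consider second-degree functions and a formal test against the linear model. The function names and the simulated data are hypothetical.

```python
import math
import random

# Conventional FP power set; power 0 is interpreted as log(x).
FP_POWERS = (-2, -1, -0.5, 0, 0.5, 1, 2, 3)


def fp_transform(x, power):
    """Apply one FP power to a positive value x."""
    return math.log(x) if power == 0 else x ** power


def residual_ss(z, y):
    """Residual sum of squares from a least-squares straight line of y on z."""
    n = len(z)
    mean_z, mean_y = sum(z) / n, sum(y) / n
    szz = sum((zi - mean_z) ** 2 for zi in z)
    szy = sum((zi - mean_z) * (yi - mean_y) for zi, yi in zip(z, y))
    slope = szy / szz
    intercept = mean_y - slope * mean_z
    return sum((yi - intercept - slope * zi) ** 2 for zi, yi in zip(z, y))


def best_fp1(x, y):
    """Return the best-fitting FP1 power and the RSS for every power."""
    rss = {p: residual_ss([fp_transform(xi, p) for xi in x], y) for p in FP_POWERS}
    return min(rss, key=rss.get), rss


if __name__ == "__main__":
    rng = random.Random(0)
    # Simulated marker with a logarithmic effect on the outcome.
    marker = [rng.uniform(0.5, 10) for _ in range(300)]
    outcome = [2 * math.log(x) + rng.gauss(0, 0.2) for x in marker]
    power, rss = best_fp1(marker, outcome)
    print("selected power:", power)
    print("RSS linear (power 1):", round(rss[1], 1), "| RSS selected:", round(rss[power], 1))
```

Power 1 corresponds to the usual linear assumption, so comparing its fit with that of the selected power is a simple check for nonlinearity. FP transformations require positive marker values.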

Spline functions are another approach to investigate the functional relationship of a continuous marker [101]. They are extremely flexible, but no procedure for simultaneously selecting variables and functional forms has found wide acceptance. Furthermore, even for a univariable spline model, reporting is usually restricted to the plot of the function because presentation of the parameter estimates is too complicated.

When the full information from continuous variables is used in the analysis, the results can be presented in categories to allow them to be used for tasks such as decision making.

Sample size requirements will differ depending on the goal of the study and stage of development of the marker. For markers early in the development process, investigators may be most interested in detecting large effects unadjusted for other variables and may be willing to accept higher chances of false positive findings (that is, a higher type I error) to avoid missing interesting marker effects. Targeting larger effect sizes and allowing higher error rates will result in a smaller required sample size. As a prognostic marker advances in the development process, it will typically be studied in the context of regression models containing other clinically relevant variables, as discussed in Item 10d. These situations will require larger sample sizes to account for the diminished size of marker effects adjusted for other (potentially correlated) variables and to offer some stability even when multiple variables will be examined and model selection methods will be used.

When the goal is to identify the most relevant variables in a model, various authors have suggested that at least 10 to 25 events are required for each of the potential prognostic variables to be investigated [82]–[85]. Sometimes the primary focus is estimation of the marker effect after adjustment for a set of standard variables, so correctly identifying which of the other variables are really important contributors to the model is of less concern. In this situation, sample size need not be as large as the 10 to 25 events per variable rule would recommend [86] and other sample size calculation methods that appropriately account for correlation of the marker with the other variables are available [78],[87]. Required sample sizes are substantially larger if interactions are investigated. For example, an interaction between a marker and a treatment indicator may be examined to assess whether a marker is predictive for treatment benefit (see Box 3).
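The events-per-variable guidance is simple arithmetic, as the following sketch shows. The helper names and the example of 120 observed events are assumptions for illustration only; the thresholds of 10 and 25 are the bounds of the range quoted above.

```python
def events_per_variable(n_events, n_candidate_variables):
    """Observed events divided by the number of candidate variables."""
    return n_events / n_candidate_variables


def max_candidate_variables(n_events, min_epv=10):
    """Largest number of candidate variables compatible with `min_epv`."""
    return n_events // min_epv


if __name__ == "__main__":
    n_events = 120  # hypothetical number of observed events, not patients
    for min_epv in (10, 25):
        print("EPV >=", min_epv, "-> at most",
              max_candidate_variables(n_events, min_epv), "candidate variables")
    print("EPV with 8 candidates:", events_per_variable(n_events, 8))
```

The count of candidate variables refers to all variables examined during model building, not only those retained in the final model.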

Several studies have noted the generally small sample size of published studies of prognostic markers. In a review of lung cancer prognostic marker studies, the median number of patients per study was 120 [88], while three quarters of studies in a review of osteosarcoma prognostic marker literature included fewer than 100 patients [89]. In a systematic review of tumor markers for neuroblastoma, 122 (38%) of 318 eligible reports were excluded because the sample size was 25 or lower [90]. As mentioned above, the number of events is a more relevant determinant of power of a study, and it is usually much smaller and often not even reported (see Item 12).

Twenty meta-analyses that included 331 cancer prognostic studies published between 1987 and 2005 were assessed to determine the quality of reporting for the included studies [18]. Only three (0.9%) of the 331 studies reported that a power calculation had been performed to determine sample size.

Statistical Analysis Methods

Item 10

Specify all statistical methods, including details of any variable selection procedures and other model-building issues, how model assumptions were verified, and how missing data were handled.

After some broad introductory observations about statistical analyses, we consider this key item under eight subheadings.

All the statistical methods used in the analysis should be reported. A sound general principle is to ‘describe statistical methods with enough detail to enable a knowledgeable reader with access to the original data to verify the reported results’ [91]. It is additionally valuable if the reader can also understand the reasons for the approaches taken.

Moreover, for prognostic marker studies there are many possible analysis strategies, and choices are made at each step of the analysis. If many different analyses are performed and only those with the best results are reported, this can lead to very misleading inferences. It is therefore also essential to give a broad, comprehensive view of the range of analyses that have been undertaken in the study (see also the REMARK profile in Item 12). Details can be given in supplementary material if necessary because of publication length limitations.