-

Články

Top novinky

Reklama- Vzdělávání

- Časopisy

Top články

Nové číslo

- Témata

Top novinky

Reklama- Videa

- Podcasty

Nové podcasty

Reklama- Kariéra

Doporučené pozice

Reklama- Praxe

Top novinky

ReklamaGlobal Genetic Variations Predict Brain Response to Faces

We measured brain response to facial expressions in a large sample of typically developing adolescents (n = 1,620) and assessed “heritability” of the response using common genetic variations across the genome. In a subset of brain regions, we explained 40–50% of phenotypic variance by genetic variance. These brain regions appear to differ from the rest of the face network in the degree of inter-individual variations in their functional connectivity. We propose that these regions, including the prefrontal and premotor cortex, represent “Optional” part of the network co-opted by its “Obligatory” members, including the posterior part of the superior temporal sulcus, fusiform face area and the lateral occipital cortex, concerned with processing complex visual stimuli.

Published in the journal: . PLoS Genet 10(8): e32767. doi:10.1371/journal.pgen.1004523

Category: Research Article

doi: https://doi.org/10.1371/journal.pgen.1004523Summary

We measured brain response to facial expressions in a large sample of typically developing adolescents (n = 1,620) and assessed “heritability” of the response using common genetic variations across the genome. In a subset of brain regions, we explained 40–50% of phenotypic variance by genetic variance. These brain regions appear to differ from the rest of the face network in the degree of inter-individual variations in their functional connectivity. We propose that these regions, including the prefrontal and premotor cortex, represent “Optional” part of the network co-opted by its “Obligatory” members, including the posterior part of the superior temporal sulcus, fusiform face area and the lateral occipital cortex, concerned with processing complex visual stimuli.

Introduction

Interactions with peers are of high relevance to our mental health. Patients with various psychological disorders show impairments in face perception and emotion recognition [1]–[4]. Similarly, differential neural responses to faces have been reported in various psychological disorders including depression [5], psychopathy and/or aggressive tendencies [6], autism [7], and schizophrenia [8].

Our ability to process faces is modulated by both environment and genes. Using a twin design, Zhu and colleagues observed that inter-individual variations in face perception are heritable, with the genetic component as high as 50% in adolescents performing various face tasks [9]. Although the key elements of the neural network underlying face processing are well known [10], whether or not brain response to facial expressions show comparable levels of heritability is unknown.

Here we address this question using genomic-relationship-matrix restricted maximum likelihood (GREML), applied using Genome-wide Complex Trait Analysis (GCTA) software [11] to a dataset of functional magnetic resonance images (fMRI) obtained in over 1,600 typically developing adolescents while they were observing videoclips of ambiguous or angry facial expressions [The IMAGEN Study; 12]. The GREML approach allows one to estimate how much phenotypic variance is attributable to the genetic variance captured by all common genetic variations (single nucleotide polymorphisms; SNPs) assayed in a typical Genome Wide Association Study (GWAS). We will ask here whether “heritability” of the response to facial expressions – as estimated using the GREML approach – varies across these regions. We will then examine possible reasons for such regional variations.

Results

The GREML-based metrics were calculated from a total of 1,620 unrelated adolescents (age M(SD) = 14.4(0.39) range 12.7–16.3 years, n = 879 male, n = 945 female) with complete, quality controlled fMRI and genomic data (511,089 SNPs).

In fMRI, brain response to a stimulus is inferred from the variations in hemodynamics detected as the blood oxygenation-level dependent (BOLD) signal on T2*-weighted MR images. This signal relies on the fact that brain activity is associated with an oversupply of oxygenated blood to the brain region engaged by the stimulus; consequently, small veins that drain this region contain some of the unused oxygenated blood. Thus, the BOLD signal reflects the proportion of oxygenated and de-oxygenated blood in a given brain region at a given moment; most likely, this hemodynamic signal is proportional to the local field potentials generated by (excitatory) inputs.

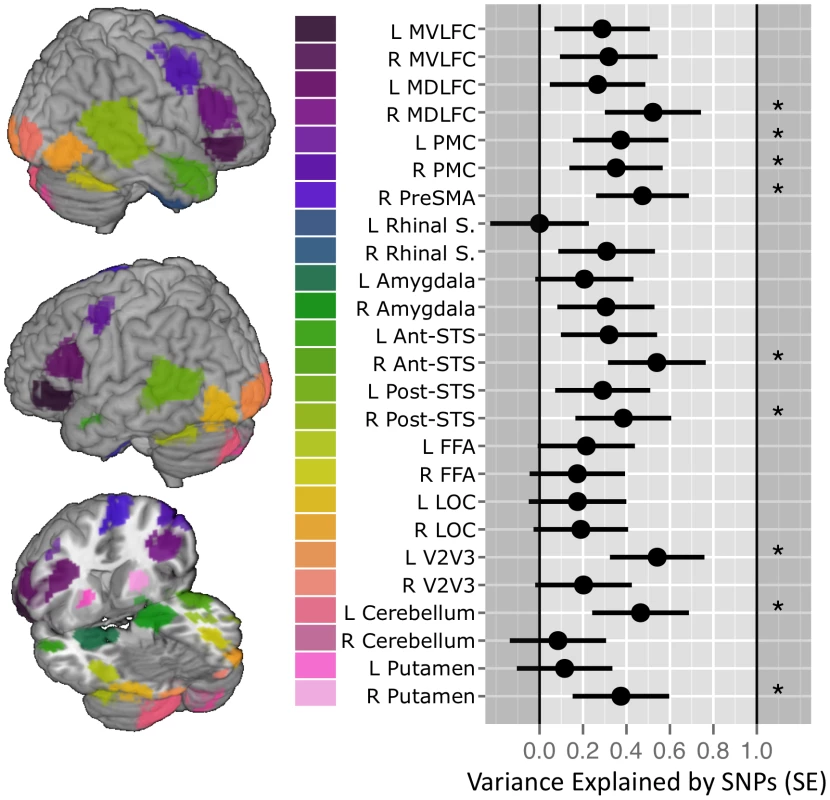

Here, summary measures for the BOLD response (%BSC) were calculated for two face viewing conditions (Ambiguous movements and Angry Expressions), each compared with a non-biological motion control condition; this was done for 25 brain regions of interest (ROIs), as defined previously using a probabilistic map of the brain response to facial expressions [13]. GREML-based estimates of “heritability” were calculated using the GCTA package [13][11]. We observed significant estimates of “heritability” for the Ambiguous vs. Control contrast %BSC in 9 out of these 25 ROIs (Fig. 1, Supplementary Table S1). No significant estimates were observed for any ROI in the Angry vs. Control contrast (Supplementary Table S2). By chance we would expect 25*0.05 = 1.25 false positives when examining 25 ROIs (in each contrast), or 2.5 when examining 50 ROIs (both contrasts combined) at the α = 0.05 level. As we found 9 ROIs with P<0.05 this appears to be evidence against the null hypothesis. To provide a P-value for this count-rate approach, we conducted a Monte Carlo simulation for each contrast using the observed correlation matrix (see Supplementary Figure S1 for the phenotypic correlation matrix between all 25 ROIs) [14]. Using 50,000 realizations, we simulated the null-hypothesis test statistics for each ROI (using correlated outcomes) and tabulated the distribution of the number of P-values significant at 0.05; this empirical distribution can be used to compute P-values for this count statistic. For the Ambiguous Facial Expressions contrast, this simulation confirmed that we could reject the null hypothesis of observing 9 significant tests by chance at P value of 0.03. For Angry Facial Expressions contrast, the P-value must be 1.0 for a count of 0 significant tests.

Fig. 1. SNP-based estimates of heritability in the brain response to ambiguous faces.

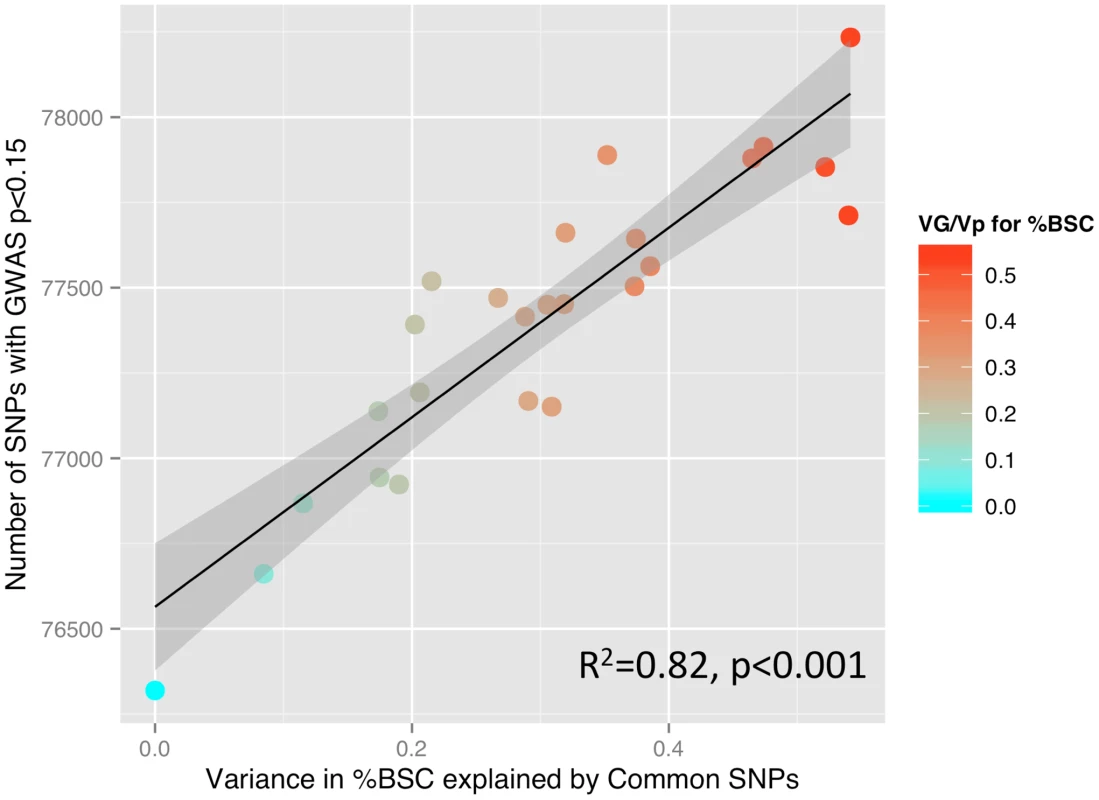

(Left) Locations of the 25 functional Regions of Interest (ROI) defined for the Dynamic Ambiguous Face vs. Control contrast [13]. (Right) Proportion of variance in mean percent BOLD signal change (%BCS) explained by common genetic variance for each ROI when viewing facial expressions in 1,620 unrelated adolescents. Error bars indicate the standard Error of the estimate. Stars indicate those estimates significant at an alpha 0.05 (uncorrected). Vertical gridlines are at intervals of 0.2. Abbreviations: Mid-ventrolateral frontal cortex (MVLFC); Mid-dorsolateral frontal cortex (MDLFC); premotor cortex (PMC), pre supplementary motor area (PreSMA); superior temporal sulcus (STS); fusiform face area (FFA); lateral occipital cortex (LOC); left (L); right (R). SNP, single nucleotide polymorphisms. To illustrate the relationship between the GREML-based estimates of heritability (VG/Vp) and the number of SNPs with p values lower than a certain threshold, we have calculated Pearson's correlation coefficients between these two measures across 25 ROIs, as done by Yang and colleagues in their GREML-based study of 47 different traits [15]. We obtained the following results: p<0.001: R2 = 0.08; p<0.01: R2 = 0.47; p<0.05: R2 = 0.54; p<0.1: R2 = 0.74; p<0.15: R2 = 0.82; p<0.2: R2 = 0.76; p<0.25: R2 = 0.74; and p<0.3: R2 = 0.64. In Figure 2, we plot the number of SNPs with p<0.15 and the VG/Vp values across the 25 ROIs.

Fig. 2. SNP-based estimates of heritability and the number of SNPs detected in a GWAS.

Relationship between GREML-based heritability estimates (Genetic Variance/Phenotypic Variance) and the number of single nucleotide polymorphisms (SNPs) showing a significant relationship with percent BOLD signal change (%BSC) at a threshold of p<0.15 (see the Results section for rationale). P values for this analysis were obtained using linear regression. Given the high heritability of general intelligence [16], we have examined the possibility that intelligence correlates with the inter-individual variations in the brain response to facial expressions in the Ambiguous condition. In a subset of 1,772 individuals with available scores on four subtests of the Wechsler Intelligence Scale for Children – IV (similarities, vocabulary, block design and matrix reasoning), we found no correlation between these scores and the mean %BSC across all ROIs (p>0.3) or between the scores and the mean %BCS in the Optional (p>0.2) and Obligatory (p>0.4) networks. The lack of the relationship between general intelligence and %BSC suggests that the former does not contribute to the above heritability estimates of the brain response to the ambiguous facial expressions.

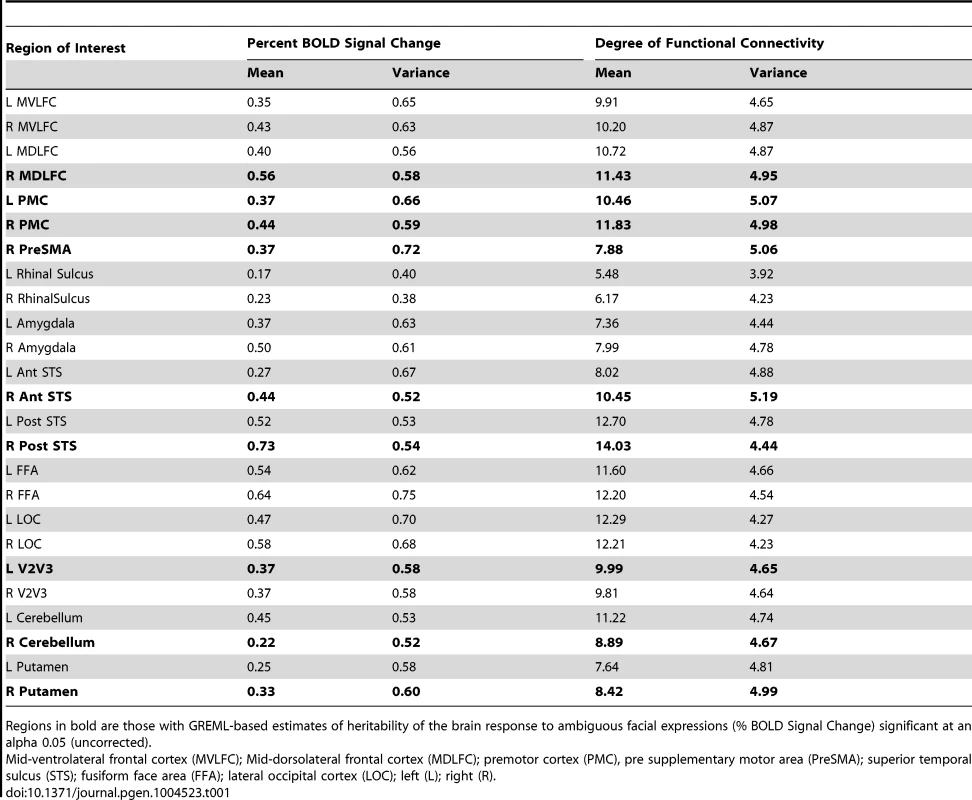

Next, we asked whether the above GREML-based estimates of heritability reflect any properties of the brain response across the examined ROIs. For example, are the ROIs with stronger response to facial expressions more heritable? Table 1 provides the population means and standard deviations for %BSC for all ROIs in the ambiguous contrast. Neither the population means (r = −0.28, p = 0.18) nor the population variance (r = 0.07, p = 0.73) of %BSC values across the 25 ROIs predicts the GREML-based (Genetic Variance [VG]/Phenotypic Variance [Vp]) estimates of heritability.

Tab. 1. Population mean (Mean) and population variance (Standard deviation, SD) for Percent BOLD Signal Change (Ambiguous Facial expressions vs. Control Stimuli) and the Degree of Functional Connectivity (number of regions correlated with an r>0.3).

Regions in bold are those with GREML-based estimates of heritability of the brain response to ambiguous facial expressions (% BOLD Signal Change) significant at an alpha 0.05 (uncorrected). The brain regions considered here may be viewed as nodes of a “face” network. The strength of each region's contribution to this network may differ across regions (ROIs) and across individuals. To quantify this phenomenon, we extracted mean time-courses in the BOLD signal from all ROIs in each participant and used these to calculate matrices of functional connectivity for all participants. From these matrices, we estimated the number of connections of a given region with the other members of the face network using the graph-theory metric of nodal “degree” [17].

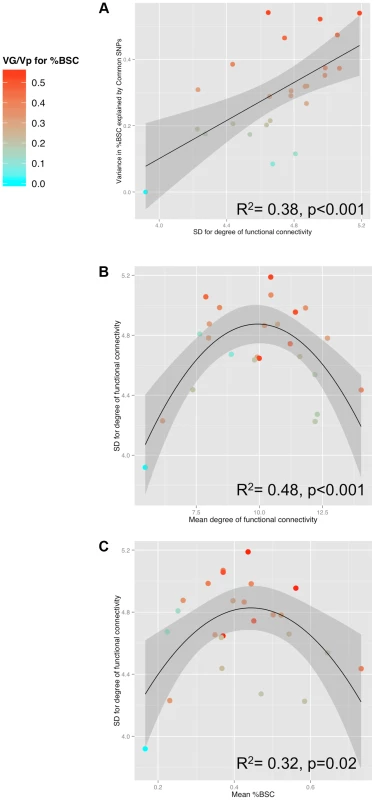

Table 1 provides the population means and standard deviations for the nodal degree for all ROIs (Ambiguous Facial expressions). We then examined whether differences in this measure of functional connectivity across the 25 ROIs constituting the face network predict their GREML-based estimates of heritability (VG/Vp estimates). This is the case: the population (inter-individual) variance in the nodal degree predicts strongly the “heritability” (R2 = 0.38, p<0.001, Fig. 3a). Furthermore, the population variance in the nodal degree shows an inverse U-shaped relationship with the mean nodal degree (2nd order polynomial fit: R2 = 0.48, F(2,22) = 10.32, p<0.001, Fig. 3b) and the mean %BSC (2nd order polynomial fit: R2 = 0.32, F(2,22) = 5.07 p = 0.02, Fig. 3c). Similarity of this inverse U-shaped relationship for the mean nodal degree and the mean %BSC is not surprising given a strong correlation between these two measures (r = 0.84, p<0.001). Note, however, that the population means of nodal degree do not predict their GREML-based estimates of heritability across the 25 brain regions (R2 = 0.06, p = 0.23). This is likely related to the fact that the mean nodal degree shows an inverted-U relationship with the population variance of this measure across these regions (Fig. 3b). Finally, we have repeated these analyses for the Angry Facial expressions; the only significant relationship observed in this condition was that between the population variance and the population mean in nodal degree (Supplementary Figure S2). Population means and standard deviations for %BSC and nodal degree for all ROIs of the Angry condition are given in Supplemental Table S3.

Fig. 3. Relationships between SNP-based estimates of heritability, population variance in functional connectivity and the BOLD response.

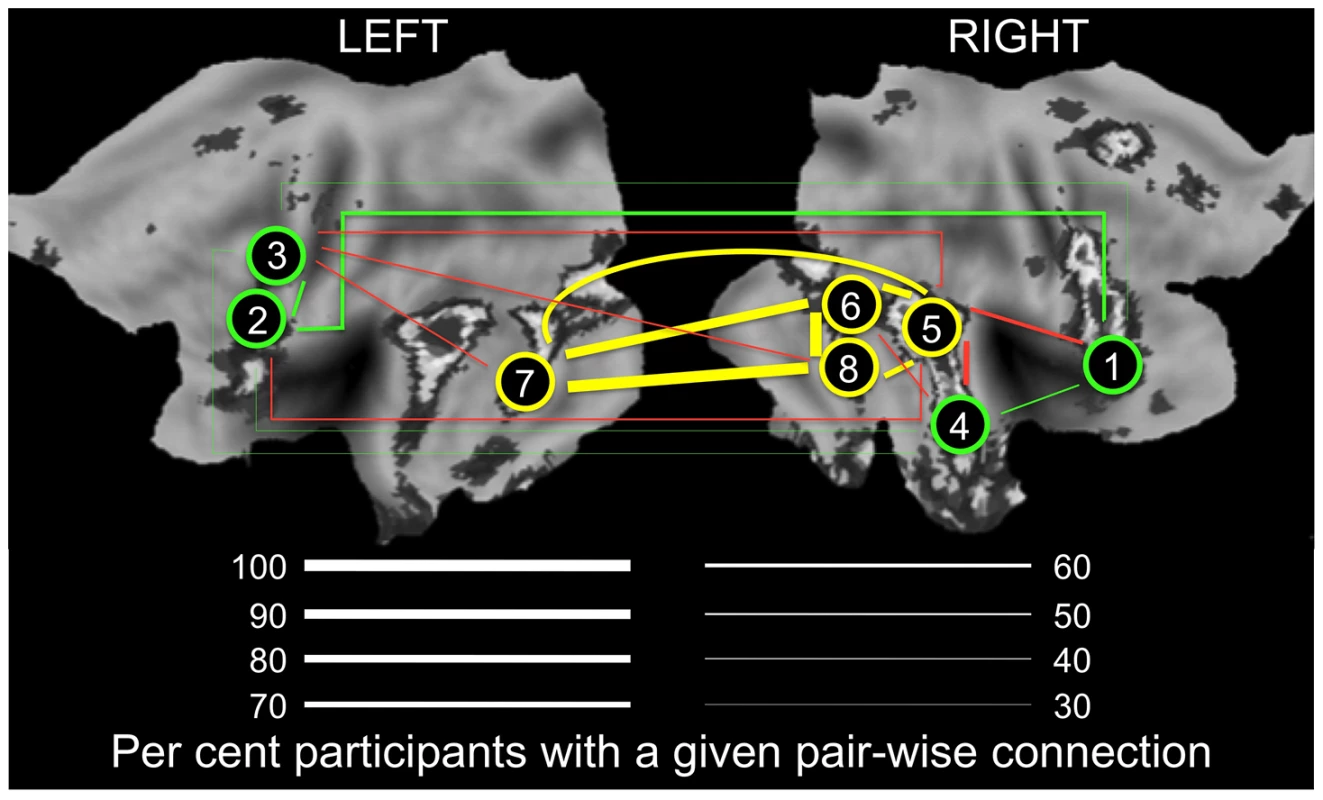

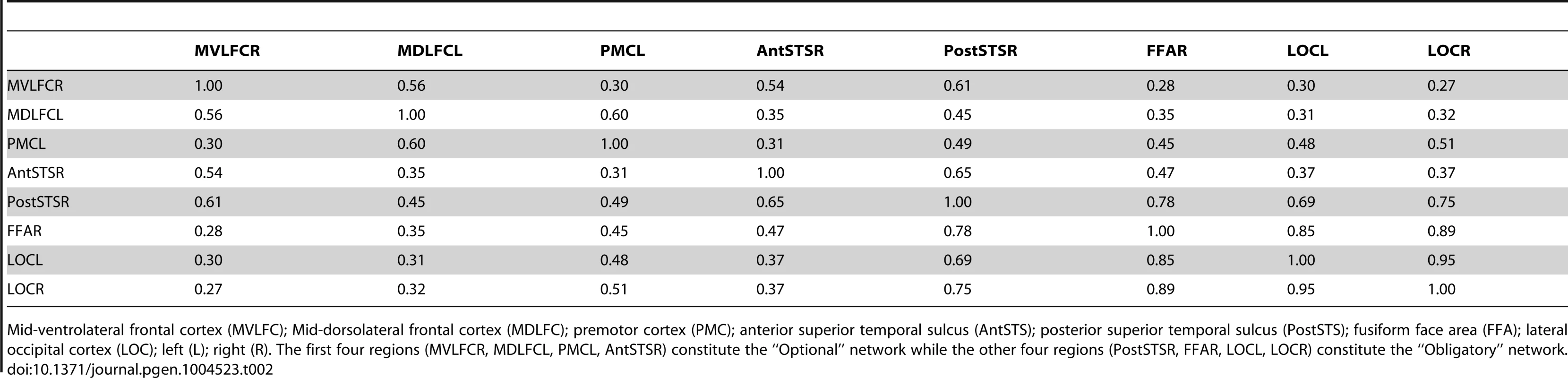

A) Relationship between GREML-based heritability estimates (Genetic Variance/Phenotypic Variance) and the population variance (standard deviation, SD) of functional connectivity (node degree) across 25 ROIs for the Ambiguous face viewing contrast. B) Relationship between the population variance (SD) and the population mean of degree across the 25 ROIs. C) Relationship between population mean of the brain response (percent BOLD signal change, %BSC) and the population variance (SD) of degree across the 25 ROIs. For all three plots, colour is scaled according to the GREML results for %BSC (cyan for low values and red for high values). VG, Genetic Variance; Vp, Phenotypic Variance. To illustrate the relationship between population variance and mean in the number of connections (nodal degree) in the Ambiguous contrast, we selected two groups of ROIs that differ in the combination of these two measures of degree: (1) ROIs with the highest variance and an intermediate mean; and (2) ROIs with the highest mean and the lowest variance. As shown in Figure 4 (and Table 2), proportion of individuals with connections between a given pair of ROIs (i.e., pair-wise correlations with r >0.3) is intermediate within the first subnetwork (30 to 60% of participants) and very high within the second subnetwork (70 to 96% of participants). Importantly, the posterior STS (region #5 in Fig. 4) appears to act as a “bridge” between the two subnetworks: it is the only member of the second subnetwork with connections to all four nodes of the first subnetwork in 50% (or more) of participants. We then examined genetic covariance within and between the two subnetworks, which we term “Optional” and “Obligatory” (see Discussion for comparison with the “Extended” and “Core” systems of Haxby and colleagues [10]). Using a bivariate GCTA approach [18], we observed significant (p<0.05) genetic covariances in three pairs of ROIs: [R MVLFC - R Ant STS], [R MVLFC – R Post STS] and [R AntSTS – R Post STS]. Furthermore, we found marginal (p<0.1) covariances in four additional pairs of ROIs, three within the “Optional” network and one between the “Optional” and “Obligatory” network (L PMC - R Post STS). The full genetic-covariance matrix for the eight ROIs constituting the two subnetworks is provided in Supplemental Table S4.

Fig. 4. Connectivity in the “Obligatory” (yellow) and “Optional” (green) nodes of the face network.

Thickness of lines indicates proportion of participants (%) with a given pair-wise connection, defined as a pair-wise correlation r>0.3. Yellow and green lines denote connections within the “Obligatory” and “Optional” networks, respectively. Red lines denote connections across the two subnetworks; for clarity, only connections present in 50% or more participants are shown. For all pair-wise values, see Supplementary Table S2. 1, mid-ventrolateral frontal cortex (right); 2, mid-dorsolateral frontal cortex (left); 3, premotor cortex (left); 4, anterior portion of the superior temporal sulcus (right); 5, posterior portion of the superior temporal sulcus (right); 6, fusiform face area (right); 7, lateral occipital cortex (left); 8, lateral occipital cortex (right). LEFT, the left hemisphere; RIGHT, the right hemisphere. The flat maps of the cerebral cortex contain the probability map of the face network adapted from Tahmasebi et al. [13]. Tab. 2. Proportion of individuals with connections between a given pair of regions of interest.

Mid-ventrolateral frontal cortex (MVLFC); Mid-dorsolateral frontal cortex (MDLFC); premotor cortex (PMC); anterior superior temporal sulcus (AntSTS); posterior superior temporal sulcus (PostSTS); fusiform face area (FFA); lateral occipital cortex (LOC); left (L); right (R). The first four regions (MVLFCR, MDLFCL, PMCL, AntSTSR) constitute the “Optional” network while the other four regions (PostSTSR, FFAR, LOCL, LOCR) constitute the “Obligatory” network. Discussion

Using the GREML approach, we show that the aggregate of common genetic variations across the entire genome predicts brain response to facial expressions. This is the case, however, only for some brain regions when viewing ambiguous facial expressions. The fact that inter-individual variations in certain brain responses to certain faces can be predicted by genetic variations (across the entire genome) is in keeping with other evidence supporting heritability of many behavioral aspects of face perception. Indeed, prosopagnosia - an inability of recognize faces - is transmitted in some family pedigrees carrying an autosomal dominant pattern of transmission [19]. Using a twin design in adults, Wilmer et al. [20] reported that facial recognition has a strong heritable component. Greenwood et al. [21] found that memory for faces, as well as emotional recognition, were heritable phenotypes in a study of adult patients with schizophrenia and their non-affected relatives. Most relevant to the current study, Zhu et al. [9] showed that several aspects of face perception, including facial recognition and the face-inversion effect, were heritable and that the heritability increased from childhood to adolescence.

Heritability of the brain response to faces has been estimated in a couple of twin studies. Thus, Anokhin et al. [22] used event-related potentials to changes in emotional expression in children and reported heritability values varying between 42 and 64% [22]. Moreover, Polk et al. [23] showed that fMRI responses to faces in the ventral visual stream were more similar within pairs of adult monozygotic (13 pairs) than dizygotic (11 pairs) twins; sample sizes were too small to calculate heritability in this study [23].

In the ambiguous viewing condition, we observed that brain regions with the most population variance in their contribution to the face-processing network, as indexed by the “nodal degree” metric, were the most heritable. In other words, the amount of population variance in functional connectivity of a given region was related to the probability of explaining this region's response (to faces) by global genetic variations. Furthermore, we also observed that – across the 25 ROIs - brain regions with highest estimates of heritability (and highest population variance in connectivity) appear to coincide with ROIs characterized by intermediate values of their response to facial expressions (and their connectivity). As illustrated in Figure 4, the combination of these two properties (i.e., population mean and variance in nodal degree) sets apart two “subnetworks” we term “Obligatory” and “Optional” to denote their hypothetical role in processing faces. The proposed model builds on the distinction between the “Core” and “Extended” neural systems for face perception, as outlined originally by Haxby and colleagues [10]. In their model, the “Core” system consists of the inferior occipital gyri, fusiform gyri and a set of cortical areas along the superior temporal sulcus; this system includes all “Obligatory” regions identified here. The “Extended” system encompasses the anterior temporal cortex (e.g., face identity) and amygdala (emotion), as well as the auditory cortex (speech-related mouth movements) and cortical areas in the intra-parietal sulcus (spatial attention); with the exception of the anterior temporal cortex, this system is different from the “Optional” system consisting primarily of fronto-cortical regions. Nonetheless, both the “Extended” system of Haxby and colleagues and the Optional one identified here are viewed as being “…comprised of regions from neural systems for other cognitive functions that can be recruited to act in concert with the regions in the core system to extract meaning from faces” [10].

We suggest that the “Obligatory” regions are brought online in most participants when viewing human facial expressions; hence the high mean number of connections but low inter-individual variance in connectivity. These regions coincide with visual areas located in the occipital and temporal cortex, known to respond robustly to complex visual stimuli in general and human faces in particular. On the other hand, the “Optional” regions are co-opted to the face network by some but not all participants (hence the high population variance in their connectivity). Given the putative role of these regions in different aspects of working memory (mid-dorsolateral and mid-ventrolateral prefrontal cortex) and motor resonance (premotor cortex), we speculate that individuals co-opting these regions while viewing faces do so in the context of their cognitive evaluation engaged spontaneously (no instructions were given to this effect). It is of interest to note that a number of “Optional” regions show shared genetic covariance with each other (albeit at marginal significance levels); this is not the case for any pair of “Obligatory” regions.

Finally, it appears that such a recruitment of the “Optional” regions may related to their connectivity with the posterior part of the STS (region #5 in Fig. 4), the only “Obligatory” region with a high number of connections to all four “Optional” regions. Of course, the posterior STS is an ideal candidate for bridging these two subnetworks, given its well-established role in extracting and processing social signals in non-biological motion [24]. This view is supported by our observation that the posterior STS is the only “Obligatory” region that shows genetic covariance with a number of “Optional” regions.

Overall, it is possible that regions with either low (floor effect) or high (ceiling effect) engagement by the stimulus (dynamic facial expressions) do not provide large enough range of (phenotypic) values across individuals and, in turn, are less likely to capture co-variations with genetic variance. Note that given the modest sample size (1,620 unrelated individuals), we are powered here to detect only relatively high values of heritability [25]. Recent studies suggest that more than half of narrow-sense heritability (h2), as estimated through studies of related individuals, can be explained by common genetic variations assessed with SNPs included in most DNA microarrays [26]. Furthermore, the degree of GREML-based estimates of heritability predicts strongly the number of SNPs that reach nominal significance in a GWAS across 47 quantitative traits [15]. We have observed similar relationship for the brain response to faces across the 25 brain regions.

The low GREML-based estimates of heritability in the Angry condition were unexpected but clear cut: VG/Vp values were close to 0 in 19/25 ROIs, with values in the remaining ROIs varying between 0.12 and 0.28 (Supplemental Table S2). As explained in the “Limitations and Significance” section, we have power to detect only relatively high values of “heritability”, albeit with fairly large confidence intervals. If true heritability of the brain response to Angry Facial expressions is low (this is unknown), we are underpowered to estimate such low values using the GREML-approach in the current study. An alternative possibility is that, in general, neural processing of angry facial expressions is as heritable as that of any other facial expressions but our paradigm fails to elicit an “adequate” brain response. Given our observation of the strong relationship between GREML-based estimates of heritability (VG/Vp) for Ambiguous Facial expressions and the population variance in functional connectivity (Figure 2), it is important to note that the latter is lower in the Angry (vs. Ambiguous) contrast. Furthermore, unlike the Ambiguous contrast (Figure 3c), there is no relationship between the mean BOLD response and the population variance in functional connectivity across the 25 ROIs in the Angry contrast (Supplemental Figure S2c). Thus, it is possible that angry facial expressions did not engage the face network in the same manner as the ambiguous facial expressions, resulting in a suboptimal phenotype.

Limitations and Significance

The key limitation of the present report is sample size; with 1,620 unrelated individuals, we are at the lower limit of the GREML-based approach for estimating contributions of common SNPs to phenotypic variations. Therefore, we were able to detect only relatively high values of heritability; note that these estimates have fairly large confidence intervals (standard error [SE]; e.g., R-MDLFC: VG/Vp = 0.52±0.22) and must be therefore interpreted cautiously. Simulations conditional on empirical GWAS data are consistent with these observations; sample size of 1,999 individuals is adequate for estimating correctly high (h2 = 0.5) narrow-sense heritability [25]. The limited sample size also affected the significance values. Nonetheless, the uneven distribution of the nominally significant results between the Ambiguous (9/25) and Angry (0/25) speaks against a chance nature of these findings, as confirmed by the Monte-Carlo simulations.

The above sample-size limitation must be viewed in the context of the phenotype under study, however. The previous twin-based studies of fMRI-based phenotypes employed between 20 and 141 twin pairs, with heritability (a2) estimates varying widely between 0 and 65 [27]. A total of 333 related individuals were included in a pedigree-based study of heritability of resting-state fMRI; h2 for functional connectivity of the different components of so-called default-mode network varied between 10 (SE = 13) and 42 (SE = 17) [28]. Working with unrelated individuals, only a few other studies are acquiring functional brain phenotypes with a sample size comparable to the present report. For example, the Human Connectome Project plans to collect paradigm-based and resting-state fMRI datasets in 1,200 individuals [29]. In the Generation R cohort, scanning is under way to collected resting-state fMRI in up to 5,000 children (White, personal communication). Given the challenges related to test-retest reliability of fMRI data in general, and resting-state fMRI in particular, we suggest that the GREML approach provides an excellent test-bed for evaluating various approaches aimed at improving the fidelity of functional brain phenotypes. Such a GREML-based approach would be particularly powerful for fine-tuning functional phenotypes for meta-analyses of genome-wide association studies (similar to those carried out with structural brain phenotypes [30]), which require pooling of fMRI datasets (paradigm-based or resting) collected under varied conditions and on different scanners; GREML-based estimates of “heritability” would provide a useful metric for selecting appropriate post-processing steps and/or modifying inclusion criteria before launching the GWAS.

Overall, this report indicates that GREML-based estimates of heritability of the brain response to facial expressions vary across regions and paradigms, possibly as a function of inter-regional differences in the population variance of functional connectivity. As such, it demonstrates the usefulness of this approach in identifying functional phenotypes with properties suitable for genetic studies.

Materials and Methods

Participants

As part of the IMAGEN project [12], 2,000 adolescents (∼14 years of age) were recruited through local high schools in eight European cities across four countries: France (Paris), Germany (Mannheim, Hamburg, Dresden and Berlin), Ireland (Dublin) and United Kingdom (London and Nottingham). Local ethics boards approved the study protocol: Comité de protection des personnes Ile de France (CPP IDF VII); Ethics Committee of the German Psychological Society (DPG); Hamburg Chamber of Physicians Ethics Board (Hamburg Medical Association); Medical Ethics Commission of the Faculty of Clinical Medicine Mannheim; Medical Faculty Carl Gustav Carus Ethics Commission, Technical University Dresden; Nottingham University Medical School Research Ethics Committee; Psychiatry, Nursing & Midwifery Research Ethics Committee, King's College London; Ruprecht-Karls-University of Heidelberg; and School of Psychology Ethics Committee, Trinity College Dublin. The parents and adolescents provided written informed consent and assent, respectively.

MRI Acquisition and Initial Quality Control

Scanning was performed on 3 Tesla scanners from four different manufacturers (Siemens: 4 sites, Philips: 2 sites, General Electric: 1 site, and Bruker: 1 site). High-resolution T1-weighted anatomical images were acquired using 3D Magnetization Prepared Rapid Acquisition Gradient Echo (MPRAGE) sequence (TR = 2,300 ms; TE = 2.8 ms; flip angle = 9°; voxel size: 1.1×1.1×1.1 mm3). Functional T2*-weighted images were acquired using Gradient-Echo Echo-Planar-Imaging (GE-EPI) sequences (field of view: 22 cm; pixel size: 3.4×3.4 mm2; slice thickness of 2.4 mm; slice gap 1.0 mm; effective final voxel size 3.4×3.4×3.4 mm3; TE = 30 ms and TR = 2,200 ms; flip angle = 75°).

During the fMRI session participants viewed short videoclips displaying ambiguous facial expressions (gestures such as nose twitching), angry facial expression or control stimuli (non-biological motion). The control stimuli were adapted from a study of Beauchamp and colleagues [31]. The face stimuli were created as follows. Eight actors (four females) were filmed for the face movements. They were instructed to express different emotions starting from a neutral point. We also extracted short video-clips from the periods when the actors were not expressing the emotions but were nonetheless moving their face (e.g. twitching their nose, opening their mouth, blinking their eyes). Twenty video-clips were selected for the angry and ambiguous face movements respectively. Four raters judged the intensity of each of emotion from those clips. The average rating for the angry face movements, on a scale of 1 (not angry at all) to 9 (very angry) was 7.94 (Standard Deviation [SD] = 0.77). The average rating for the ambiguous facial expressions was 2.18 (SD = 0.84) for anger, 2.97 (SD = 1.07) for sadness and 3.49 (S = 1.03) for happiness; combined across the three scales, the rating of ambiguous facial expressions was 2.92 (SD = 1.18). The control stimuli consisted of black-and-white concentric circles of various contrasts, expanding and contracting at various speeds, roughly matching the contrast and motion characteristics of the faces and hands clips [32]. We presented dynamic video clips of faces because, compared with static faces, they elicit more robust responses in brain regions critical for face processing, such as the fusiform gyrus and amygdala, and engage a more elaborate network for face processing, including regions in the frontal cortex and along the superior temporal sulcus [33]. The three viewing conditions were organized into 18-second blocks (5 Ambiguous, 5 Angry, 9 control) for a total of 160 EPI volumes in a single six-min fMRI run.

From 2,000 participants, a total of 1,926 completed both the Face paradigm and T1-weighted scan. Data from 79 participants were excluded due to excessive head movement during functional MR scanning (more than 2 mm in translation or 2 degrees in rotation errors in either direction), 8 participants were excluded due to unknown age, 5 participants had poor quality of fMRI data, and 1 participant was excluded because of abnormal ventricles. Scans from 1,831 participants were preprocessed using SPM8 toolbox (Statistical Parameter Mapping: Wellcome Department of Cognitive Neurology, London, UK) in MATLAB 7.0 (www.mathworks.com). Functional (EPI) images were motion-corrected with respect to the first volume. Subsequently, the EPI images were aligned to the corresponding high-resolution T1-weighted images (co-registration). The co-registered EPI images were transformed to the ICBM152 template space using the deformation parameters from the nonlinear registration of the corresponding structural image to the ICBM152 template. The nonlinear registration was achieved using the Unified Segmentation tool in SPM package. Further details of the pre-processing pipeline is provided in Tahmasebi et al. [13].

Face Network: Definition and Analysis

Regions of interest (ROIs) relevant for face processing were defined from a probabilistic map computed in a subsample (n = 1,110) of the IMAGEN dataset, as reported in Tahmasebi et al [13]. From this map, 25 ROIs were defined that are consistently (population probability >0.5) engaged during the ambiguous and angry face processing, relative to control (non-biological motion) condition. For each ROI, mean percent BOLD signal change (%BSC) for each ROI was extracted for all participants, as in Tahmasebi et al. [13], and analyzed as phenotypes of interest in GREML analyses. Values of %BSC were standardized (Z-Scored) for each acquisition site to account for scanner effects. Sex was added as a covariate.

The connectivity matrix for each face condition was calculated as follows. Nuisance covariates including white matter (WM) signals, and cerebrospinal fluid (CSF) signals were regressed out from the BOLD signals; WM and CSF voxels were identified by thresholding (at 90%) the WM and CSF tissue probabilistic maps from the ICBM152 standard template. For each ROI, the mean BOLD signal time-series was calculated by averaging the BOLD signal from all voxels constituting the ROI at every time point (160 time points in total). The BOLD time-series for each face condition were then realized by concatenating the mean-centered signal from the corresponding blocks (5 blocks per face condition and 9 blocks for control; each block consists of 8 time points), shifted by 2 TRs (4.4 s) to accommodate for the rise in the hemodynamic response. The correlation matrix was calculated between the time-series from every pair of the 25 ROIs. This yielded a 25-by-25 symmetric functional-connectivity matrix for each participant and face condition. We reduce these matrices into undirected graphs by thresholding each pair-wise correlation at r>0.3. This creates a graph (network) with ROI's as nodes and edges between them representing functional connections. Within each participant, we calculate node degree for each ROI (node) to summarize the graph. Node degree is simply the count of other ROI's in which the BOLD time series correlates (r>0.3) with the given ROI. This analysis was performed with the Brain Connectivity Toolbox [17].

Given the importance of measurement error in estimating heritability, we have evaluated reproducibility of the brain response to facial expressions by correlating – across the 25 ROIs - the %BSC values obtained in two randomly selected subsamples: Group A (434 males, 483 females) and Group B (448 males, 459 females). In the absence of test-retest reliability measurements, such a cross-group comparison provides an indirect index of measurement error. As shown in Supplemental Figure S3, variations of the %BSC across the 25 ROIs were highly predictable in Group B from measures obtained in Group A (R2 = 0.96).

Genotyping, Genomic-Relationship-Matrix REstricted Maximum Likelihood (GREML) and Genome-Wide Association Study (GWAS) Analyses

Whole genome data were acquired from 2,089 participants using Illumina Human610-Quad Beadchip and Illumina Human660-Quad Beadchip. Quality control of the genotypes was accomplished using Plink software [34]. Of the 588,875 SNPs overlapping present on both chips, a total of 42,506 single nucleotide polymorphisms (SNPs) were excluded for missingness of more than 5%, 15 individuals excluded for low genotyping rate (less than 97%), 16,385 SNPs were excluded for failing to reach Hardy-Weinberg equilibrium (p< = 0.0001), and 20,131 SNPs were excluded for low minor allele frequency (MAF<0.01).

In total, 511,089 SNPs were used to calculate genetic relationship matrices using GCTA (http://www.complextraitgenomics.com/software/gcta). We excluded adolescents with a genetic relationship >0.05 (i.e., more related than 2nd degree cousins) to remove the influence of potential shared environment effects or familial causal variants not captured by SNPs. We included the top 10 principal components of the identity-by-state matrix as a covariate in all analyses to control for population stratification in our cohort. For each ROI, we have calculated GREML-based estimates of “heritability”, defines as Genetic Variance [VG]/Phenotypic Variance [Vp], for the brain response to facial expressions (%BSC).

In order to examine the relationship between the VG/Vp estimates and the number of SNPs reaching a nominal level of significance [15] across the 25 ROIs, we have carried out Genome-wide Association Studies (GWAS) of %BSC using the same set of 1,620 unrelated adolescents. To do so, we used PLINK software [34]. Mean %BSC values where standardized (Z-Scores) in order to control for effects of Sex and Scanning Site. The top 10 principal components of the identity-by-state matrix as a covariate in all analyses to control for population stratification in our cohort.

Supporting Information

Zdroje

1. BourkeC, DouglasK, PorterR (2010) Processing of facial emotion expression in major depression: a review. Aust N Z J Psychiatry 44 : 681–696 doi:10.3109/00048674.2010.496359

2. Machado-de-SousaJP, ArraisKC, AlvesNT, ChagasMHN, de Meneses-GayaC, et al. (2010) Facial affect processing in social anxiety: tasks and stimuli. J Neurosci Methods 193 : 1–6 doi:10.1016/j.jneumeth.2010.08.013

3. MarwickK, HallJ (2008) Social cognition in schizophrenia: a review of face processing. Br Med Bull 88 : 43–58 doi:10.1093/bmb/ldn035

4. TohWL (2011) Current visual scanpath research: a review of investigations into the psychotic, anxiety, and mood disorders. Compr Psychiatry 52 : 567–579.

5. LeppänenJM (2006) Emotional information processing in mood disorders: a review of behavioral and neuroimaging findings. Curr Opin Psychiatry 19 : 34–39 doi:10.1097/01.yco.0000191500.46411.00

6. BlairRJR (2010) Neuroimaging of psychopathy and antisocial behavior: a targeted review. Curr Psychiatry Rep 12 : 76–82 doi:10.1007/s11920-009-0086-x

7. HarmsMB, MartinA, WallaceGL (2010) Facial emotion recognition in autism spectrum disorders: a review of behavioral and neuroimaging studies. Neuropsychol Rev 20 : 290–322 doi:10.1007/s11065-010-9138-6

8. TaylorSF, MacDonaldAW3rd Cognitive Neuroscience Treatment Research to Improve Cognition in Schizophrenia (2012) Brain mapping biomarkers of socio-emotional processing in schizophrenia. Schizophr Bull 38 : 73–80 doi:10.1093/schbul/sbr105

9. ZhuQ, SongY, HuS, LiX, TianM, et al. (2010) Heritability of the specific cognitive ability of face perception. Curr Biol CB 20 : 137–142 doi:10.1016/j.cub.2009.11.067

10. Haxby Hoffman, Gobbini (2000) The distributed human neural system for face perception. Trends Cogn Sci 4 : 223–233.

11. YangJ, LeeSH, GoddardME, VisscherPM (2011) GCTA: a tool for genome-wide complex trait analysis. Am J Hum Genet 88 : 76–82 doi:10.1016/j.ajhg.2010.11.011

12. SchumannG, LothE, BanaschewskiT, BarbotA, BarkerG, et al. (2010) The IMAGEN study: reinforcement-related behaviour in normal brain function and psychopathology. Mol Psychiatry 15 : 1128–1139 doi:10.1038/mp.2010.4

13. TahmasebiAM, ArtigesE, BanaschewskiT, BarkerGJ, BruehlR, et al. (2012) Creating probabilistic maps of the face network in the adolescent brain: a multicentre functional MRI study. Hum Brain Mapp 33 : 938–957 doi:10.1002/hbm.21261

14. Robert CP CG (2005) Monte Carlo Statistical Methods. 2nd ed. Springer.

15. YangJ, LeeT, KimJ, ChoM-C, HanB-G, et al. (2013) Ubiquitous polygenicity of human complex traits: genome-wide analysis of 49 traits in Koreans. PLoS Genet 9: e1003355 doi:10.1371/journal.pgen.1003355

16. DearyIJ, YangJ, DaviesG, HarrisSE, TenesaA, et al. (2012) Genetic contributions to stability and change in intelligence from childhood to old age. Nature 482 : 212–215 doi:10.1038/nature10781

17. RubinovM, SpornsO (2010) Complex network measures of brain connectivity: uses and interpretations. NeuroImage 52 : 1059–1069 doi:10.1016/j.neuroimage.2009.10.003

18. LeeSH, DeCandiaTR, RipkeS, YangJ, SullivanPF, et al. (2012) Estimating the proportion of variation in susceptibility to schizophrenia captured by common SNPs. Nat Genet 44 : 247–250 doi:10.1038/ng.1108

19. GrueterM, GrueterT, BellV, HorstJ, LaskowskiW, et al. (2007) Hereditary prosopagnosia: the first case series. Cortex J Devoted Study Nerv Syst Behav 43 : 734–749.

20. WilmerJB, GermineL, ChabrisCF, ChatterjeeG, WilliamsM, et al. (2010) Human face recognition ability is specific and highly heritable. Proc Natl Acad Sci 107 : 5238–5241 doi:10.1073/pnas.0913053107

21. GreenwoodTA, BraffDL, LightGA, et al. (2007) Initial heritability analyses of endophenotypic measures for schizophrenia: The consortium on the genetics of schizophrenia. Arch Gen Psychiatry 64 : 1242–1250 doi:10.1001/archpsyc.64.11.1242

22. AnokhinAP, GolosheykinS, HeathAC (2010) Heritability of individual differences in cortical processing of facial affect. Behav Genet 40 : 178–185 doi:10.1007/s10519-010-9337-1

23. PolkTA, ParkJ, SmithMR, ParkDC (2007) Nature versus nurture in ventral visual cortex: a functional magnetic resonance imaging study of twins. J Neurosci Off J Soc Neurosci 27 : 13921–13925 doi:10.1523/JNEUROSCI.4001-07.2007

24. Allison, Puce, McCarthy (2000) Social perception from visual cues: role of the STS region. Trends Cogn Sci 4 : 267–278.

25. ZaitlenN, KraftP (2012) Heritability in the genome-wide association era. Hum Genet 131 : 1655–1664 doi:10.1007/s00439-012-1199-6

26. ZaitlenN, KraftP, PattersonN, PasaniucB, BhatiaG, et al. (2013) Using extended genealogy to estimate components of heritability for 23 quantitative and dichotomous traits. PLoS Genet 9: e1003520 doi:10.1371/journal.pgen.1003520

27. BloklandGAM (2012) Genetic and Environmental Influences on Neuroimaging Phenotypes: A Meta-Analytical Perspective on Twin Imaging Studies. Twin Res Hum Genet 15 : 351–371.

28. GlahnDC, WinklerAM, KochunovP, AlmasyL, DuggiralaR, et al. (2010) Genetic control over the resting brain. Proc Natl Acad Sci U A 107 : 1223–1228 doi:10.1073/pnas.0909969107

29. Van EssenDC, SmithSM, BarchDM, BehrensTE, YacoubE, et al. (2013) The WU-Minn Human Connectome Project: an overview. Neuroimage 80 : 62–79 doi:10.1016/j.neuroimage.2013.05.041

30. SteinJL, MedlandSE, VasquezAA, HibarDP, SenstadRE, et al. (2012) Identification of common variants associated with human hippocampal and intracranial volumes. Nat Genet 44 : 552–561 doi:10.1038/ng.2250

31. BeauchampMS, LeeKE, HaxbyJV, MartinA (2003) fMRI responses to video and point-light displays of moving humans and manipulable objects. J Cogn Neurosci 15 : 991–1001.

32. GrosbrasM-H, PausT (2006) Brain Networks Involved in Viewing Angry Hands or Faces. Cereb Cortex 16 : 1087–1096 doi:10.1093/cercor/bhj050

33. FoxCJ (2009) Defining the face processing network: Optimization of the functional localizer in fMRI. Hum Brain Mapp 30 : 1637–1651.

34. PurcellS, NealeB, Todd-BrownK, ThomasL, FerreiraMAR, et al. (2007) PLINK: a tool set for whole-genome association and population-based linkage analyses. Am J Hum Genet 81 : 559–575 doi:10.1086/519795

Štítky

Genetika Reprodukční medicína

Článek vyšel v časopisePLOS Genetics

Nejčtenější tento týden

2014 Číslo 8

-

Všechny články tohoto čísla

- DNA Double Strand Break Repair in Mitosis Is Suppressed by Phosphorylation of XRCC4

- Inference of Transposable Element Ancestry

- The Population Genetics of Evolutionary Rescue

- Retinoic Acid Activates Two Pathways Required for Meiosis in Mice

- Pooled Segregant Sequencing Reveals Genetic Determinants of Yeast Pseudohyphal Growth

- Comprehensive Identification of Single Nucleotide Polymorphisms Associated with Beta-lactam Resistance within Pneumococcal Mosaic Genes

- SMA-Causing Missense Mutations in Display a Wide Range of Phenotypes When Modeled in

- Branch Migration Prevents DNA Loss during Double-Strand Break Repair

- Transcriptome Sequencing from Diverse Human Populations Reveals Differentiated Regulatory Architecture

- Genetic Deletion of SEPT7 Reveals a Cell Type-Specific Role of Septins in Microtubule Destabilization for the Completion of Cytokinesis

- Tethering Sister Centromeres to Each Other Suggests the Spindle Checkpoint Detects Stretch within the Kinetochore

- Global Genetic Variations Predict Brain Response to Faces

- Demography and the Age of Rare Variants

- The Response to High CO Levels Requires the Neuropeptide Secretion Component HID-1 to Promote Pumping Inhibition

- Sp6 and Sp8 Transcription Factors Control AER Formation and Dorsal-Ventral Patterning in Limb Development

- The Groucho Co-repressor Is Primarily Recruited to Local Target Sites in Active Chromatin to Attenuate Transcription

- A Transposable Element Insertion Confers Xenobiotic Resistance in Drosophila

- The Genomic Architecture of Population Divergence between Subspecies of the European Rabbit

- Human Social Genomics

- Gene Expansion Shapes Genome Architecture in the Human Pathogen : An Evolutionary Genomics Analysis in the Ancient Terrestrial Mucorales (Mucoromycotina)

- Canonical Non-Homologous End Joining in Mitosis Induces Genome Instability and Is Suppressed by M-phase-Specific Phosphorylation of XRCC4

- Roles of Type 1A Topoisomerases in Genome Maintenance in

- The TRIM-NHL Protein LIN-41 Controls the Onset of Developmental Plasticity in

- Wnt-Mediated Repression via Bipartite DNA Recognition by TCF in the Hematopoietic System

- KDM6 Demethylase Independent Loss of Histone H3 Lysine 27 Trimethylation during Early Embryonic Development

- Integration of UPR and Oxidative Stress Signaling in the Control of Intestinal Stem Cell Proliferation

- miR171-Targeted Scarecrow-Like Proteins Bind to GT -Elements and Mediate Gibberellin-Regulated Chlorophyll Biosynthesis under Light Conditions

- Syndecan-1 Is Required to Maintain Intradermal Fat and Prevent Cold Stress

- LIN-3/EGF Promotes the Programmed Cell Death of Specific Cells in by Transcriptional Activation of the Pro-apoptotic Gene

- A System for Genome-Wide Histone Variant Dynamics In ES Cells Reveals Dynamic MacroH2A2 Replacement at Promoters

- Multiple Regulation of Rad51-Mediated Homologous Recombination by Fission Yeast Fbh1

- A Genome-Wide Association Study of the Maize Hypersensitive Defense Response Identifies Genes That Cluster in Related Pathways

- The RNA Helicases AtMTR4 and HEN2 Target Specific Subsets of Nuclear Transcripts for Degradation by the Nuclear Exosome in

- Asymmetric Division and Differential Gene Expression during a Bacterial Developmental Program Requires DivIVA

- A Model-Based Approach for Identifying Signatures of Ancient Balancing Selection in Genetic Data

- Chromatin Insulator Factors Involved in Long-Range DNA Interactions and Their Role in the Folding of the Drosophila Genome

- Conditional Inactivation of Upstream Binding Factor Reveals Its Epigenetic Functions and the Existence of a Somatic Nucleolar Precursor Body

- Evidence for Divisome Localization Mechanisms Independent of the Min System and SlmA in

- Patterns of Admixture and Population Structure in Native Populations of Northwest North America

- Response Regulator Heterodimer Formation Controls a Key Stage in S Development

- A Genetic Strategy to Measure Circulating Insulin Reveals Genes Regulating Insulin Production and Secretion

- EVA-1 Functions as an UNC-40 Co-receptor to Enhance Attraction to the MADD-4 Guidance Cue in

- Dysfunction of the CNS-Heart Axis in Mouse Models of Huntington's Disease

- An Otx/Nodal Regulatory Signature for Posterior Neural Development in Ascidians

- Phosphorylation of a Central Clock Transcription Factor Is Required for Thermal but Not Photic Entrainment

- Genome-Wide Patterns of Genetic Variation within and among Alternative Selective Regimes

- EF-P Dependent Pauses Integrate Proximal and Distal Signals during Translation

- Ku-Mediated Coupling of DNA Cleavage and Repair during Programmed Genome Rearrangements in the Ciliate

- Functional Specialization Among Members Of Knickkopf Family Of Proteins In Insect Cuticle Organization

- Playing RNase P Evolution: Swapping the RNA Catalyst for a Protein Reveals Functional Uniformity of Highly Divergent Enzyme Forms

- The Translational Regulators GCN-1 and ABCF-3 Act Together to Promote Apoptosis in

- Meta-Analysis of Genome-Wide Association Studies in African Americans Provides Insights into the Genetic Architecture of Type 2 Diabetes

- A -Regulatory Mutation of Causes Silky-Feather in Chickens

- VIB1, a Link between Glucose Signaling and Carbon Catabolite Repression, Is Essential for Plant Cell Wall Degradation by

- A Population Genetic Signal of Polygenic Adaptation

- A Conserved Dopamine-Cholecystokinin Signaling Pathway Shapes Context–Dependent Behavior

- The MAP Kinase p38 Is Part of Circadian Clock

- The Cohesin Subunit Rad21 Is Required for Synaptonemal Complex Maintenance, but Not Sister Chromatid Cohesion, during Drosophila Female Meiosis

- PLOS Genetics

- Archiv čísel

- Aktuální číslo

- Informace o časopisu

Nejčtenější v tomto čísle- Meta-Analysis of Genome-Wide Association Studies in African Americans Provides Insights into the Genetic Architecture of Type 2 Diabetes

- KDM6 Demethylase Independent Loss of Histone H3 Lysine 27 Trimethylation during Early Embryonic Development

- The RNA Helicases AtMTR4 and HEN2 Target Specific Subsets of Nuclear Transcripts for Degradation by the Nuclear Exosome in

- EF-P Dependent Pauses Integrate Proximal and Distal Signals during Translation

Kurzy

Zvyšte si kvalifikaci online z pohodlí domova

Současné možnosti léčby obezity

nový kurzAutoři: MUDr. Martin Hrubý

Všechny kurzyPřihlášení#ADS_BOTTOM_SCRIPTS#Zapomenuté hesloZadejte e-mailovou adresu, se kterou jste vytvářel(a) účet, budou Vám na ni zaslány informace k nastavení nového hesla.

- Vzdělávání