Methods for Specifying the Target Difference in a Randomised Controlled Trial: The Difference ELicitation in TriAls (DELTA) Systematic Review

Background:

Randomised controlled trials (RCTs) are widely accepted as the preferred study design for evaluating healthcare interventions. When the sample size is determined, a (target) difference is typically specified that the RCT is designed to detect. This provides reassurance that the study will be informative, i.e., should such a difference exist, it is likely to be detected with the required statistical precision. The aim of this review was to identify potential methods for specifying the target difference in an RCT sample size calculation.

Methods and Findings:

A comprehensive systematic review of medical and non-medical literature was carried out for methods that could be used to specify the target difference for an RCT sample size calculation. The databases searched were MEDLINE, MEDLINE In-Process, EMBASE, the Cochrane Central Register of Controlled Trials, the Cochrane Methodology Register, PsycINFO, Science Citation Index, EconLit, the Education Resources Information Center (ERIC), and Scopus (for in-press publications); the search period was from 1966 or the earliest date covered, to between November 2010 and January 2011. Additionally, textbooks addressing the methodology of clinical trials and International Conference on Harmonisation of Technical Requirements for Registration of Pharmaceuticals for Human Use (ICH) tripartite guidelines for clinical trials were also consulted. A narrative synthesis of methods was produced. Studies that described a method that could be used for specifying an important and/or realistic difference were included. The search identified 11,485 potentially relevant articles from the databases searched. Of these, 1,434 were selected for full-text assessment, and a further nine were identified from other sources. Fifteen clinical trial textbooks and the ICH tripartite guidelines were also reviewed. In total, 777 studies were included, and within them, seven methods were identified: anchor, distribution, health economic, opinion-seeking, pilot study, review of the evidence base, and standardised effect size.

Conclusions:

A variety of methods are available that researchers can use for specifying the target difference in an RCT sample size calculation. Appropriate methods may vary depending on the aim (e.g., specifying an important difference versus a realistic difference), context (e.g., research question and availability of data), and underlying framework adopted (e.g., Bayesian versus conventional statistical approach). Guidance on the use of each method is given. No single method provides a perfect solution for all contexts.

Please see later in the article for the Editors' Summary

Published in the journal: PLoS Med 11(5): e1001645. doi:10.1371/journal.pmed.1001645

Category: Research Article

Introduction

A randomised controlled trial (RCT) is widely regarded as the preferred study design for comparing the effectiveness of health interventions [1]. Central to the design and validity of an RCT is a calculation of the number of participants needed: the sample size. This provides reassurance that the study will be informative. Using the Neyman-Pearson method (a conventional approach to sample size calculation), a (target) difference that the RCT is designed to detect is typically specified.
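To make the role of the target difference concrete, the sketch below computes the per-group sample size for a two-arm parallel-group trial with a continuous outcome, using the standard normal-approximation formula n = 2(z₁₋α/₂ + z₁₋β)²σ²/δ², where δ is the target difference and σ the outcome SD. The function name and defaults (two-sided α = 0.05, 80% power, equal allocation, a known common SD) are illustrative assumptions rather than part of the review.

```python
import math
from statistics import NormalDist


def n_per_group(target_difference: float, sd: float,
                alpha: float = 0.05, power: float = 0.80) -> int:
    """Per-group sample size for a two-arm trial comparing means.

    Normal approximation, two-sided test, equal allocation, common SD.
    """
    if target_difference <= 0 or sd <= 0:
        raise ValueError("target_difference and sd must be positive")
    z = NormalDist()                    # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for two-sided alpha
    z_beta = z.inv_cdf(power)           # quantile giving the desired power
    n = 2 * (z_alpha + z_beta) ** 2 * sd ** 2 / target_difference ** 2
    return math.ceil(n)                 # round up to whole participants


# Target difference of half an SD versus a quarter of an SD (SD = 10 points).
print(n_per_group(5, 10))    # 63
print(n_per_group(2.5, 10))  # 252
```

Because n scales with 1/δ², halving the target difference roughly quadruples the required sample size, which is why the choice of δ weighs so heavily on study conduct and cost.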

Selecting an appropriate target difference is critical. If too small a target difference is specified, the trial may recruit more participants than necessary, which can be a wasteful and unethical use of data and resources. If too large a target difference is specified, there is a risk that a clinically relevant difference will be overlooked because the study is too small. Both extremes could therefore have a detrimental impact on decision-making [2]. Additionally, through its impact on sample size, the choice of target difference has substantial implications for study conduct and associated cost.

However, unlike the statistical considerations involved in sample size calculation, research on how to specify the target difference has been greatly neglected, with no substantive guidance available [3],[4]. While a variety of potential approaches have been proposed, such as specifying what an important difference would be (e.g., the “minimal clinically important difference”) or what a realistic difference would be given the results of previous studies, the current state of the evidence base is unclear. Although some reviews of different types of methods have been conducted [2],[5], there is still a need for a comprehensive review of available methods. The aim of this systematic review was to identify potential methods for specifying the target difference in an RCT sample size calculation, whether addressing an important difference (a difference viewed as important by a relevant stakeholder group [e.g., clinicians]) and/or a realistic difference (a difference that can be considered realistic given the interventions to be evaluated). The methods are described, and guidance is offered on their use.

Methods

A comprehensive search of both biomedical and selected non-biomedical databases was undertaken. Search strategies and databases searched were informed by preliminary scoping work. The final databases searched were MEDLINE, MEDLINE In-Process, EMBASE, the Cochrane Central Register of Controlled Trials, the Cochrane Methodology Register, PsycINFO, Science Citation Index, EconLit, Education Resources Information Center (ERIC), and Scopus (for in-press publications), from 1966 or the earliest date of coverage; the searches were undertaken between November 2010 and January 2011. Given the magnitude of the literature identified by this initial search, and the belief that updating the search would not lead to additional approaches to specifying the target difference, the search was not updated. There was no language restriction. It was anticipated that the reporting of methods in titles and abstracts would be of variable quality, and that reliance on indexing and text word searching alone would therefore be inadvisable. Consequently, several other methods were used to complement the electronic searching, including checking of reference lists, citation searching for key articles using Scopus and Web of Science, and contacting experts in the field. The protocol and details of the search strategies used are available in Protocol S1 and Search Strategy S1.

Additionally, textbooks covering methodological aspects of clinical trials were consulted. These textbooks were identified by searching the integrated catalogue of the British Library and the catalogues (for the most recent 5 y) of several prominent publishers of statistical texts. The project steering group was also asked to suggest key clinical trial textbooks that could be assessed. Because of the nature of the review, ethical approval was unnecessary.

To be included in this review, each study had to report a formal method that had been used or could be used to specify a target difference. Any study design for original research was eligible, provided its assessment was based on at least one outcome of relevance to a clinical trial. Studies were excluded only if they were reviews, failed to report a method for specifying a target difference, reported only on statistical sample size considerations rather than clinical relevance, or assessed an outcome measure (e.g., number needed to treat) without reference to how a difference could be determined.

Potentially relevant titles and abstracts were screened by either or both of two reviewers (J. H. or T. G.), with any uncertainties or disagreements discussed with a third party (J. A. C.). Full-text articles were obtained for the titles and abstracts identified as potentially relevant. These were provisionally categorised according to method of specifying the target difference (if detailed in the abstract). One of four reviewers (J. H., T. G., K. H., or T. E. A.) screened the full-text articles and extracted information, after having screened and extracted information from a practice sample of articles and compared results to ensure consistency in the screening process. Where there was uncertainty regarding whether or not a study should be included for data extraction, the opinion of a third party (J. A. C.) was sought, and the study was discussed until consensus was reached.

Data were extracted on the methodological details and any noteworthy features such as unique variations not found in other studies reporting the same method. Specific information relevant to each particular method was recorded, and no generic data extraction form was used across all methods. It was felt that a generic data extraction form that included all fields of relevance to all methods would be too cumbersome, because the methods varied in conception and implementation.

Narrative descriptions of each method were produced. Based on the extracted data, these summarised the key characteristics of each method: the similarities and differences between applications of the same method, the frequency with which each variant was used, and the method's strengths and weaknesses, either identified by the review team as potentially important or taken from study authors' own comments on the strengths and limitations of their method (or methods) as reported in the articles. Methods were assessed according to criteria developed by the steering group prior to undertaking the evidence synthesis; the criteria covered the validity, implementation, statistical properties, and applicability of each method. The initial assessment was carried out by J. A. C. and revised by the steering group.

Results

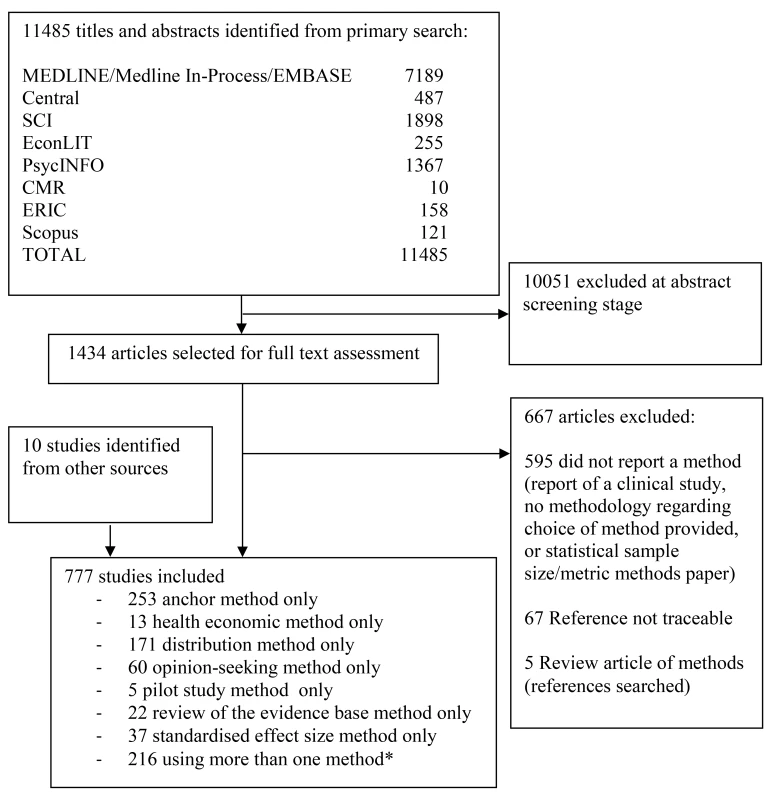

We identified 11,485 potentially relevant studies from the databases searched. Figure 1 (PRISMA flow diagram) details the number of studies found in each database and the number of studies included for each method.

Fig. 1. PRISMA flow diagram.

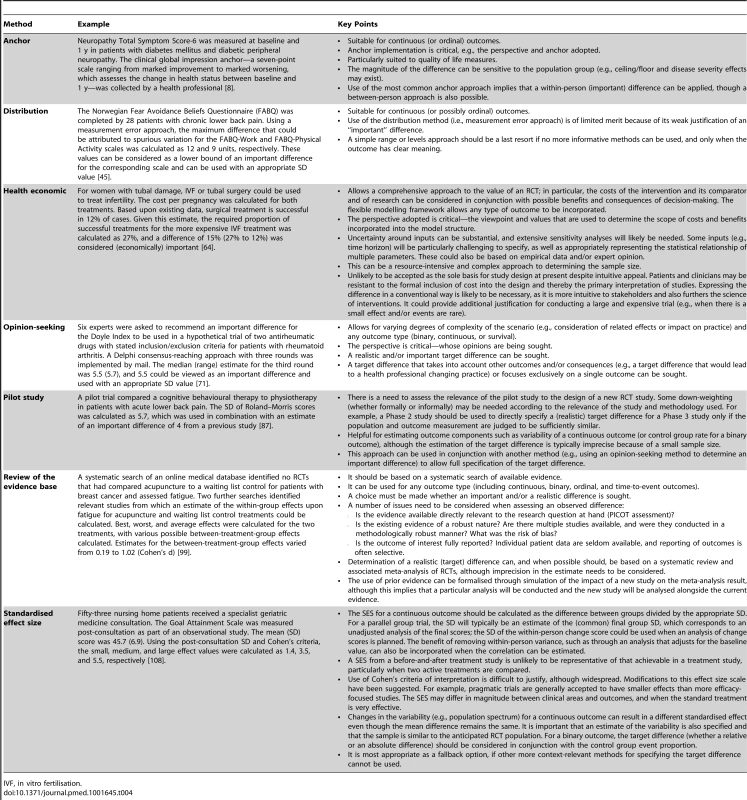

*For a breakdown of studies that used more than one method in combination, please see Table 1. Central, Cochrane Central Register of Controlled Trials; CMR, Cochrane Methodology Register; ERIC, Education Resources Information Center; SCI, Science Citation Index.

Of the potentially relevant studies identified, 1,434 were selected for full-text assessment; a further nine were identified from other sources. Fifteen clinical trial textbooks and the International Conference on Harmonisation of Technical Requirements for Registration of Pharmaceuticals for Human Use tripartite guidelines were also reviewed, though none identified a method that had not already been identified from the journal database searches. In total, 777 studies were included. Seven methods were identified: anchor, distribution, health economic, opinion-seeking, pilot study, review of the evidence base, and standardised effect size (SES). Descriptions of these methods are provided in Box 1. No methods were identified by this review beyond those already known to the reviewers. The anchor, distribution, opinion-seeking, review of the evidence base, and SES methods were used in studies in varied clinical and treatment areas, but predominantly in those pertaining to chronic diseases. Although far fewer studies were included for the health economic and pilot study methods, their real or hypothetical trial examples covered pharmacological and non-pharmacological treatments for both acute and chronic conditions.

Box 1. Methods for Specifying an Important and/or Realistic Difference

Methods for specifying an important difference

- Anchor: The outcome of interest can be “anchored” by using either a patient's or a health professional's judgement to define an important difference. This may be achieved by comparing a patient's health before and after treatment and then relating this change to whether the participant was judged to have shown improvement or deterioration. Alternatively, a more familiar outcome, for which patients or health professionals more readily agree on what amount of change constitutes an important difference, can be used. A third option is to contrast patients with one another to determine a meaningful difference.

- Distribution: Approaches that determine a value based upon distributional variation. A common approach is to use a value that is larger than the inherent imprecision in the measurement and therefore likely to represent a minimal level for a meaningful difference.

- Health economic: Approaches that use principles of economic evaluation. These typically include both resource cost and health outcomes, and define a threshold value for the cost of a unit of health effect that a decision-maker is willing to pay, to estimate the overall net benefit of treatment. The net benefit can be analysed in a frequentist framework or take the form of a (typically Bayesian) decision-theoretic value of information analysis.

- Standardised effect size: The magnitude of the effect on a standardised scale defines the value of the difference. For a continuous outcome, the standardised difference (most commonly expressed as Cohen's d “effect size”) can be used. Cohen's cutoffs of 0.2, 0.5, and 0.8 for small, medium, and large effects, respectively, are often used. Thus a “medium” effect corresponds simply to a change in the outcome of 0.5 SDs. Binary or survival (time-to-event) outcome metrics (e.g., an odds, risk, or hazard ratio) can be utilised in a similar manner, though no widely recognised cutoffs exist. Cohen's cutoffs correspond approximately to odds ratios of 1.44, 2.48, and 4.27, respectively. Corresponding risk ratio values vary according to the control group event proportion.

Methods for specifying a realistic difference

- Pilot study: A pilot (or preliminary) study may be carried out where there is little evidence, or even experience, to guide expectations and determine an appropriate target difference for the trial. In a similar manner, a Phase 2 study could be used to inform a Phase 3 study.

Methods for specifying an important and/or a realistic difference

- Opinion-seeking: The target difference can be based on opinions elicited from health professionals, patients, or others. Possible approaches include forming a panel of experts, surveying the membership of a professional or patient body, or interviewing individuals. This elicitation process can be explicitly framed within a trial context.

- Review of evidence base: The target difference can be derived using current evidence on the research question. Ideally, this would be from a systematic review or meta-analysis of RCTs. In the absence of randomised evidence, evidence from observational studies could be used in a similar manner. An alternative approach is to undertake a review of studies in which an important difference was determined.

Substantial variation between studies was found in the way the seven methods were implemented. In addition, some studies used several methods, although the combinations used varied, as did the extent to which results were triangulated. The anchor method was the most popular, used by 447 studies, of which 194 (43%) used it in combination with another method. The distribution method was used by 324 studies, of which 153 (47%) used it alongside another method. Eighty studies used an opinion-seeking method, of which 20 (25%) also used additional methods. Twenty-seven studies used a review of the evidence base method, of which five (19%) also used another method. Six studies used a pilot study method, of which one (17%) also used another method. The SES method was used by 166 studies, of which 129 (78%) also used another method. Thirteen studies used a health economic method.

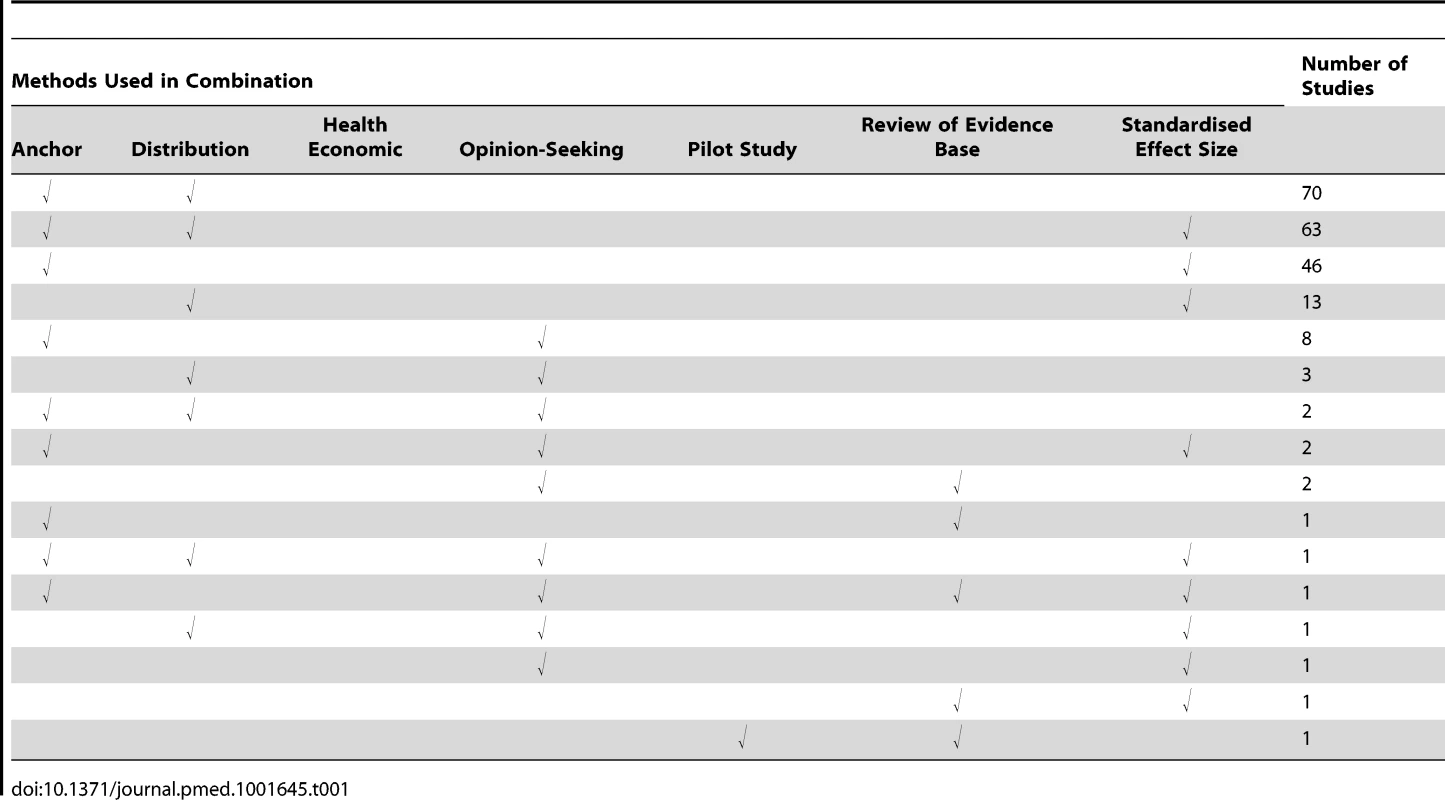

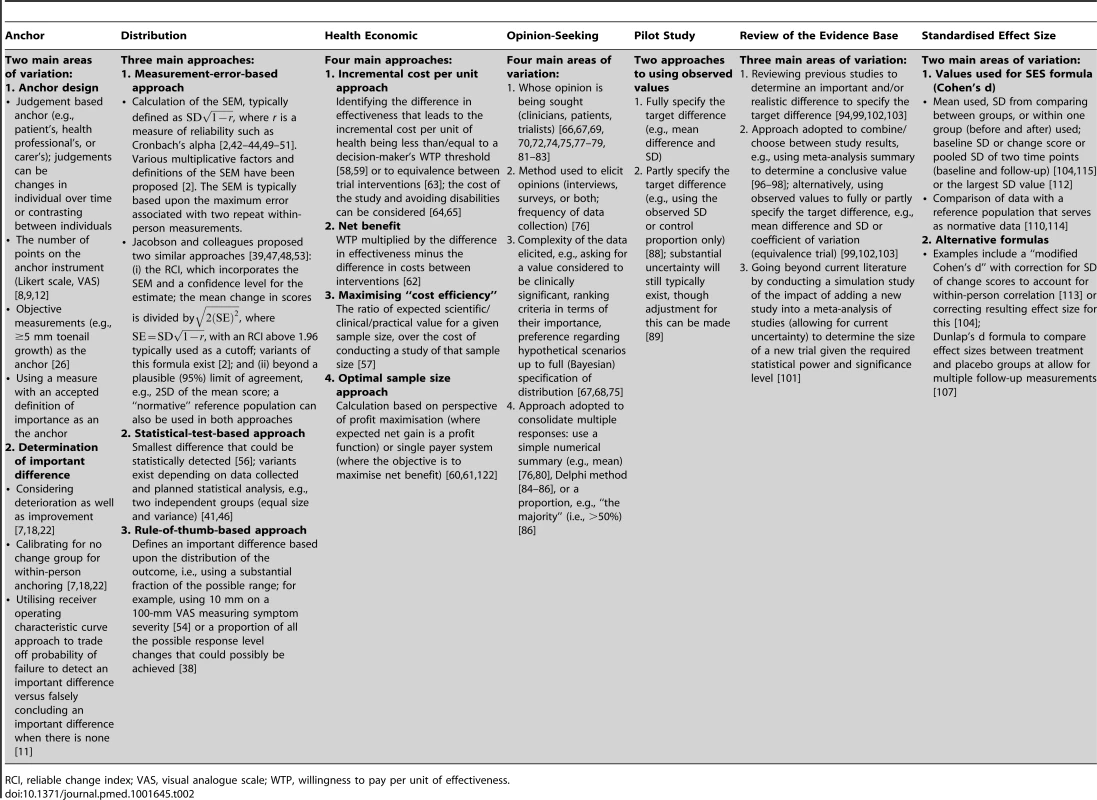

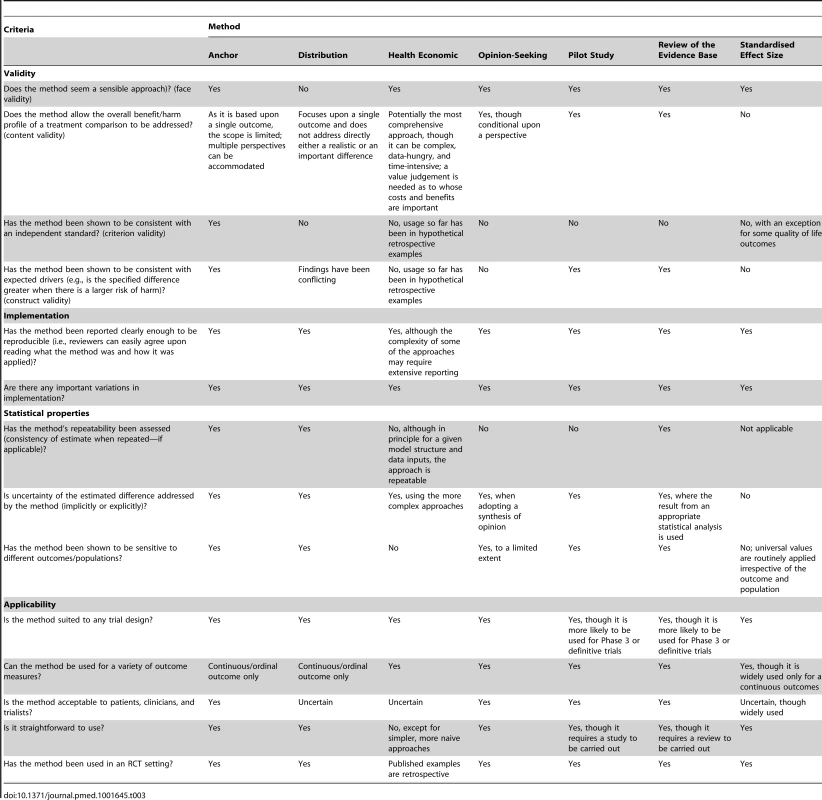

For all methods used in combination with others, Table 1 provides a breakdown of the combinations identified and their frequency. The main variations in implementation identified for each method are listed in Table 2 and described further in the text below, which also gives a brief summary of the literature for each method and for studies that used a combination of methods. Table 3 contains an assessment of the value of the individual methods, and Table 4 contains examples and key implementation points for the use of each method.

Tab. 1. Use of multiple methods.

Tab. 2. Main variations in implementation of the methods.

RCI, reliable change index; VAS, visual analogue scale; WTP, willingness to pay per unit of effectiveness.

Tab. 3. Assessment of the value of the methods.

Tab. 4. Usage of methods—examples and key implementation points.

IVF, in vitro fertilisation.

Anchor Method

Implementation of the anchor method varied greatly [6]–[37]. In its most basic form, the anchor method evaluates the minimal (clinically) important change in score for a particular instrument. This is established by calculating the mean change score (post-intervention minus pre-intervention) for that instrument among a group of patients for whom another instrument (the “anchor”) indicates that a minimum clinically important change has occurred. The anchor instrument, the number of response points available on it, and the corresponding labelling varied between applications. The anchor instrument was most often a subjective assessment of improvement (e.g., global rating of change), though objective measures of improvement could be used (e.g., a 15-letter change in visual acuity as measured on the Snellen eye chart) [34]. The anchor instrument was usually posed to patients alone [19],[35], though in some cases only clinicians' views were used. Older studies tended to use a 15-point Likert scale for the anchor instrument, as suggested by Jaeschke and colleagues [16]; more recent studies tended to use five- or seven-point scales instead. Depending upon the study size and/or clinical context, multiple points on the scale may need to be merged. For example, if a seven-point scale has been used but very few people rate themselves at the extremes of this scale (1 and 7), it may be possible to merge points 1 and 2 of the scale and points 6 and 7. This may not always be appropriate, however, depending on the clinical question under consideration.
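A minimal sketch of the basic anchor calculation: the mean change score on the instrument among patients whose anchor rating indicates a minimal important improvement. It assumes a hypothetical seven-point global rating of change coded 1 (much worse) to 7 (much better), with 4 as "no change" and 5 as "a little better"; the optional merging of sparse extreme categories mirrors the example in the text. The coding, the category chosen as "minimal", and the data are all invented for illustration.

```python
from statistics import mean

# Hypothetical data: (baseline score, follow-up score, anchor rating 1..7).
# Assumed anchor coding: 4 = no change, 5 = a little better.
records = [
    (40, 46, 5), (52, 57, 5), (35, 43, 5), (48, 49, 4),
    (50, 50, 4), (30, 45, 7), (44, 38, 2), (41, 48, 6),
]


def merge_extremes(rating: int) -> int:
    """Merge sparse extreme points: 1 -> 2 and 7 -> 6."""
    return {1: 2, 7: 6}.get(rating, rating)


def anchor_mid(records, minimal_category=5, merge=False):
    """Mean change (follow-up minus baseline) in the chosen anchor category."""
    changes = []
    for baseline, follow_up, rating in records:
        if merge:
            rating = merge_extremes(rating)
        if rating == minimal_category:
            changes.append(follow_up - baseline)
    if not changes:
        raise ValueError("no patients in the chosen anchor category")
    return mean(changes)


print(anchor_mid(records))                                  # about 6.33
print(anchor_mid(records, minimal_category=6, merge=True))  # 11
```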

Relative change can be incorporated by comparing those for whom an important change was identified to another patient subset (tested using the same instrument and anchor) who reported no change over time. Another common variation is to consider the percentage change score in the instrument under consideration [33], rather than the absolute score change. Determination of what constituted an important difference was sometimes based upon the use of methodology more typically used to assess diagnostic accuracy, such as receiver operating characteristic curves [6],[11],[20], or more complex statistical approaches. It is worth noting that the anchor method was not always successful in deriving values for an important difference; failure was usually due to either practical or methodological difficulties [17],[23].
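The receiver operating characteristic variant can be sketched by treating the anchor classification (improved versus not improved) as the reference standard and choosing the change-score threshold that best separates the two groups. Here "best" is taken to mean maximising Youden's J (sensitivity + specificity − 1), which is one common choice among several; the function name and the data are hypothetical.

```python
def youden_cutoff(changes, improved):
    """Return (cutoff, J) maximising Youden's J over observed change scores.

    changes: change scores on the instrument.
    improved: booleans from the anchor (True = judged improved).
    A patient is classified as improved when change >= cutoff.
    """
    n_pos = sum(improved)
    n_neg = len(improved) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need both improved and non-improved patients")
    best_cutoff, best_j = None, -1.0
    for cutoff in sorted(set(changes)):
        tp = sum(1 for c, y in zip(changes, improved) if y and c >= cutoff)
        tn = sum(1 for c, y in zip(changes, improved) if not y and c < cutoff)
        j = tp / n_pos + tn / n_neg - 1  # sensitivity + specificity - 1
        if j > best_j:
            best_cutoff, best_j = cutoff, j
    return best_cutoff, best_j


changes = [1, 2, 3, 4, 6, 7, 8, 9]
improved = [False, False, True, False, True, True, True, True]
cutoff, j = youden_cutoff(changes, improved)
print(cutoff, round(j, 2))  # 6 0.8
```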

A substantially different way of achieving an anchor-based approach for specifying an important difference was proposed by Redelmeier and colleagues [28]: in this study, other patients formed a reference against which a patient could rate their own health (or health improvement) [10],[27]–[30]. Generalisability of the resulting estimate of an important difference is a key concern. For example, if the disease is chronic and progressive, an important change value from a newly diagnosed population may not apply to a population with a far longer duration of illness [15],[24],[25],[32],[36]. A key consideration is how to decide on an appropriate cutoff point for the anchor “transition” tool.

Participant biases, such as recall bias, are also potentially problematic [13],[14],[21],[22],[25], as are response shift (whereby patients' perceptions of acceptable change alter during the course of disease or treatment and become inconsistent) [37] and gratitude factor or halo bias (whereby patients give responses that are more favourable than is realistic, which needs to be taken into account) [31],[35]. Another key choice is whether to consider improvement and deterioration together or separately. If a Likert scale has been used as the anchor, improvement and deterioration can be merged to obtain one more general measure for “change” by “folding” the scale at zero, though this assumes symmetry of effect, with “no change” centred upon zero difference. This approach may be unrealistic because of response biases and regression to the mean, and is inappropriate if patients are likely to rate improvements in their health differently from how they would rate deterioration with the same condition. The method proposed by Redelmeier and colleagues, where other participants act as the anchor, avoids recall bias because all data can be collected at the same time, though it may not be a universally appropriate method, as participants might find it difficult to discuss particularly sensitive or private health issues with others.

Distribution Method

Three distinct distribution approaches were found [38]–[56]: measurement error, statistical test, and rule of thumb. The measurement error approach determines a value that is larger than the inherent imprecision in the measurement and that is therefore likely to be consistently noticed by patients. The most common approach for determining this value was based upon the standard error of measurement (SEM). The SEM can be defined in various ways, with different multiplicative factors suggested as signifying a non-trivial (important) difference.
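One widely used definition of the SEM combines the outcome's SD with a reliability coefficient (for example, a test–retest intraclass correlation): SEM = SD × √(1 − r). The sketch below uses that definition and a multiplier k, since different multiples of the SEM have been proposed as marking a non-trivial difference. The function names, the input values, and the multiplier 1.96 used in the example are illustrative assumptions.

```python
import math


def sem(sd: float, reliability: float) -> float:
    """Standard error of measurement: SD * sqrt(1 - reliability)."""
    if not 0 <= reliability <= 1:
        raise ValueError("reliability must lie in [0, 1]")
    return sd * math.sqrt(1 - reliability)


def sem_threshold(sd: float, reliability: float, k: float = 1.0) -> float:
    """Candidate important difference: k multiples of the SEM."""
    return k * sem(sd, reliability)


# Hypothetical instrument: SD 10 points, test-retest reliability 0.84.
print(round(sem(10, 0.84), 2))                   # 4.0
print(round(sem_threshold(10, 0.84, 1.96), 2))   # 7.84
```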

The most commonly used alternative to the SEM method (although it can be thought of as an extension of this approach) was the reliable change index proposed by Jacobson and Truax [47], which incorporates confidence around the measurement error. For the statistical test approach, a “minimal detectable difference” (the smallest difference that could be statistically detected for a given sample size) is calculated. This is then used as a guide for interpreting the presence of an “important” difference in that study. The rule-of-thumb approach defines an important difference based on the distribution of the outcome, such as using a substantial fraction of the possible range without further justification (e.g., 10 mm on a 100-mm visual analogue scale measuring symptom severity being viewed as a substantial shift in outcome response) [54].
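The reliable change index and the minimal detectable difference can each be written in a few lines. For the RCI, the sketch uses the form commonly attributed to Jacobson and Truax: an individual's change divided by the standard error of a difference, S_diff = √2 × SEM, with |RCI| > 1.96 treated as reliable change at roughly the 5% level. For the minimal detectable difference, it inverts the normal-approximation sample-size formula for two independent groups of n participants each. Function names and example values are hypothetical.

```python
import math
from statistics import NormalDist


def reliable_change_index(before: float, after: float, sem: float) -> float:
    """RCI = (after - before) / S_diff, where S_diff = sqrt(2) * SEM."""
    s_diff = math.sqrt(2) * sem
    return (after - before) / s_diff


def is_reliable_change(before, after, sem, z_crit=1.96):
    """True when |RCI| exceeds the chosen critical value."""
    return abs(reliable_change_index(before, after, sem)) > z_crit


def minimal_detectable_difference(n_per_group: int, sd: float,
                                  alpha: float = 0.05,
                                  power: float = 0.80) -> float:
    """Smallest difference detectable with n per group (two-sided test)."""
    z = NormalDist()
    z_sum = z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)
    return z_sum * sd * math.sqrt(2 / n_per_group)


print(is_reliable_change(40, 52, sem=4.0))              # True
print(is_reliable_change(40, 50, sem=4.0))              # False
print(round(minimal_detectable_difference(63, 10), 2))  # 4.99
```

Because the minimal detectable difference is a function of n, it describes what a given study could detect rather than what difference would matter to patients or clinicians.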

Measurement error and rule-of-thumb approaches are widely used, but do not translate straightforwardly to an RCT target difference. This is because for measurement error approaches, assessment is typically based on test–retest (within-person) data, whereas many trials are of parallel group (between-person) design. Additionally, measurement error is not suitable as the sole basis for determining the importance of a particular target difference. More generally, the setting and timing of data collection may also be important to the calculation of measurement error (e.g., results may vary between pre- and post-treatment) [52]. The statistical test approach cannot be used to specify a priori a target difference in an RCT sample size calculation, as the observed precision of the statistical test is conditional on the sample size. Rule-of-thumb approaches are dependent upon the outcome having inherent value (e.g., Glasgow coma scale), where a substantial fraction of a unit change (e.g., one-third or one-half) can be viewed as important.

Health Economic Method

The approaches included under the health economic method typically involve defining a threshold value for the cost of a unit of health effect that a decision-maker is willing to pay and using this threshold to construct a “net benefit” that combines both resource cost and health outcomes [57]–[65]. The extent to which data on the differences in costs, benefits, and harms are used depends on the decision and perspective adopted (e.g., treatment x is better than treatment y when the net benefit for x is greater than that for y, i.e., the incremental net benefit for x compared to y is positive) [62]. The net benefit approach can be extended into a decision-theoretic model in order to undertake a value of information analysis [60],[61],[65], which seeks to address the value of removing the current uncertainty regarding the choice of treatment. The optimal sample size of a new study given the current evidence and the decision faced can be calculated. The perspective of the decision-maker is critical, i.e., whether decisions are made from the standpoint of clinicians, patients, funders, policy-makers, or some combination.
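A minimal sketch of the net benefit logic for two treatments, x and y: with a willingness-to-pay threshold λ per unit of health effect, the incremental net benefit is INB = λΔE − ΔC, and x is preferred when INB > 0. The second function gives a Monte Carlo estimate of the per-patient expected value of perfect information (EVPI) for this two-option decision, assuming, purely for illustration, that current uncertainty about ΔE and ΔC is independent and normal; a real value of information analysis would rest on a full decision model. Function names and all parameter values are hypothetical.

```python
import random


def incremental_net_benefit(delta_effect: float, delta_cost: float,
                            wtp: float) -> float:
    """INB of x versus y: wtp * delta_effect - delta_cost."""
    return wtp * delta_effect - delta_cost


def evpi_two_options(mean_de, sd_de, mean_dc, sd_dc, wtp,
                     n_draws=100_000, seed=1):
    """Per-patient EVPI for choosing between x and y (y as reference, NB 0).

    EVPI = E[max(INB, 0)] - max(E[INB], 0): the expected gain from always
    choosing correctly versus choosing on current expectations.
    """
    rng = random.Random(seed)  # seeded for reproducibility
    inbs = [
        incremental_net_benefit(rng.gauss(mean_de, sd_de),
                                rng.gauss(mean_dc, sd_dc), wtp)
        for _ in range(n_draws)
    ]
    value_perfect_info = sum(max(v, 0.0) for v in inbs) / n_draws
    value_current_info = max(sum(inbs) / n_draws, 0.0)
    return value_perfect_info - value_current_info


# Hypothetical: 0.5 QALYs gained at 6,000 extra cost, threshold 20,000/QALY.
print(incremental_net_benefit(0.5, 6000, 20000))  # 4000.0
print(evpi_two_options(0.5, 0.3, 6000, 2000, 20000))
```

Scaling the per-patient EVPI by the number of patients affected by the decision gives an upper bound on what further research could be worth; the review's more sophisticated approaches go further and search for the sample size that maximises the expected net gain of a new study.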

More sophisticated modelling approaches can potentially allow a comprehensive evaluation of the treatment decision and the potential value of a new study, though they require strong assumptions about, for example, different measurements of effectiveness, harms, uptake, adherence, costs of interventions, and the cost of new research. The increased complexity, the gap between the input requirements of the more sophisticated modelling approaches and the data that are typically available, and the need to be explicit upfront about how all the evidence is synthesised perhaps explain the limited use of these modelling approaches in practice to date.

Opinion-Seeking Method

The opinion-seeking method determines a value (or a plausible range of values) for the target difference by asking one or more individuals to state their view on what value or values for a particular difference would be important and/or realistic [66]–[86]. The identified studies varied widely in whose opinion was sought (e.g., patients, clinicians, or trialists), the method of selecting individual experts (e.g., literature search, mailing list, or conference attendance), and the number of experts consulted. Other variations included the method used to elicit values (e.g., interview or survey), the complexity of the data elicited, and the method used to consolidate results into an overall value or range of values for the difference.
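One simple way to consolidate elicited values, sketched here with invented survey responses, is to summarise them by their median and interquartile range, giving a single value and a plausible range for the target difference. This choice is illustrative only; panels may instead use consensus rounds or formal pooling of elicited distributions.

```python
from statistics import median, quantiles

# Hypothetical responses: each clinician's stated important difference
# (points on the outcome scale).
responses = [4, 5, 5, 6, 6, 6, 7, 8, 10, 12]


def consolidate(values):
    """Return (median, lower quartile, upper quartile) of elicited values."""
    if len(values) < 2:
        raise ValueError("need at least two responses")
    q1, _, q3 = quantiles(values, n=4)  # default 'exclusive' method
    return median(values), q1, q3


print(consolidate(responses))  # (6.0, 5.0, 8.5)
```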

One advantage of the opinion-seeking method is the ease with which it can be carried out (e.g., through a survey). However, estimates will vary according to the specified population. Additionally, different perspectives (e.g., patient versus health professional) may lead to very different estimates of what is important and/or realistic [73]. Also, the views of approached individuals may not necessarily be representative of the wider community. Furthermore, some methods for eliciting opinions have feasibility constraints (e.g., face-to-face methods), but alternative approaches for capturing the views of a larger number of experts require careful planning or may be subject to low response rates or partial responses [77].

Pilot Study Method

A small number of studies used a pilot study method to determine a relevant value for the target difference [87]–[90]. A pilot study can be defined as running the intended study in miniature prior to conducting the actual trial, to guide expectations on an appropriate value for the target difference. The simplest approach is to use the observed effect in the pilot study as the target difference in an RCT. More sophisticated approaches account for imprecision in the estimate from the pilot study and/or use the pilot study to estimate only the standard deviation (SD) (or control group event proportion) and not the target difference.
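One way to account for the imprecision of a pilot estimate, when the pilot is used only to estimate the SD, is to carry forward an upper confidence limit for the SD rather than the point estimate (an 80% one-sided limit is used here as an example). The sketch assumes normally distributed data, uses the Wilson–Hilferty approximation to the chi-square quantile so that only the Python standard library is needed, and then feeds the inflated SD into the usual per-group sample-size formula. Function names, pilot values, and the target difference are hypothetical.

```python
import math
from statistics import NormalDist

_Z = NormalDist()  # standard normal


def chi2_quantile_wh(p: float, df: int) -> float:
    """Approximate chi-square quantile (Wilson-Hilferty approximation)."""
    z = _Z.inv_cdf(p)
    a = 2 / (9 * df)
    return df * (1 - a + z * math.sqrt(a)) ** 3


def sd_upper_limit(pilot_sd: float, pilot_n: int, level: float = 0.80) -> float:
    """One-sided upper confidence limit for the SD from a pilot sample."""
    if pilot_n < 2:
        raise ValueError("pilot_n must be at least 2")
    df = pilot_n - 1
    # (n - 1) s^2 / sigma^2 ~ chi-square(df); invert its lower quantile.
    return pilot_sd * math.sqrt(df / chi2_quantile_wh(1 - level, df))


def n_per_group(target_difference, sd, alpha=0.05, power=0.80):
    """Normal-approximation per-group sample size, two-sided test."""
    z_sum = _Z.inv_cdf(1 - alpha / 2) + _Z.inv_cdf(power)
    return math.ceil(2 * (z_sum * sd / target_difference) ** 2)


pilot_sd, pilot_n, delta = 10.0, 20, 5.0
sd_ucl = sd_upper_limit(pilot_sd, pilot_n)
print(round(sd_ucl, 1))              # 11.8
print(n_per_group(delta, pilot_sd))  # 63, using the point estimate
print(n_per_group(delta, sd_ucl))    # larger, reflecting pilot imprecision
```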

However, there are practical difficulties in conducting a pilot study that may limit the relevance of results [87], most notably the inherent uncertainty in results due to the small study sample size, rendering the effect size imprecise and unreliable. Additionally, a pilot study can address only a realistic difference and does not inform what an important difference would be. Finally, it is worth noting that an internal pilot study, using the initial recruits within a larger study, cannot be used to pre-specify the target difference, though it could inform an adaptive update [90]. Notwithstanding the above critique, a pilot study can have a valuable role in addressing feasibility issues (e.g., recruitment challenges) that may need to be considered in a larger trial [89]. Pilot studies are most useful when they can be readily and quickly conducted. While few studies addressed using a pilot study to inform the specification of the target difference, trialists may use pilot studies to help determine the target difference without reporting this formally in trial reports.

Review of the Evidence Base Method

Implementation of the review of the evidence base method varied regarding what studies and results were considered as part of the review and how the findings of different studies were combined [91]–[103]. The most common approach involved implementing a pre-specified strategy for reviewing the evidence base for either a particular instrument or variety of instruments to identify an important difference. Alternatively, pre-existing studies for a specific research question may be used (e.g., using the pooled estimate of a meta-analysis) to determine the target difference [100]. Extending this general approach, Sutton and colleagues [101] derived a distribution for the effect of treatment from the meta-analysis, from which they then simulated the effect of a “new” study; the result of this study was added to the existing meta-analysis data, which were then re-analysed. Implicitly this adopts a realistic difference as the basis for the target difference.
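Using a pooled meta-analytic estimate as the target difference can be sketched with a fixed-effect, inverse-variance weighted mean. This is one standard pooling model (a random-effects model would widen the uncertainty when studies are heterogeneous), and the study results below are invented for illustration.

```python
import math


def fixed_effect_pool(estimates, standard_errors):
    """Inverse-variance weighted pooled estimate and its standard error."""
    if not estimates or len(estimates) != len(standard_errors):
        raise ValueError("need matching, non-empty inputs")
    weights = [1 / se ** 2 for se in standard_errors]
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total
    return pooled, math.sqrt(1 / total)


# Hypothetical mean differences (points) and standard errors from three RCTs.
effects = [4.0, 6.0, 5.0]
ses = [2.0, 2.0, 1.0]
pooled, se = fixed_effect_pool(effects, ses)
low, high = pooled - 1.96 * se, pooled + 1.96 * se
print(f"pooled {pooled:.2f} (95% CI {low:.2f} to {high:.2f})")
# pooled 5.00 (95% CI 3.40 to 6.60)
```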

Reviewing the existing evidence base is valuable as it provides a rationale for choosing an important and/or realistic target difference. This general approach is probably often used informally, though few studies have addressed how it should be done formally. However, estimates identified from existing evidence may not be appropriate for the population being considered for the trial, so the generalisability of the available studies and their susceptibility to bias should be considered. For reviews of studies that identified an important difference, the methods used in each individual study to determine that difference are subject to the practical issues described here for that method (e.g., the anchor method). Imprecision of the estimate is also an important consideration, and publication bias may be an issue if a review of the evidence base considers only published data. If a meta-analysis of previous results is used to determine a sample size, evidence published after the meta-analysis search was conducted may make it necessary to update the sample size.

Standardised Effect Size Method

This method is commonly used to judge the importance of a difference in an outcome by comparing it with other possible effect sizes on a standardised scale [88],[104]–[116]. Overwhelmingly, studies used the guidelines suggested by Cohen [106] for the Cohen's d metric, i.e., 0.2, 0.5, and 0.8 for small, medium, and large effects, respectively, in the context of a continuous outcome. Other SES metrics exist for continuous (e.g., Dunlap's d), binary (e.g., odds ratio), and survival (e.g., hazard ratio) outcomes [106],[111],[116]. Most of the literature relates to within-group SESs for a continuous outcome. The SD used should reflect the anticipated RCT population as far as possible.
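Cohen's d already expresses the target difference in SD units, so it can be plugged directly into a standard sample size formula. The sketch below is a minimal illustration, not a procedure taken from the review. It assumes:

- a two-arm parallel trial with equal allocation;
- a two-sided test at α = 0.05 with 90% power;
- the normal approximation n per group = 2(z_{1−α/2} + z_{1−β})²/d², which slightly understates the t-test requirement.

```python
import math
from statistics import NormalDist


def n_per_group(d, alpha=0.05, power=0.9):
    """Per-group sample size for a two-sided, two-sample comparison of means,
    using the normal approximation and a standardised target difference d."""
    z = NormalDist().inv_cdf
    return math.ceil(2 * (z(1 - alpha / 2) + z(power)) ** 2 / d ** 2)


for label, d in [("small", 0.2), ("medium", 0.5), ("large", 0.8)]:
    print(f"{label:>6} (d = {d}): {n_per_group(d)} per group")
```

With these settings, Cohen's small, medium, and large benchmarks correspond to 526, 85, and 33 participants per group, respectively. The choice of benchmark therefore drives trial size very strongly.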

The main benefit of using an SES method is that it can be readily calculated and compared across different outcomes, conditions, studies, settings, and people, because all differences are translated into a common metric. It is also easy to calculate the SES from existing evidence if studies have reported sufficient information. The Cohen guidelines for small, medium, and large effects can be converted into equivalent values for binary metrics (e.g., 1.44, 2.48, and 4.27, respectively, for the odds ratio) [105]. As noted above, SES metrics are commonly used for binary (e.g., odds ratio or risk ratio) and survival (e.g., hazard ratio) outcomes in medical research [111], and a similar approach can readily be adopted for such outcomes. However, no equivalent guideline values are in widespread use for these outcome types. Informally, a doubling or halving of a ratio is sometimes seen as a marker of a large relative effect [109].
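The odds ratio values quoted above follow from the conversion in [105]. That method treats ln(OR) as a difference on a logistic scale, whose SD is π/√3, so that ln(OR) = d × π/√3. A minimal sketch, with hypothetical function names:

```python
import math

# Chinn's conversion [105]: ln(OR) = d * pi / sqrt(3)
SCALE = math.pi / math.sqrt(3)


def d_to_odds_ratio(d):
    """Odds ratio corresponding to a standardised mean difference d."""
    return math.exp(d * SCALE)


def odds_ratio_to_d(odds_ratio):
    """Standardised mean difference corresponding to an odds ratio."""
    return math.log(odds_ratio) / SCALE


for label, d in [("small", 0.2), ("medium", 0.5), ("large", 0.8)]:
    print(f"{label:>6}: d = {d} -> OR = {d_to_odds_ratio(d):.2f}")
```

For comparison, the same conversion maps an odds ratio of 2 to d ≈ 0.38.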

It is important to note that SES values are not uniquely defined: different combinations of values on the original scale can produce the same SES value. For the standard Cohen's d statistic, different combinations of mean difference and SD produce the same SES estimate. For example, a mean difference (SD) of 5 (10) and one of 2 (4) both give a standardised effect of 0.5 SD. As a consequence, specifying the target difference as an SES alone is sufficient for the sample size calculation. It can nonetheless be viewed as insufficient, because it does not actually define the target difference on the outcome measure of interest. A further limitation of the SES is the difficulty of determining why different effect sizes are seen in different studies. For example, the differences may be due to the outcome measure, intervention, setting, participants, or study methodology.
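The non-uniqueness of the SES can be shown with the numbers in the example above. In this minimal sketch (function names are hypothetical), both raw-scale scenarios map to the same d. Converting a target d back to the original scale only works once an SD has been assumed.

```python
def standardised_effect(mean_difference, sd):
    """Cohen's d-style standardised effect: mean difference in SD units."""
    return mean_difference / sd


def raw_target_difference(ses, assumed_sd):
    """Target difference on the original scale implied by an SES and an assumed SD."""
    return ses * assumed_sd


# Two different raw-scale scenarios give the same standardised effect
print(standardised_effect(5, 10), standardised_effect(2, 4))

# Converting a target SES of 0.5 back depends entirely on the assumed SD
for sd in (4, 10):
    print(f"SD = {sd}: target difference = {raw_target_difference(0.5, sd)}")
```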

Combining Methods

The vast majority of studies that combined methods used two or three of the anchor, distribution, and SES methods. Studies that used multiple methods were not always clear in describing whether and how results were triangulated, and for certain combinations the result of one method seemed to be considered of greater value than the result of another method (i.e., as if a primary and supplementary method had been selected). For example, values that were found using the anchor method were often chosen over effect size results or distribution-based estimates [117]. Alternatively, the most conservative value was chosen, regardless of the comparative robustness of the methods used [118]. In cases where the results of the different methods were similar, triangulation of the results was straightforward [119].
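Converting each candidate difference into a sample size shows the practical consequence of picking the most conservative value. The estimates below are invented for illustration: an assumed outcome SD of 10 and hypothetical anchor, distribution, and SES results. The calculation uses the normal-approximation formula for a two-arm trial with a two-sided α = 0.05 and 90% power.

```python
import math
from statistics import NormalDist


def n_per_group(target_difference, sd, alpha=0.05, power=0.9):
    """Per-group n for a two-arm comparison of means (normal approximation)."""
    z = NormalDist().inv_cdf
    return math.ceil(2 * ((z(1 - alpha / 2) + z(power)) * sd / target_difference) ** 2)


# Hypothetical candidate target differences on the raw outcome scale
sd = 10.0
candidates = {"anchor": 6.0, "distribution (0.5 SD)": 5.0, "SES (d = 0.3)": 3.0}

for method, delta in candidates.items():
    print(f"{method:>22}: difference {delta} -> {n_per_group(delta, sd)} per group")

# 'Most conservative' = smallest difference, which implies the largest trial
conservative = min(candidates, key=candidates.get)
print("most conservative:", conservative, n_per_group(candidates[conservative], sd))
```

In this invented example the candidate values differ by a factor of two. The implied per-group sizes, however, range from 59 to 234, so the choice between triangulation strategies matters.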

Discussion

This comprehensive systematic review summarises approaches for specifying the target difference in an RCT sample size calculation. Of the seven identified methods, the anchor, distribution, and SES methods were the most widely used. There are several reasons for the popularity of these methods:

- ease of use;
- their usefulness in studies validating quality of life instruments;
- the simplicity of calculating distribution and SES estimates alongside the anchor method.

While most studies adopted (though typically implicitly) the conventional Neyman-Pearson statistical framework, some of the methods (i.e., health economic and opinion-seeking) are particularly suited to a Bayesian framework.

No further methods were identified by this review beyond the seven methods pre-identified from a scoping search. However, substantial variations in implementation were noted, even for relatively simple approaches such as the anchor method, and many studies used multiple methods. Most studies focused on continuous outcomes, although other outcome types were considered using opinion-seeking and evidence base review. While the methods could in principle be used for any type of RCT, they are most relevant to the design of Phase 3, or “definitive”, trials.

A number of key issues were common across the methods. First, it is critical to decide whether the focus is to determine an important and/or a realistic difference. Some methods can be used for both (e.g., opinion-seeking), and some for only one or the other (e.g., the anchor method to determine an important difference and the pilot study method to determine a realistic difference). It is also important to evaluate how a candidate difference was determined and the context in which it was determined. Some approaches commonly used for specifying an important difference either cannot be used for specifying a target difference (such as the statistical test approach) or do not translate straightforwardly into the typical RCT context (for example, the measurement error approach). The anchor, opinion-seeking, and health economic methods explicitly involve judgment, and the perspective taken in the study is a key consideration regarding their use. Consequently, these methods allow different perspectives to be considered explicitly and, in particular, enable the views of patients and the public to be part of the decision-making process.

Some methodological issues are specific to particular methods. For example, the necessity of choosing a cutoff point to define an “important” difference/change is specific to the anchor method. This approach is a widely recognised part of the validation process for new quality of life instruments, where the scale has no inherent meaning without reference to an outside marker (i.e., anchor).
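One common way of implementing the anchor method is to group patients by their answer to a global rating-of-change question. The mean change score in the category judged to be minimally important is then taken as the important difference. The sketch below uses invented data and hypothetical category labels. The choice of `minimal_category` is exactly the cutoff decision discussed above. The optional subtraction of the mean change in the stable group is one of several variants in use.

```python
from statistics import mean

# Hypothetical change scores (follow-up minus baseline), grouped by each
# patient's answer to a global rating-of-change anchor question.
change_by_anchor = {
    "much worse": [-9, -12, -8],
    "a little worse": [-4, -2, -5, -3],
    "about the same": [0, 1, 2, 1, -1, 3],
    "a little better": [3, 5, 4, 6, 2, 4],
    "much better": [9, 11, 8, 12],
}


def anchor_based_mid(groups, minimal_category="a little better",
                     stable_category="about the same", subtract_stable=False):
    """Mean change in the anchor category treated as minimally important.

    If subtract_stable is True, the mean change among patients reporting no
    change is subtracted, to allow for drift unrelated to perceived change.
    """
    mid = mean(groups[minimal_category])
    if subtract_stable:
        mid -= mean(groups[stable_category])
    return mid


print("MID (a little better):", anchor_based_mid(change_by_anchor))
print("MID (minus stable group):",
      anchor_based_mid(change_by_anchor, subtract_stable=True))
```

Moving the cutoff to a different category (for example "much better") changes the estimate substantially. This illustrates why the cutoff decision needs to be justified.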

All three approaches of the distribution method—measurement error, statistical test, and rule of thumb—have clear limitations, the foremost being that they do not match the setting of a standard RCT design (two parallel groups). The statistical test approach cannot be used to specify a target difference, given that it is essentially a rearranged sample size formula. The rule-of-thumb approach is dependent upon the interpretability of the individual scale.
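The three distribution-based quantities can be written down in a few lines, which also shows why the statistical test approach is circular. Function names and example values in this sketch are hypothetical. Two of the quantities describe individual-level change:

- the half-SD rule of thumb;
- a reliable-change threshold in the style of Jacobson and Truax [47], 1.96 × √2 × SEM, with SEM = SD × √(1 − reliability).

By contrast, `detectable_difference` simply rearranges the two-sample sample size formula. Whatever sample size is fed in, it returns the difference that size can detect.

```python
import math
from statistics import NormalDist


def half_sd_rule(sd):
    """Rule-of-thumb approach: half a standard deviation."""
    return 0.5 * sd


def standard_error_of_measurement(sd, reliability):
    """Measurement error approach: SEM = SD * sqrt(1 - reliability)."""
    return sd * math.sqrt(1.0 - reliability)


def reliable_change_threshold(sd, reliability, z=1.96):
    """Smallest individual change exceeding measurement error (RCI-style)."""
    return z * math.sqrt(2.0) * standard_error_of_measurement(sd, reliability)


def detectable_difference(n_per_group, sd, alpha=0.05, power=0.9):
    """Two-sample sample size formula solved for the difference instead of n."""
    z = NormalDist().inv_cdf
    return (z(1 - alpha / 2) + z(power)) * sd * math.sqrt(2.0 / n_per_group)


sd, reliability = 10.0, 0.9  # hypothetical outcome SD and test-retest reliability
print(f"half SD:          {half_sd_rule(sd):.2f}")
print(f"SEM:              {standard_error_of_measurement(sd, reliability):.2f}")
print(f"reliable change:  {reliable_change_threshold(sd, reliability):.2f}")
for n in (50, 85, 200):
    print(f"n = {n:>3} per group -> 'difference' {detectable_difference(n, sd):.2f}")
```

The loop makes the circularity visible: the "difference" is determined entirely by the chosen n, so it cannot be used to justify that n.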

The SES method was used in a substantial number of studies for a continuous outcome but was rarely reported for non-continuous outcomes, although informal use of such an approach is probably widespread. No parallel set of guideline values exists for a binary outcome, though odds ratio values approximately equivalent to Cohen's d values can be used. The validity of Cohen's cutoffs is uncertain (despite their widespread use), and some modifications to the original values have been proposed [120],[121].

The opinion-seeking method was often used with multiple strategies involved in the process (e.g., questionnaires being sent to experts using particular sampling methods, followed by an additional conference being organised to discuss findings in more detail). The Delphi technique for survey development and the nominal group technique for face-to-face meetings are commonly used and are potentially useful for this type of research when developing instruments. In terms of planning a trial, the opinion-seeking method can be relatively easy to implement, but the resulting usefulness of the estimated target difference may depend on the robustness of the approach used to elicit opinions.
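Elicited values across Delphi rounds are often summarised by the median and interquartile range. The following sketch uses invented responses from eight hypothetical experts. Its consensus rule (an IQR of at most 2 points) is an assumption made for illustration, not a standard taken from the review. `statistics.quantiles` is used with its default "exclusive" method; other quartile definitions give slightly different IQRs.

```python
from statistics import median, quantiles

# Hypothetical elicited target differences (outcome scale points) per round
rounds = {
    1: [3, 5, 8, 4, 10, 6, 5, 7],
    2: [5, 5, 6, 4, 6, 5, 6, 5],
}
IQR_CONSENSUS_LIMIT = 2.0  # assumed consensus threshold


def summarise_round(responses):
    """Median and interquartile range of one round's responses."""
    q1, _, q3 = quantiles(responses, n=4)
    return median(responses), q3 - q1


for round_number, responses in rounds.items():
    med, iqr = summarise_round(responses)
    status = "consensus" if iqr <= IQR_CONSENSUS_LIMIT else "no consensus"
    print(f"round {round_number}: median {med}, IQR {iqr:.2f} ({status})")
```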

The health economic and pilot study methods were infrequently reported as specific methods. For the health economic method, this is likely due to the complexity of the method and/or the resource-intensive procedures required to conduct the theoretically more robust variants that have been developed. The use of pilot studies to determine the target difference is problematic. Pilot studies are probably useful only for estimating the control group event proportion (for a binary outcome) or the SD (for a continuous outcome). Internal pilot studies may be incorporated into the start of larger clinical trials but are not useful for specifying the target difference, though they could be used to revise the sample size calculation. The review of the evidence base method can be applied to identify an important difference, a realistic difference, or both; a pilot study addresses only a realistic difference. For both methods, applicability to the anticipated study and the impact of statistical uncertainty on the estimates should be considered.
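The simplest form of health economic reasoning about a target difference asks how large an effect would be needed for the intervention to be cost-effective. The sketch below is a deliberately simplified illustration, not a reconstruction of any specific method in the review. It assumes a known incremental cost per patient and a willingness-to-pay threshold per QALY, both hypothetical. It then finds the effect at which incremental net monetary benefit becomes zero.

```python
def net_monetary_benefit(delta_effect, delta_cost, threshold):
    """Incremental net monetary benefit: threshold * effect gain - extra cost."""
    return threshold * delta_effect - delta_cost


def break_even_effect(delta_cost, threshold):
    """Smallest effect (e.g. QALYs per patient) with non-negative net benefit."""
    return delta_cost / threshold


delta_cost = 600.0    # hypothetical extra cost per patient
threshold = 20000.0   # hypothetical willingness to pay per QALY
target = break_even_effect(delta_cost, threshold)
print(f"break-even effect: {target:.3f} QALYs per patient")
print(f"net benefit at that effect: {net_monetary_benefit(target, delta_cost, threshold):.2f}")
```

The fuller variants cited in the review replace these fixed inputs with distributions and are considerably more demanding. An example is the approach based on the expected value of information [65].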

A review of the evidence base approach for a particular outcome measurement or study population may be combined with any of the other methods identified for establishing an important difference. However, the number of studies reporting a formal method for identifying an important difference using the existing evidence was surprisingly small. It could be that there is wide variation in the extent to which reviews of the existing evidence base have been undertaken prospectively using a specific and formal strategy.

Some methods can be readily used with others, potentially increasing the robustness of their findings. The anchor and distribution methods were often used together within the same study, frequently also with the SES approach. Multiple methods for specifying an important difference were used in some studies, though the combinations varied, as did the extent to which results were triangulated. The result of one method may validate the result found using another method, but conflicting estimates increase uncertainty over the estimate of an important difference.

Strengths and Limitations

To our knowledge, this review is the first comprehensive and systematic search for all possible methods for specifying a target difference. The search strategy was inclusive, robust, and logical; however, it also retrieved a large number of studies that did not report a method for specifying an important and/or realistic difference. Also, some studies may have been missed because of the lack of standardised terminology. Finally, our search period ended in January 2011, and a method other than the seven identified by this review may have been published since then, although we believe this is unlikely. The use of new variations in the implementation of existing methods is more likely.

Conclusions

A variety of methods are available that researchers can use for specifying the target difference in an RCT sample size calculation. Appropriate methods and implementation vary according to the aim (e.g., specifying an important difference versus a realistic difference), context (research question and availability of data), and underlying framework adopted (Bayesian versus conventional statistical approach). No single method provides a perfect solution for all contexts. Some methods for specifying an important difference (e.g., a statistical test–based approach) are inappropriate in the RCT sample size context. Further research is required to determine the best uses of some methods, particularly the health economic, opinion-seeking, pilot study, and SES methods. Prospective comparisons of methods in the context of RCT design may also be useful. Better reporting of the basis upon which the target difference was determined is needed [122].

Supporting Information

References

1. Altman DG, Schulz KF, Moher D, Egger M, Davidoff F, et al. (2001) The revised CONSORT statement for reporting randomized trials: explanation and elaboration. Ann Intern Med 134: 663–694.

2. Copay AG, Subach BR, Glassman SD, Polly J, Schuler TC (2007) Understanding the minimum clinically important difference: a review of concepts and methods. Spine J 7: 541–546.

3. Lenth RV (2001) Some practical guidelines for effective sample size determination. Am Stat 55: 187–193.

4. Lenth RV (2001) “A first course in the design of experiments: a linear models approach” by Weber & Skillings: book review. Am Stat 55: 370.

5. Wells G, Beaton D, Shea B, Boers M, Simon L, et al. (2001) Minimal clinically important differences: review of methods. J Rheumatol 28: 406–412.

6. Aletaha D, Funovits J, Ward MM, Smolen JS, Kvien TK (2009) Perception of improvement in patients with rheumatoid arthritis varies with disease activity levels at baseline. Arthritis Rheum 61: 313–320.

7. Barber BL, Santanello NC, Epstein RS (1996) Impact of the global on patient perceivable change in an asthma specific QOL questionnaire. Qual Life Res 5: 117–122.

8. Bastyr EJ III, Price KL, Bril V; MBBQ Study Group (2005) Development and validity testing of the neuropathy total symptom score-6: questionnaire for the study of sensory symptoms of diabetic peripheral neuropathy. Clin Ther 27: 1278–1294.

9. Beninato M, Gill-Body KM, Salles S, Stark PC, Black-Schaffer RM, et al. (2006) Determination of the minimal clinically important difference in the FIM instrument in patients with stroke. Arch Phys Med Rehabil 87: 32–39.

10. Brant R, Sutherland L, Hilsden R (1999) Examining the minimum important difference. Stat Med 18: 2593–2603.

11. DeRogatis LR, Graziottin A, Bitzer J, Schmitt S, Koochaki PE, et al. (2009) Clinically relevant changes in sexual desire, satisfying sexual activity and personal distress as measured by the profile of female sexual function, sexual activity log, and personal distress scale in postmenopausal women with hypoactive sexual desire disorder. J Sex Med 6: 175–183.

12. Deyo RA, Inui TS (1984) Toward clinical applications of health status measures: sensitivity of scales to clinically important changes. Health Serv Res 19: 275–289.

13. Eberle E, Ottillinger B (1999) Clinically relevant change and clinically relevant difference in knee osteoarthritis. Osteoarthritis Cartilage 7: 502–503.

14. Fritz JM, Hebert J, Koppenhaver S, Parent E (2009) Beyond minimally important change: defining a successful outcome of physical therapy for patients with low back pain. Spine 34: 2803–2809.

15. Glassman SD, Copay AG, Berven SH, Polly DW, Subach BR, et al. (2008) Defining substantial clinical benefit following lumbar spine arthrodesis. J Bone Joint Surg Am 90: 1839–1847.

16. Jaeschke R, Singer J, Guyatt GH (1989) Measurement of health status. Ascertaining the minimal clinically important difference. Control Clin Trials 10: 407–415.

17. Kawata AK, Revicki DA, Thakkar R, Jiang P, Krause S, et al. (2009) Flushing ASsessment Tool (FAST): psychometric properties of a new measure assessing flushing symptoms and clinical impact of niacin therapy. Clin Drug Investig 29: 215–229.

18. Khanna D, Tseng CH, Furst DE, Clements PJ, Elashoff R, et al. (2009) Minimally important differences in the Mahler's Transition Dyspnoea Index in a large randomized controlled trial: results from the Scleroderma Lung Study. Rheumatology (Oxford) 48: 1537–1540.

19. Kragt JJ, Nielsen IM, van der Linden FA, Uitdehaag BM, Polman CH (2006) How similar are commonly combined criteria for EDSS progression in multiple sclerosis? Mult Scler 12: 782–786.

20. Kvamme MK, Kristiansen IS, Lie E, Kvien TK (2010) Identification of cutpoints for acceptable health status and important improvement in patient-reported outcomes, in rheumatoid arthritis, psoriatic arthritis, and ankylosing spondylitis. J Rheumatol 37: 26–31.

21. Mannion AF, Porchet F, Kleinstück FS, Lattig F, Jeszenszky D, et al. (2009) The quality of spine surgery from the patient's perspective: part 2. Minimal clinically important difference for improvement and deterioration as measured with the Core Outcome Measures Index. Eur Spine J 18 (Suppl 3): 374–379.

22. Metz SM, Wyrwich KW, Babu AN, Kroenke K, Tierney WM, et al. (2006) A comparison of traditional and Rasch cut points for assessing clinically important change in health-related quality of life among patients with asthma. Qual Life Res 15: 1639–1649.

23. Pepin V, Laviolette L, Brouillard C, Sewell L, Singh SJ, et al. (2011) Significance of changes in endurance shuttle walking performance. Thorax 66: 115–120.

24. Piva SR, Gil AB, Moore CG, Fitzgerald GK (2009) Responsiveness of the activities of daily living scale of the knee outcome survey and numeric pain rating scale in patients with patellofemoral pain. J Rehabil Med 41: 129–135.

25. Pope JE, Khanna D, Norrie D, Ouimet JM (2009) The minimally important difference for the health assessment questionnaire in rheumatoid arthritis clinical practice is smaller than in randomized controlled trials. J Rheumatol 36: 254–259.

26. Potter LP, Mathias SD, Raut M, Kianifard F, Tavakkol A (2006) The OnyCOE-t questionnaire: responsiveness and clinical meaningfulness of a patient-reported outcomes questionnaire for toenail onychomycosis. Health Qual Life Outcomes 4: 50.

27. Pouchot J, Kherani RB, Brant R, Lacaille D, Lehman AJ, et al. (2008) Determination of the minimal clinically important difference for seven fatigue measures in rheumatoid arthritis. J Clin Epidemiol 61: 705–713.

28. Redelmeier DA, Guyatt GH, Goldstein RS (1996) Assessing the minimal important difference in symptoms: a comparison of two techniques. J Clin Epidemiol 49: 1215–1219.

29. Ringash J, Bezjak A, O'Sullivan B, Redelmeier DA (2004) Interpreting differences in quality of life: the FACT-H&N in laryngeal cancer patients. Qual Life Res 13: 725–733.

30. Ringash J, O'Sullivan B, Bezjak A, Redelmeier DA (2007) Interpreting clinically significant changes in patient-reported outcomes. Cancer 110: 196–202.

31. Santanello NC, Zhang J, Seidenberg B, Reiss TF, Barber BL (1999) What are minimal important changes for asthma measures in a clinical trial? Eur Respir J 14: 23–27.

32. Sekhon S, Pope J; Canadian Scleroderma Research Group; Baron M (2010) The minimally important difference in clinical practice for patient-centered outcomes including health assessment questionnaire, fatigue, pain, sleep, global visual analog scale, and SF-36 in scleroderma. J Rheumatol 37: 591–598.

33. Spiegel B, Bolus R, Harris LA, Lucak S, Naliboff B, et al. (2009) Measuring irritable bowel syndrome patient-reported outcomes with an abdominal pain numeric rating scale. Aliment Pharmacol Ther 30: 1159–1170.

34. Suner IJ, Kokame GT, Yu E, Ward J, Dolan C, et al. (2009) Responsiveness of NEI VFQ-25 to changes in visual acuity in neovascular AMD: validation studies from two phase 3 clinical trials. Invest Ophthalmol Vis Sci 50: 3629–3635.

35. Tafazal SI, Sell PJ (2006) Outcome scores in spinal surgery quantified: excellent, good, fair and poor in terms of patient-completed tools. Eur Spine J 15: 1653–1660.

36. Tashjian RZ, Deloach J, Green A, Porucznik CA, Powell AP (2010) Minimal clinically important differences in ASES and simple shoulder test scores after nonoperative treatment of rotator cuff disease. J Bone Joint Surg Am 92: 296–303.

37. ten Klooster PM, Drossaers-Bakker KW, Taal E, van de Laar MA (2006) Patient-perceived satisfactory improvement (PPSI): interpreting meaningful change in pain from the patient's perspective. Pain 121: 151–157.

38. Abrams P, Kelleher C, Huels J, Quebe-Fehling E, Omar MA, et al. (2008) Clinical relevance of health-related quality of life outcomes with darifenacin. BJU Int 102: 208–213.

39. Asenlof P, Denison E, Lindberg P (2006) Idiographic outcome analyses of the clinical significance of two interventions for patients with musculoskeletal pain. Behav Res Ther 44: 947–965.

40. Bowersox NW, Saunders SM, Wojcik JV (2009) An evaluation of the utility of statistical versus clinical significance in determining improvement in alcohol and other drug (AOD) treatment in correctional settings. Alcohol Treat Q 27: 113–129.

41. Bridges TS, Farrar JD (1997) The influence of worm age, duration of exposure and endpoint selection on bioassay sensitivity for Neanthes arenaceodentata (Annelida: Polychaeta). Environ Toxicol Chem 16: 1650–1658.

42. Duru G, Fantino B (2008) The clinical relevance of changes in the Montgomery-Asberg Depression Rating Scale using the minimum clinically important difference approach. Curr Med Res Opin 24: 1329–1335.

43. Fitzpatrick R, Norquist JM, Jenkinson C (2004) Distribution-based criteria for change in health-related quality of life in Parkinson's disease. J Clin Epidemiol 57: 40–44.

44. Gnat R, Kuszewski M, Koczar R, Dziewonska A (2010) Reliability of the passive knee flexion and extension tests in healthy subjects. J Manipulative Physiol Ther 33: 659–665.

45. Grotle M, Brox JI, Vøllestad NK (2006) Reliability, validity and responsiveness of the fear-avoidance beliefs questionnaire: methodological aspects of the Norwegian version. J Rehabil Med 38: 346–353.

46. Hanson ML, Sanderson H, Solomon KR (2003) Variation, replication, and power analysis of Myriophyllum spp. microcosm toxicity data. Environ Toxicol Chem 22: 1318–1329.

47. Jacobson NS, Truax P (1991) Clinical significance: a statistical approach to defining meaningful change in psychotherapy research. J Consult Clin Psychol 59: 12–19.

48. Kendall PC, Marrs-Garcia A, Nath SR, Sheldrick RC (1999) Normative comparisons for the evaluation of clinical significance. J Consult Clin Psychol 67: 285–299.

49. Krebs EE, Bair MJ, Damush TM, Tu W, Wu J, et al. (2010) Comparative responsiveness of pain outcome measures among primary care patients with musculoskeletal pain. Med Care 48: 1007–1014.

50. Modi AC, Zeller MH (2008) Validation of a parent-proxy, obesity-specific quality-of-life measure: sizing them up. Obesity 16: 2624–2633.

51. Movsas B, Scott C, Watkins-Bruner D (2006) Pretreatment factors significantly influence quality of life in cancer patients: a Radiation Therapy Oncology Group (RTOG) analysis. Int J Radiat Oncol Biol Phys 65: 830–835.

52. Newnham EA, Harwood KE, Page AC (2007) Evaluating the clinical significance of responses by psychiatric inpatients to the mental health subscales of the SF-36. J Affect Disord 98: 91–97.

53. Pekarik G, Wolff CB (1996) Relationship of satisfaction to symptom change, follow-up adjustment, and clinical significance. Prof Psychol Res Pr 27: 202–208.

54. Sarna L, Cooley ME, Brown JK, Chernecky C, Elashoff D, et al. (2008) Symptom severity 1 to 4 months after thoracotomy for lung cancer. Am J Crit Care 17: 455–467.

55. Seggar LB, Lambert MJ, Hansen NB (2002) Assessing clinical significance: application to the Beck Depression Inventory. Behav Ther 33: 253–269.

56. van der Hoeven N (2008) Calculation of the minimum significant difference at the NOEC using a non-parametric test. Ecotoxicol Environ Saf 70: 61–66.

57. Bacchetti P, McCulloch CE, Segal MR (2008) Simple, defensible sample sizes based on cost efficiency. Biometrics 64: 577–585.

58. Briggs AH, Gray AM (1998) Power and sample size calculations for stochastic cost-effectiveness analysis. Med Decis Making 18: S81–S92.

59. Detsky AS (1990) Using cost-effectiveness analysis to improve the efficiency of allocating funds to clinical trials. Stat Med 9: 173–184.

60. Gittins JC, Pezeshk H (2002) A decision theoretic approach to sample size determination in clinical trials. J Biopharm Stat 12: 535–551.

61. Kikuchi T, Pezeshk H, Gittins J (2008) A Bayesian cost-benefit approach to the determination of sample size in clinical trials. Stat Med 27: 68–82.

62. O'Hagan A, Stevens JW (2001) Bayesian assessment of sample size for clinical trials of cost-effectiveness. Med Decis Making 21: 219–230.

63. Samsa GP, Matchar DB (2001) Have randomized controlled trials of neuroprotective drugs been underpowered? An illustration of three statistical principles. Stroke 32: 669–674.

64. Torgerson DJ, Ryan M, Ratcliffe J (1995) Economics in sample size determination for clinical trials. QJM 88: 517–521.

65. Willan AR (2008) Optimal sample size determinations from an industry perspective based on the expected value of information. Clin Trials 5: 587–594.

66. Aarabi M, Skinner J, Price CE, Jackson PR (2008) Patients' acceptance of antihypertensive therapy to prevent cardiovascular disease: a comparison between South Asians and Caucasians in the United Kingdom. Eur J Prev Cardiol 15: 59–66.

67. Allison DB, Elobeid MA, Cope MB, Brock DW, Faith MS, et al. (2010) Sample size in obesity trials: patient perspective versus current practice. Med Decis Making 30: 68–75.

68. Barrett B, Brown D, Mundt M, Brown R (2005) Sufficiently important difference: expanding the framework of clinical significance. Med Decis Making 25: 250–261.

69. Barrett B, Brown R, Mundt M, Dye L, Alt J, et al. (2005) Using benefit harm tradeoffs to estimate sufficiently important difference: the case of the common cold. Med Decis Making 25: 47–55.

70. Barrett B, Harahan B, Brown D, Zhang Z, Brown R (2007) Sufficiently important difference for common cold: severity reduction. Ann Fam Med 5: 216–223.

71. Bellamy N, Anastassiades TP, Buchanan WW, Davis P, Lee P, et al. (1991) Rheumatoid arthritis antirheumatic drug trials. III. Setting the delta for clinical trials of antirheumatic drugs: results of a consensus development (Delphi) exercise. J Rheumatol 18: 1908–1915.

72. Bellm LA, Cunningham G, Durnell L, Eilers J, Epstein JB, et al. (2002) Defining clinically meaningful outcomes in the evaluation of new treatments for oral mucositis: oral mucositis patient provider advisory board. Cancer Invest 20: 793–800.

73. Bloom LF, Lapierre NM, Wilson KG, Curran D, DeForge DA, et al. (2006) Concordance in goal setting between patients with multiple sclerosis and their rehabilitation team. Am J Phys Med Rehabil 85: 807–813.

74. Boers M, Tugwell P (1993) OMERACT conference questionnaire results. OMERACT Committee. J Rheumatol 20: 552–554.

75. Burgess P, Trauer T, Coombs T, McKay R, Pirkis J (2009) What does ‘clinical significance’ mean in the context of the Health of the Nation Outcome Scales? Australas Psychiatry 17: 141–148.

76. Fried BJ, Boers M, Baker PR (1993) A method for achieving consensus on rheumatoid arthritis outcome measures: the OMERACT conference process. J Rheumatol 20: 548–551.

77. Kirkby HM, Wilson S, Calvert M, Draper H (2011) Using e-mail recruitment and an online questionnaire to establish effect size: a worked example. BMC Med Res Methodol 11: 89.

78. Mosca M, Lockshin M, Schneider M, Liang MH, Albrecht J, et al. (2007) Response criteria for cutaneous SLE in clinical trials. Clin Exp Rheumatol 25: 666–671.

79. Rider LG, Giannini EH, Harris-Love M, Joe G, Isenberg D, et al. (2003) Defining clinical improvement in adult and juvenile myositis. J Rheumatol 30: 603–617.

80. Stone MA, Inman RD, Wright JG, Maetzel A (2004) Validation exercise of the Ankylosing Spondylitis Assessment Study (ASAS) group response criteria in ankylosing spondylitis patients treated with biologics. Arthritis Rheum 51: 316–320.

81. Tubach F, Ravaud P, Beaton D, Boers M, Bombardier C, et al. (2007) Minimal clinically important improvement and patient acceptable symptom state for subjective outcome measures in rheumatic disorders. J Rheumatol 34: 1188–1193.

82. Wells G, Anderson J, Boers M, Felson D, Heiberg T, et al. (2003) MCID/Low Disease Activity State Workshop: summary, recommendations, and research agenda. J Rheumatol 30: 1115–1118.

83. Wong RK, Gafni A, Whelan T, Franssen E, Fung K (2002) Defining patient-based minimal clinically important effect sizes: a study in palliative radiotherapy for painful unresectable pelvic recurrences from rectal cancer. Int J Radiat Oncol Biol Phys 54: 661–669.

84. Wyrwich KW, Nelson HS, Tierney WM, Babu AN, Kroenke K, et al. (2003) Clinically important differences in health-related quality of life for patients with asthma: an expert consensus panel report. Ann Allergy Asthma Immunol 91: 148–153.

85. Wyrwich KW, Fihn SD, Tierney WM, Kroenke K, Babu AN, et al. (2003) Clinically important changes in health-related quality of life for patients with chronic obstructive pulmonary disease: an expert consensus panel report. J Gen Intern Med 18: 196–202.

86. Wyrwich KW, Spertus JA, Kroenke K, Tierney WM, Babu AN, et al. (2004) Clinically important differences in health status for patients with heart disease: an expert consensus panel report. Am Heart J 147: 615–622.

87. Johnstone R, Donaghy M, Martin D (2002) A pilot study of a cognitive-behavioural therapy approach to physiotherapy, for acute low back pain patients, who show signs of developing chronic pain. Adv Physiother 4: 182–188.

88. Kraemer HC, Mintz J, Noda A, Tinklenberg J, Yesavage JA (2006) Caution regarding the use of pilot studies to guide power calculations for study proposals. Arch Gen Psychiatry 63: 484–489.

89. Salter GC, Roman M, Bland MJ, MacPherson H (2006) Acupuncture for chronic neck pain: a pilot for a randomised controlled trial. BMC Musculoskelet Disord 7: 99.

90. Thabane L, Ma J, Chu R, Cheng J, Ismaila A, et al. (2010) A tutorial on pilot studies: the what, why and how. BMC Med Res Methodol 10: 1.

91. Blumenauer B (2003) Quality of life in patients with rheumatoid arthritis: which drugs might make a difference? Pharmacoeconomics 21: 927–940.

92. Bombardier C, Hayden J, Beaton DE (2001) Minimal clinically important difference. Low back pain: outcome measures. J Rheumatol 28: 431–438.

93. Campbell JD, Gries KS, Watanabe JH, Ravelo A, Dmochowski RR, et al. (2009) Treatment success for overactive bladder with urinary urge incontinence refractory to oral antimuscarinics: a review of published evidence. BMC Urol 9: 18.

94. Cranney A, Welch V, Wells G, Adachi J, Shea B, et al. (2001) Discrimination of changes in osteoporosis outcomes. J Rheumatol 28: 413–421.

95. Feise RJ, Menke JM (2010) Functional Rating Index: literature review. Med Sci Monit 16: RA25–RA36.

96. MullerU, DuetzMS, RoederC, GreenoughCG (2004) Condition-specific outcome measures for low back pain: part I: validation. Eur Spine J 13 : 301–313.

97. RevickiDA, FeenyD, HuntTL, ColeBF (2006) Analyzing oncology clinical trial data using the Q-TWiST method: clinical importance and sources for health state preference data. Qual Life Res 15 : 411–423.

98. SchünemannHJ, GoldsteinR, MadorMJ, McKimD, StahlE, et al. (2005) A randomised trial to evaluate the self-administered standardised chronic respiratory questionnaire. Eur Respir J 25 : 31–40.

99. JohnstonMF, HaysRD, HuiKK (2009) Evidence-based effect size estimation: an illustration using the case of acupuncture for cancer-related fatigue. BMC Complement Altern Med 9 : 1.

100. Julious SA (2006) Designing clinical trials with uncertain estimates. London: University of London.

101. SuttonAJ, CooperNJ, JonesDR, LambertPC, ThompsonJR, et al. (2007) Evidence-based sample size calculations based upon updated meta-analysis. Stat Med 26 : 2479–2500.

102. ThomasJR, LochbaumMR, LandersDM, HeC (1997) Planning significant and meaningful research in exercise science: estimating sample size. Res Q Exerc Sport 68 : 33–43.

103. ZanenP, LammersJW (1995) Sample sizes for comparative inhaled corticosteroid trials with emphasis on showing therapeutic equivalence. Eur J Clin Pharmacol 48 : 179–184.

104. AndrewMK, RockwoodK (2008) A five-point change in Modified Mini-Mental State Examination was clinically meaningful in community-dwelling elderly people. J Clin Epidemiol 61 : 827–831.

105. ChinnS (2000) A simple method for converting an odds ratio to effect size for use in meta-analysis. Stat Med 19 : 3127–3131.

106. Cohen J (1977) Statistical power analysis for the behavioral sciences. New York: Academic Press.

107. Fredrickson A, Snyder PJ, Cromer J, Thomas E, Lewis M, et al. (2008) The use of effect sizes to characterize the nature of cognitive change in psychopharmacological studies: an example with scopolamine. Hum Psychopharmacol 23: 425–436.

108. Gordon JE, Powell C, Rockwood K (1999) Goal attainment scaling as a measure of clinically important change in nursing-home patients. Age Ageing 28: 275–281.

109. Hackshaw AK (2009) A concise guide to clinical trials. Oxford: Wiley-Blackwell.

110. Harris MA, Greco P, Wysocki T, White NH (2001) Family therapy with adolescents with diabetes: a litmus test for clinically meaningful change. Fam Syst Health 19: 159–168.

111. Higgins JPT, Green S, editors (2011) Cochrane handbook for systematic reviews of interventions, version 5.1.0. Available: http://www.cochrane-handbook.org/. Accessed 8 Apr 2014.

112. Horton AM (1980) Estimation of clinical significance: a brief note. Psychol Rep 47: 141–142.

113. Howard R, Phillips P, Johnson T, O'Brien J, Sheehan B, et al. (2011) Determining the minimum clinically important differences for outcomes in the DOMINO trial. Int J Geriatr Psychiatry 26: 812–817.

114. Klassen AF (2005) Quality of life of children with attention deficit hyperactivity disorder. Expert Rev Pharmacoecon Outcomes Res 5: 95–103.

115. Krakow B, Melendrez D, Sisley B, Warner TD, Krakow J, et al. (2006) Nasal dilator strip therapy for chronic sleep-maintenance insomnia and symptoms of sleep-disordered breathing: a randomized controlled trial. Sleep Breath 10: 16–28.

116. Woods SW, Stolar M, Sernyak MJ, Charney DS (2001) Consistency of atypical antipsychotic superiority to placebo in recent clinical trials. Biol Psychiatry 49: 64–70.

117. Wyrwich K, Harnam N, Revicki DA, Locklear JC, Svedsäter H, et al. (2009) Assessing health-related quality of life in generalized anxiety disorder using the Quality Of Life Enjoyment and Satisfaction Questionnaire. Int Clin Psychopharmacol 24: 289–295.

118. Arbuckle RA, Humphrey L, Vardeva K, Arondekar B, Danten-Viala M, et al. (2009) Psychometric evaluation of the Diabetes Symptom Checklist-Revised (DSC-R)—a measure of symptom distress. Value Health 12: 1168–1175.

119. Funk GF, Karnell LH, Smith RB, Christensen AJ (2004) Clinical significance of health status assessment measures in head and neck cancer: what do quality-of-life scores mean? Arch Otolaryngol Head Neck Surg 130: 825–829.

120. Cocks K, King MT, Velikova G, Martyn St-James M, Fayers PM, et al. (2011) Evidence-based guidelines for determination of sample size and interpretation of the European Organisation for the Research and Treatment of Cancer Quality of Life Questionnaire Core 30. J Clin Oncol 29: 89–96.

121. Machin D, Day S, Green S, editors (2006) Textbook of clinical trials. Chichester: John Wiley.

122. Cook JA, Hislop J, Altman DG, Briggs AH, Fayers PM, et al. (2014) Use of methods for specifying the target difference in randomised controlled trial sample size calculations: two surveys of trialists' practice. Clin Trials. E-pub ahead of print. doi:10.1177/1740774514521907


This article was published in PLOS Medicine, 2014, Issue 5.